The UK Wants Apple to Play Nanny on Nude Photos

Getty Images / Unsplash+


Several US states have recently passed laws requiring Apple to verify the ages of its users to ensure that minors can’t download age-restricted apps from the App Store. However, the UK appears to be working on a plan to take this one step further.

So far, new legislation in states like Texas has focused almost exclusively on the App Store, Google Play Store, and other app marketplaces. The goal of these laws is to make Apple and Google the gatekeepers, verifying the ages of all their users so that individual app developers don't need to implement their own age checks. Under these new state laws, users under 18 will need to be under parental supervision, which, for Apple, means placing them in a Family Sharing group.

However, UK officials feel that this kind of age verification needs to go beyond simply restricting which apps minors can download. According to the Financial Times, the country's Home Office is looking at having Apple and Google prevent nude photos from being captured, viewed, or shared by anyone who is underage.

Ministers want the likes of Apple and Google to incorporate nudity-detection algorithms into their device operating systems to prevent users taking photos or sharing images of genitalia unless they are verified as adults.

Financial Times

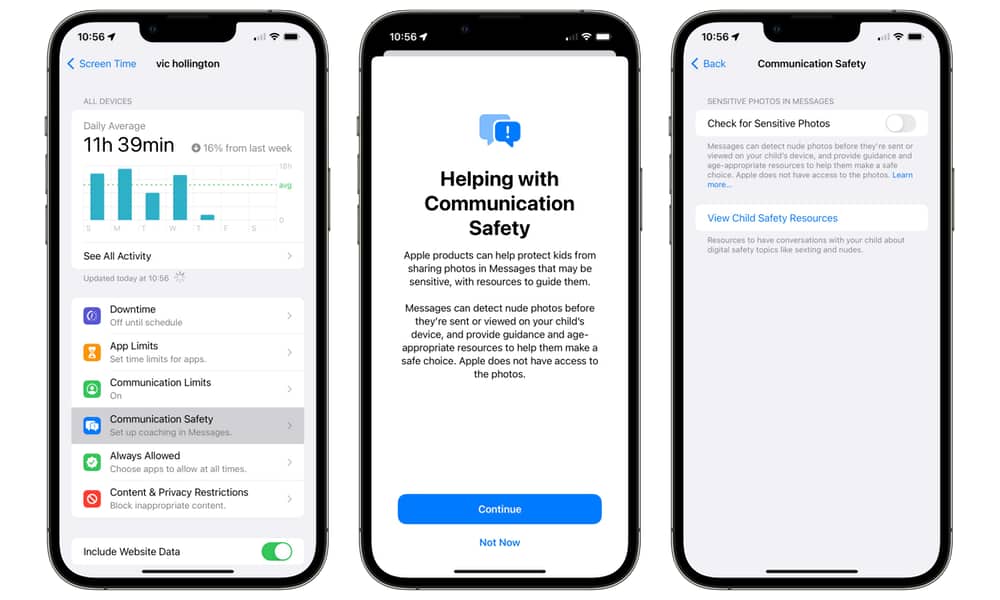

While Apple already includes the ability to block nudity in key apps like Messages, FaceTime, and even AirDrop, the feature isn't mandatory, and it doesn't act as a complete block. Parents have to specifically enable it for their kids, and while they can prevent kids from turning it off, the feature merely blurs nude photos and warns the user about them; it doesn't prevent kids from seeing (or sending) nude photos if they really want to.

When it was first announced in 2021, the proposed Communication Safety in Messages feature would have notified parents if a child under 13 chose to view or send a nude photo, but only after giving the child two warnings, the second of which expressly told them that their parents would be notified if they continued.

However, by the time the feature rolled out in iOS 15.2, those notifications had been removed as a result of concerns raised by child safety advocates that it could put children at risk from abusive parents who might not receive these notifications in an understanding or nurturing way.

The UK plan appears to go beyond anything Apple has done so far, insisting that Apple make these blocks mandatory and impossible for minors to bypass — and that it extend this across the entire operating system.

This would require Apple not only to monitor content in third-party apps but also, potentially, to implement a system-wide "kill switch" for the camera if nudity is detected without age verification.

“The Home Office wants to see operating systems that prevent any nudity being displayed on screen unless the user has verified they are an adult through methods such as biometric checks or official ID,” the Financial Times says, noting that the proposal also targets convicted child sex offenders, who “would be required to keep such blockers enabled.”

It’s unclear when or if the UK Home Office plans to make this mandatory; sources say officials have “decided against such an approach for now” in favor of simply encouraging companies to improve these controls voluntarily. Australia recently issued a similar policy encouraging companies to develop operating systems with settings for “detecting nudity and employing techniques such as blurring or warning message.”

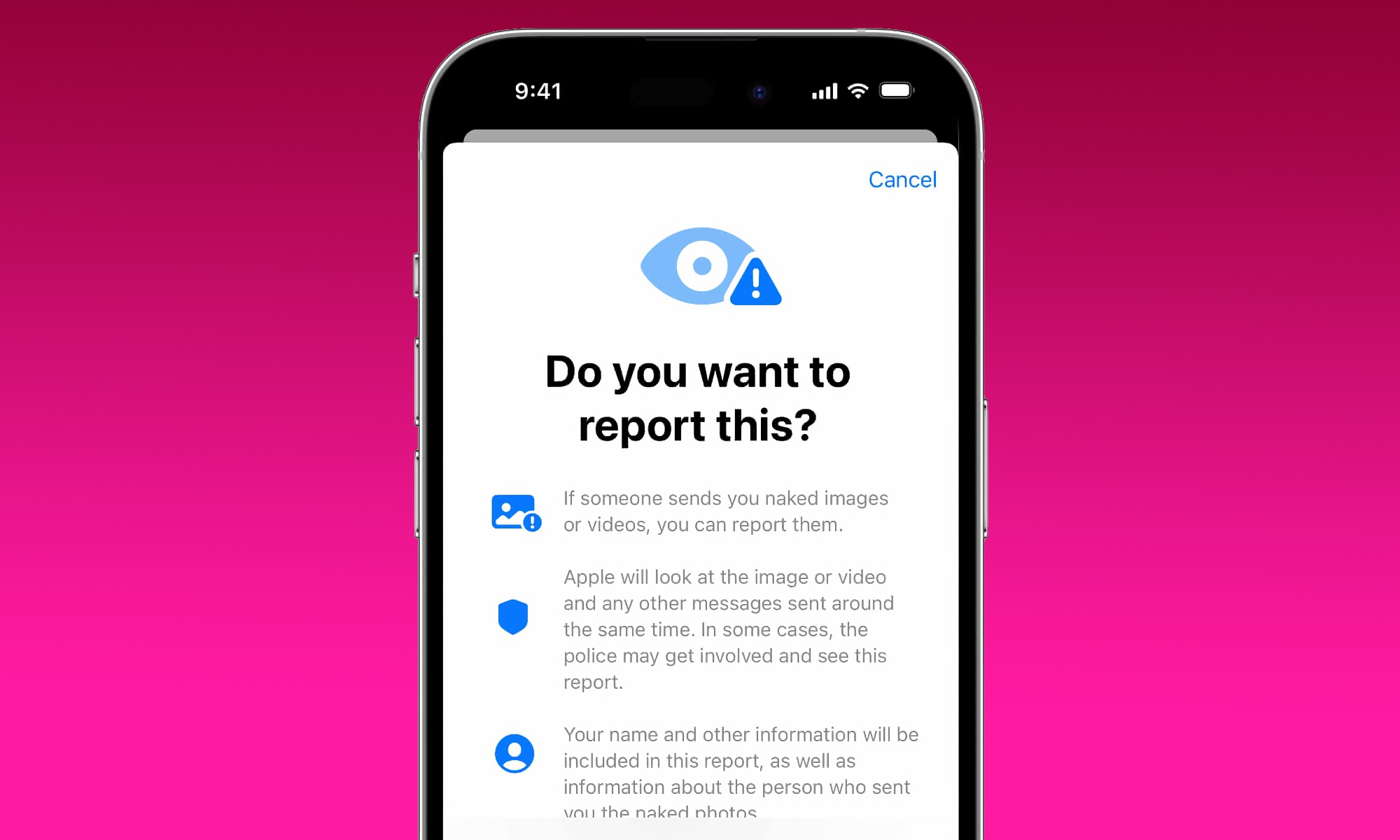

Apple is likely to make some improvements to align with the UK's wishes, as it did last year for new Australian safety laws by allowing children to report unsolicited nudes in iOS 18.2 and later. However, the iPhone maker has thus far strongly opposed laws that would force it to verify users' ages, likening them to a shopping mall requiring ID from everyone who enters just because one store inside happens to sell alcohol.

Apple has also walked a fine line over the past few years in its attempts to add child safety and protection features to its operating systems and devices. For example, Apple abandoned a controversial feature that would have securely scanned iCloud uploads for child sexual abuse material (CSAM) after privacy advocates pointed out that it could be the start of a slippery slope toward bulk surveillance: foreign regimes could replace the CSAM matching database with one designed to ferret out political dissidents. Yet for all the opposition Apple's CSAM detection plans drew from one side, the company also faces a $1.2 billion lawsuit from a group of child safety advocates and victims of abuse who felt the company should have proceeded with the plan.

The UK Home Office proposal is likely to meet a similar divide should it become a hard and fast rule for smartphone makers. Not only will it face challenges on the basis of privacy and civil liberties, but there are also questions about how effective it will be. Apple will likely be the first to suggest that the detection algorithms aren't precise enough to rule out false positives, resulting in a frustrating experience for younger users who may find legitimate photos blocked. Then again, as with the CSAM lawsuit, those on the other side are likely to argue that a bit of inconvenience is worth enduring if it means children will be protected from grooming by adults and exposure to pornography.

Ultimately, Apple's most significant objection is likely to come back to privacy. Its reason for resisting age verification laws is that it doesn't believe it should be required to collect scans of government ID from every iPhone user, and it will undoubtedly make the same case in the UK that it has tried (unsuccessfully) to make in states like Texas and Utah.

However, the scanning itself presents another potential privacy objection for Apple: performing on-device scanning of images within its own first-party apps is one thing, but complying with the UK's request to block nudity from being shown on screen by any app, in a way that protects user privacy, would likely require a significant re-architecting of iOS.