Apple Hit With $1.2B Lawsuit Over Dropping iCloud CSAM Scanning System


Apple is now facing a $1.2 billion lawsuit over its decision to drop plans for scanning photos stored in iCloud for child sexual abuse material (CSAM), according to a report from The New York Times.

Filed on Saturday in Northern California, the lawsuit seeks to represent a potential group of 2,680 victims. It alleges that Apple's decision to abandon the iCloud child safety tools it proposed three years ago has allowed abuse material to keep circulating, further harming victims.

The lead plaintiff, who filed the suit under a pseudonym, says she still receives notifications from law enforcement whenever someone is charged with possessing abusive images of her taken when she was an infant. In the lawsuit, she claims that by dropping its CSAM scanning plans, Apple has forced victims to relive their trauma repeatedly.

Apple spokesperson Fred Sainz responded to the lawsuit by emphasizing the company's commitment to fighting child exploitation. Sainz said Apple is "urgently and actively innovating to combat these crimes without compromising the security and privacy of all our users." Apple also pointed to existing features such as Communication Safety, which warns children about potentially inappropriate content, as evidence of its ongoing efforts to protect children.

In August 2021, Apple announced that iOS and iPadOS would feature new applications of cryptography designed to help limit the spread of CSAM online while protecting user privacy. A new CSAM detection algorithm, expected in an iOS 15 update, would compare hashes of user photos against a database of hashes of known CSAM from the US National Center for Missing and Exploited Children (NCMEC) before the photos were uploaded to iCloud. Positive matches would be stored securely and could not be read by Apple until a threshold number of matches was reached on a given account. Once an account crossed that threshold, the matching photos would be flagged for review by an Apple employee, who would confirm they were actual CSAM before notifying law enforcement.
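The match-then-threshold flow described above can be illustrated with a short Python sketch. This is a simplified model under stated assumptions, not Apple's implementation: Apple's design used a perceptual hash (NeuralHash) combined with private set intersection and threshold secret sharing, whereas this sketch uses exact SHA-256 digests and a plain set lookup. The names `image_digest`, `check_before_upload`, and `Account`, and the `MATCH_THRESHOLD` value, are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field

# Hypothetical threshold chosen for illustration only.
MATCH_THRESHOLD = 3


def image_digest(image_bytes: bytes) -> str:
    """Stand-in fingerprint: exact SHA-256 of the file bytes.

    Assumption: Apple's design used a perceptual hash so that resized or
    re-encoded copies still match; SHA-256 only matches identical bytes.
    """
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass
class Account:
    # Distinct matching digests seen so far for this account.
    matches: set = field(default_factory=set)
    # Set once the number of distinct matches reaches MATCH_THRESHOLD.
    flagged: bool = False


def check_before_upload(account: Account, image_bytes: bytes, known_digests: set) -> None:
    """Record a match if the image is in the known database; flag at the threshold."""
    digest = image_digest(image_bytes)
    if digest in known_digests:
        account.matches.add(digest)  # repeated uploads of one image count once
    if len(account.matches) >= MATCH_THRESHOLD:
        # In the proposed system, only past this point would matches be
        # readable and sent for human review.
        account.flagged = True


if __name__ == "__main__":
    known = {image_digest(b"known-1"), image_digest(b"known-2"), image_digest(b"known-3")}
    acct = Account()
    for img in [b"holiday-photo", b"known-1", b"known-2"]:
        check_before_upload(acct, img, known)
    print(acct.flagged)  # two matches, below the threshold
    check_before_upload(acct, b"known-3", known)
    print(acct.flagged)  # third distinct match reaches the threshold
```

Because `check_before_upload` only tests membership in whatever set it receives, swapping in a different database changes what gets flagged without touching the matching code, which is the concern critics later raised.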

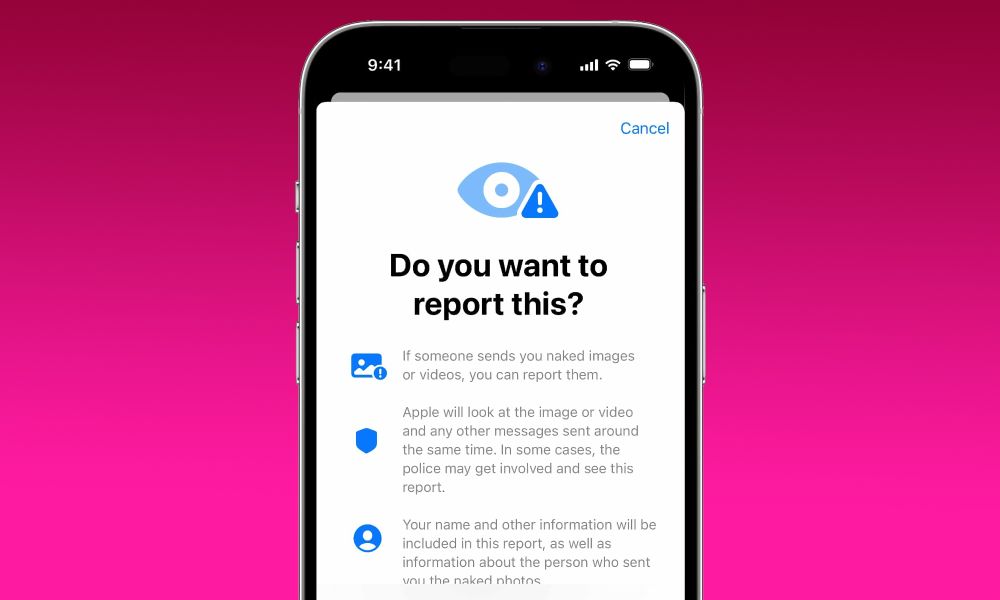

In addition to scanning for known CSAM in iCloud Photos, Apple also announced a new feature in the Messages app that would use machine learning to identify sexually explicit photos and warn children and their parents when such images were sent or received. Known as Communication Safety, this feature would be available only to child accounts set up in iCloud Family Sharing and would run entirely on-device.

When a sexually explicit image was detected, the photo would be blurred, and an alert would warn the child, point them to helpful resources, and reassure them that it was okay not to view the photo if they were uncomfortable. Children under 13 would also be told that, to ensure their safety, their parents would receive an alert if they viewed the image. Similar precautions would apply if the child attempted to send a sexually explicit image: the child would be warned before the photo was sent, and their parents would be notified if the child chose to send it anyway. Children aged 13 to 17 would receive the same warnings, but no parental notifications would be sent. In no case would notifications or other information be shared outside the family group.

Apple had initially announced the iCloud Photos CSAM detection feature would be included in an update to iOS 15 and iPadOS 15 by the end of 2021. However, the company delayed implementation of the feature due to “feedback from customers, advocacy groups, researchers, and others.”

Apple faced criticism from numerous individuals and groups, including policy groups, university researchers, the Electronic Frontier Foundation (EFF), security researchers, and politicians. An international coalition of more than 90 policy and rights groups also published an open letter to Apple, urging it to drop its plans to “build surveillance capabilities into iPhones, iPads, and other products.” The plans were also criticized internally by some Apple employees.

Although the initial rollout was planned only for the US and would rely on NCMEC's database of known CSAM, employees and human rights activists worried that oppressive regimes could force Apple to use the technology to detect other types of content, leading to censorship. However clever its design, Apple's algorithm simply matched images against whatever collection it was given; that collection could just as easily be a database of images of political dissidents as one of CSAM. Employees also worried that the plan would damage Apple's well-known reputation for protecting user privacy.

In the end, Apple proceeded with the less controversial Communication Safety in Messages but dropped its plans for CSAM scanning, explaining that scanning private iCloud storage would introduce security holes that bad actors could exploit. The company also worried that such a system would set a problematic precedent: once in place, there could be pressure to extend the detection methods to other types of content and to other encrypted services.

Communication Safety was also modified before its final release in response to concerns from domestic safety advocates that parental notifications could expose children to abuse by unsupportive parents. Parental notifications were removed entirely, regardless of the child's age; children under 13 are instead encouraged to seek help from a "trusted adult." In iOS 17, Apple expanded the feature by letting any user enable Sensitive Content Warnings, and the company is also reportedly working on letting children report unsolicited nude images they receive.