Apple’s Messages App Will Soon Tell Parents If Their Kids Receive Inappropriate Photos

Credit: Dedi Grigoroiu / Shutterstock


Alongside a controversial new policy that will soon have your iPhone scanning iCloud uploads for child abuse imagery, Apple announced two other initiatives geared toward keeping kids safe online, both of which are of more practical interest to families with young children.

While Apple’s CSAM Detection happens entirely in the background, and is therefore something that we hope you never actually become aware of, a new communication safety feature in Apple’s Messages app will keep an eye on younger children and pre-teens to protect them from sexually explicit images.

The feature will likely arrive later this year in a point release of iOS 15 and Apple’s other major OS updates. It will algorithmically scan incoming photos in Messages to identify ones that may be sensitive or harmful to children.

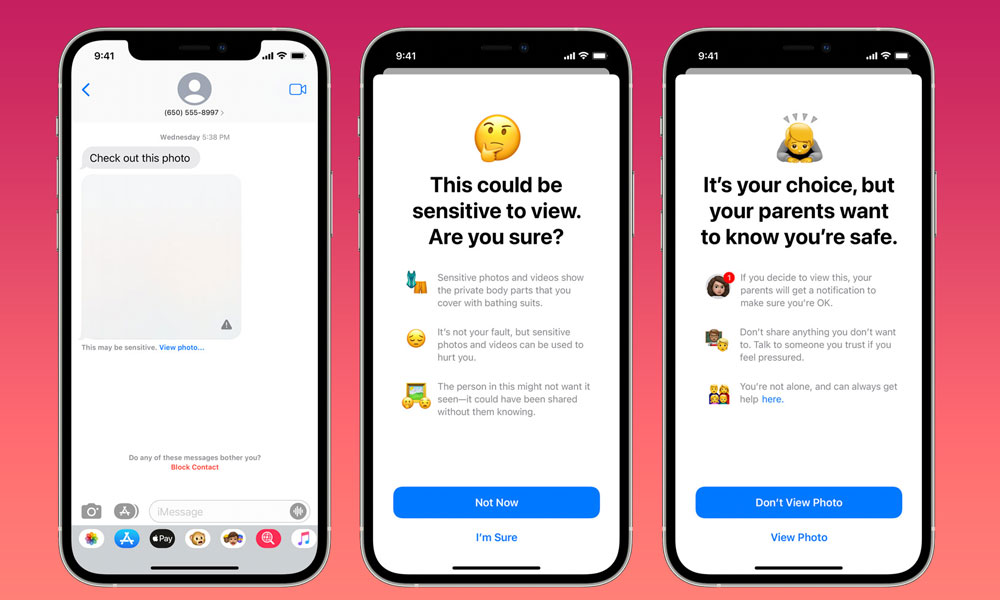

If a child receives a photo that’s flagged as such, it will be blurred out, and an age-appropriate warning will be shown, offering helpful resources and reassuring the youngster that it’s okay if they don’t want to look at the photo.

For example, the warning notes that “Sensitive photos and videos show the private body parts that you cover with bathing suits,” reassures the child that it’s not their fault if they received such a photo, and even points out that it could have been shared without the knowledge of the person pictured in it.

The child will still have the option of viewing the photo by tapping “I’m Sure,” but in this case, they’ll be presented with a second screen to let them know that if they do decide to proceed, their parents will be informed of this “to make sure you’re OK.”

It’s your choice, but your parents want to know you’re safe.

The screens also advise children not to share anything they don’t want to, encourage them to talk to someone they trust if they feel pressured, and let them know that they’re not alone, while providing a link to online resources if they need additional help or support.

A similar set of messages will also appear if a child attempts to send a sexually explicit photo or video. The child will be warned before the content is sent, and if they do decide to send it out, the parents will be notified.

Unlike Apple’s upcoming CSAM Detection feature, which will only compare images to a database of known child abuse imagery, the new Messages Communication Safety feature uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit.

In this case, that kind of analysis seems fair, since the goal is individual child safety rather than reporting people to law enforcement. Except for the parental notifications, which are also securely transmitted using iMessage’s standard end-to-end encryption, the child’s messages and shared content never leave their device.

For Families Only

While this feature has also sparked some similar controversy among privacy advocates, it’s important to keep in mind that Apple has designed this with a very narrow scope.

For one thing, Apple has not thrown the door open to on-device scanning of all content that comes through the Messages app. While one could argue that this could be the beginning of a slippery slope, the machine learning algorithms in iOS have already been scanning users’ photos for faces and objects for years, all directly on Apple’s A-series chips without involving cloud servers.

This is really no different, since everything remains on the device. There is no “reporting” here, apart from notifying parents about what their kids may be up to.

While there’s obviously the possibility that Apple could someday find a way to collect that information, it could have done the same thing when it introduced photo scanning in iOS 10 five years ago.

Further, Apple has made it clear that the Communication Safety feature is designed exclusively for families — it’s coming “in an update later this year to accounts set up as families in iCloud.”

As with other Family Sharing features in iCloud, such as Ask to Buy, this one applies only to children. The scanning will not occur at all for users over the age of 18, or even for younger users who are not part of an iCloud Family Sharing group.

Parents will also have to opt in before the feature is enabled. Once it is turned on, all users under the age of 18 will see sensitive photos blurred and receive warnings about sensitive content; however, parents will receive notifications only when the child is under the age of 13.

Communication Safety in Messages will be added in iOS 15, iPadOS 15, watchOS 8, and macOS Monterey later this year for users in the US.

Siri and Spotlight Searches

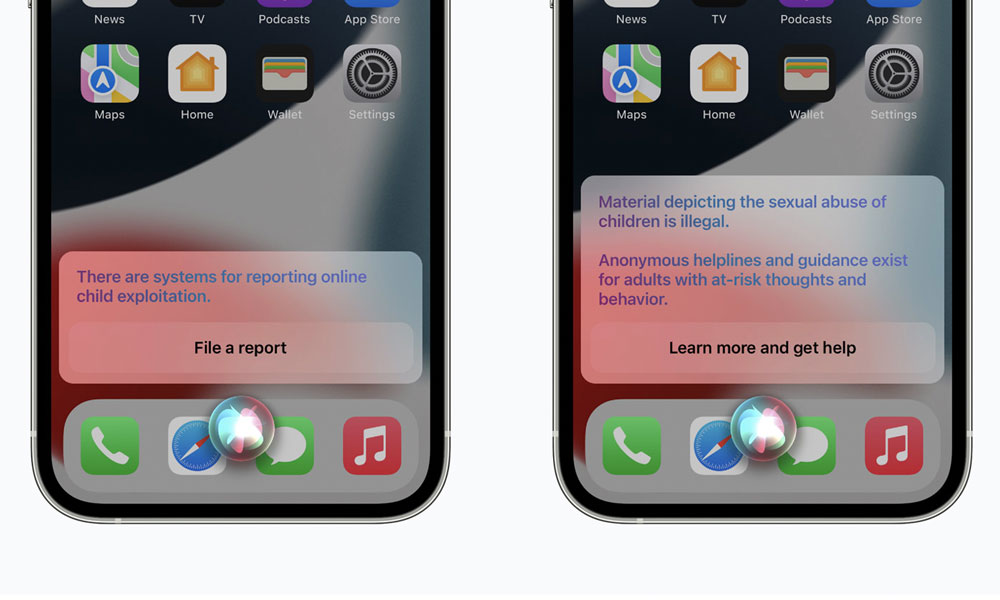

Apple is also adding resources to Siri and Search to help users find guidance on how to report child exploitation, and both will intervene when users search for things related to Child Sexual Abuse Material (CSAM).

For example, Apple notes that users who ask Siri how to report CSAM will be pointed to resources that explain where and how they can file a report.

Siri will also intervene when users actually search for CSAM. Naturally, it’s not going to report anybody to the authorities based on a search request, but it will remind users that “material depicting the sexual abuse of children is illegal,” while pointing them to anonymous helplines “for adults with at-risk thoughts and behaviour.”

These updates to Siri and Search will also arrive later this year in an update to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey, although, like the other child safety features, they will initially roll out only to users in the US.