Communication Safety in Messages

It appears that iOS 15.2 will indeed enable Apple’s new Communication Safety in Messages feature to help protect kids from being plagued by unwelcome and inappropriate images.

The feature appeared in an iOS 15.2 beta last month, but considering the controversy and confusion that’s surrounded Apple’s new child safety initiatives, we weren’t quite sure if Apple was going to actually release it to the public, or hold off for a bit longer.

However, since Communication Safety is the less controversial of the two proposed features, Apple clearly felt it was fine to move ahead with it. The company has also listened to feedback from various advocacy groups, making one important change to the feature to help further protect children.

As originally proposed, the Communication Safety feature would notify parents when a child under the age of thirteen chose to view or send an explicit photo. This is no longer the case, due to fears that these notifications could put children at risk of abusive behaviour. Instead, Communication Safety will focus on providing resources for kids who might be victims of predatory behaviour.

The feature in iOS 15.2 will still be entirely opt-in, so parents will have to enable it for their kids. It’s essentially part of Screen Time, along the same lines as the controls for blocking access to apps and websites.

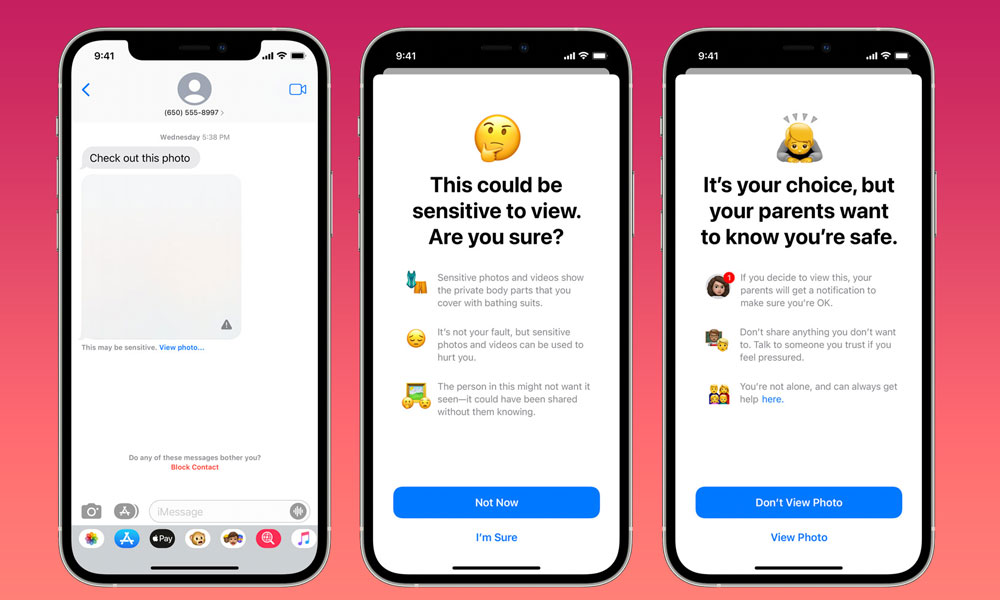

Once enabled, the iOS 15.2 Messages app will use on-device AI to scan images for nudity and blur any that it finds. Children who try to open one of these images will first see a warning telling them that it may be unsafe and asking them to confirm that they really want to look at it. The app will also offer guidance on how a child can seek help from a trusted adult if somebody is making them uncomfortable by sending them inappropriate pictures.