Apple Explains Why It Dropped Plan to Detect CSAM in Photos

Credit: Apple

This week, Apple provided a bit more information about why it pulled back on its much-criticized plans to scan iCloud Photos for Child Sexual Abuse Material (CSAM).

In a statement to Wired, Apple responded to a demand from the child safety group Heat Initiative that it “detect, report, and remove” CSAM from iCloud and offer more ways for users to report that type of content to the company:

“Child sexual abuse material is abhorrent and we are committed to breaking the chain of coercion and influence that makes children susceptible to it,” Erik Neuenschwander, Apple’s director of user privacy and child safety, wrote in the company’s response to Heat Initiative. He added, though, that after collaborating with an array of privacy and security researchers, digital rights groups, and child safety advocates, the company concluded that it could not proceed with development of a CSAM-scanning mechanism, even one built specifically to preserve privacy.

“Scanning every user’s privately stored iCloud data would create new threat vectors for data thieves to find and exploit,” Neuenschwander wrote. “It would also inject the potential for a slippery slope of unintended consequences. Scanning for one type of content, for instance, opens the door for bulk surveillance and could create a desire to search other encrypted messaging systems across content types.”

In August 2021, Apple announced that it would be adding features in iOS 15 and iPadOS 15 that would employ new methods of cryptography to help stop the spread of CSAM online while still respecting user privacy. Apple said it would internally review any flagged CSAM and notify law enforcement when actual CSAM collections were identified in iCloud Photos.

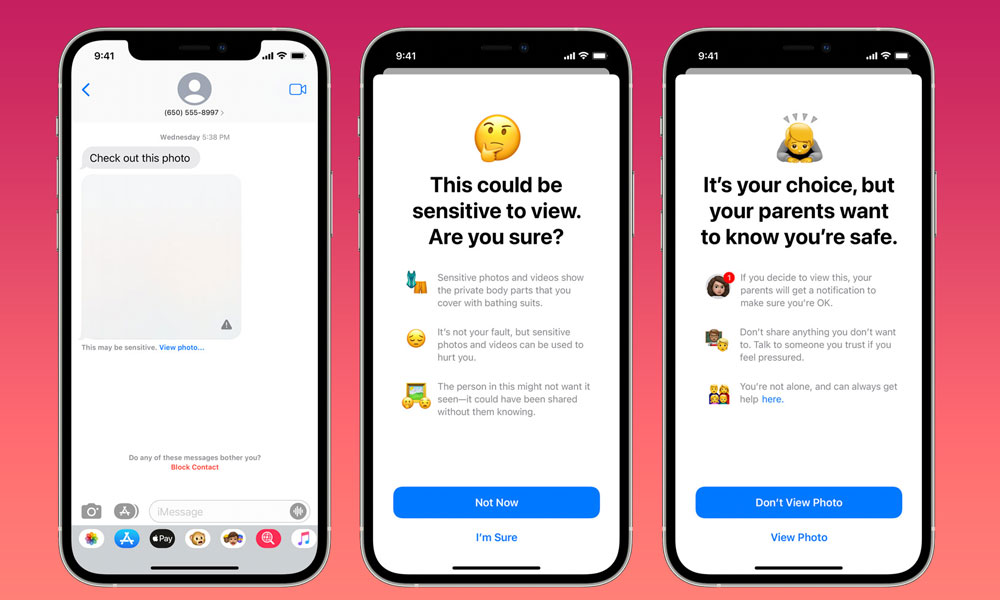

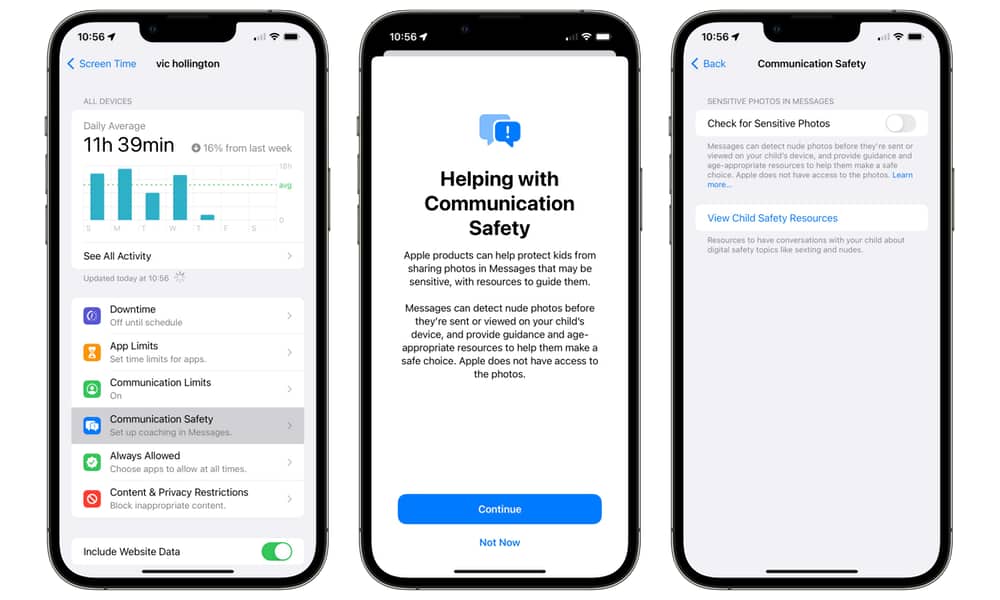

At the same time, Apple also announced a new Communication Safety feature in the Messages app that would warn children and their parents when sexually explicit photos were received or sent.

Explicit photos would be blurred, and the child would be shown a warning along with helpful resources. The child would also be reassured that it was okay not to view the photo, and children under 13 would be told that their parents would be notified if they chose to view it.

Similar protections would apply if the child attempted to send a sexually explicit photo: a warning would be shown before the photo was sent, and parents of children under 13 would be notified if the child chose to send it anyway.

Although Apple had initially expected to include the CSAM detection features in an update to iOS 15 and iPadOS 15, the Cupertino firm decided to postpone the new features, based on “feedback from customers, advocacy groups, researchers, and others.”

Apple did follow through on introducing the Communication Safety features in iOS 15.2, with one important change: it removed the parental notifications after child safety advocates warned that they could put children at risk from abusive parents. Children are still warned before viewing an explicit photo and offered guidance on how to get help from a trusted adult if they’re receiving photos that make them uncomfortable. Parents, however, are not notified, regardless of the child’s age, although they still need to enable the feature on their children’s devices.

Apple’s CSAM plans were criticized by a wide range of individuals and groups, including the Electronic Frontier Foundation (EFF), university researchers, security researchers, politicians, policy groups, and even some of Apple’s own employees. The backlash led Apple to quietly abandon the initiative without comment. Until now, that is.

Apple’s latest remarks come in the wake of recent moves by the United Kingdom government, which is considering whether to require tech firms to disable security and privacy features such as end-to-end encryption without informing their users. Apple has warned that it will stop providing certain services, including FaceTime and iMessage, to users in the UK if the legislation is passed.