Apple Shares Over a Dozen New Accessibility Features Coming in iOS 18

With Global Accessibility Awareness Day (GAAD) approaching, Apple is again providing us with an early peek at some of the enhancements coming in iOS 18 and iPadOS 18.

Although we’ll have to wait for next month’s Worldwide Developers Conference (WWDC) for a complete look at iOS 18, the timing of GAAD and Apple’s desire to embrace and promote its accessibility features means that we routinely get an early look into what Apple has in store for each year’s big iPhone and iPad software releases.

For example, Apple premiered Point and Speak, Live Speech, and Personal Voice last year. In 2022, we heard about Door Detection and Apple Watch Mirroring, among other things. In 2021, Apple announced AssistiveTouch for the Apple Watch, AI-based VoiceOver descriptions, ASL interpreters for communicating with AppleCare, Made for iPhone Hearing Aids, and support for third-party eye-tracking devices in iPadOS.

Now, Apple is building that last feature directly into its operating systems, allowing users to navigate their iPhones and iPads with only their eyeballs — without the need to add third-party hardware.

Eye Tracking for iPhone and iPad

As Apple explains in its newsroom announcement, Eye Tracking uses the front-facing camera and on-device machine learning to detect what you're looking at, while Dwell Control lets you activate on-screen elements and access functions like physical buttons, swipes, and other gestures. As usual, all the processing happens directly on the iPhone or iPad and is partitioned off from apps, so neither Apple nor any third-party developer gets to know what you're looking at.

The concept and the privacy aspects should be familiar to anybody who has looked at Apple's Vision Pro, since its entire user interface is driven by eye and hand gestures. However, because it's designed for users with physical disabilities, Eye Tracking on the iPhone and iPad takes this a big step further by relying entirely on where you're looking and for how long, with no hand interactions required.

Live Captions on Vision Pro

Speaking of Apple's spatial computing headset, Apple is also introducing systemwide Live Captions in a visionOS update, allowing users who are deaf or hard of hearing to see real-world conversations transcribed in their field of view.

In addition to conversations, Live Captions will also be available for audio apps, FaceTime, and Immersive Video experiences. When the feature is enabled, a captions overlay window will appear that can be repositioned as needed.

The Vision Pro will also gain support for Made for iPhone hearing devices and cochlear hearing processors, as well as vision accessibility improvements like Reduce Transparency, Smart Invert, and Dim Flashing Lights.

"Apple Vision Pro is without a doubt the most accessible technology I've ever used. As someone born without hands and unable to walk, I know the world was not designed with me in mind, so it's been incredible to see that visionOS just works. It's a testament to the power and importance of accessible and inclusive design."
Ryan Hudson-Peralta, product designer, accessibility consultant, and cofounder of Equal Accessibility LLC

Accessibility Comes to CarPlay

Apple is also releasing one of the biggest updates to the CarPlay experience in years, bringing longstanding iPhone features like Voice Control and Sound Recognition to the dashboard.

As it does on the iPhone, Voice Control will let you navigate the CarPlay interface and open and control apps with your voice — a feature useful not only for those with physical disabilities but also drivers who are habitually prone to distraction.

CarPlay will also get Color Filters to make the UI easier to use for folks who are colorblind and Sound Recognition to help provide visual notifications of car horns and sirens for drivers or passengers who are deaf or hard of hearing.

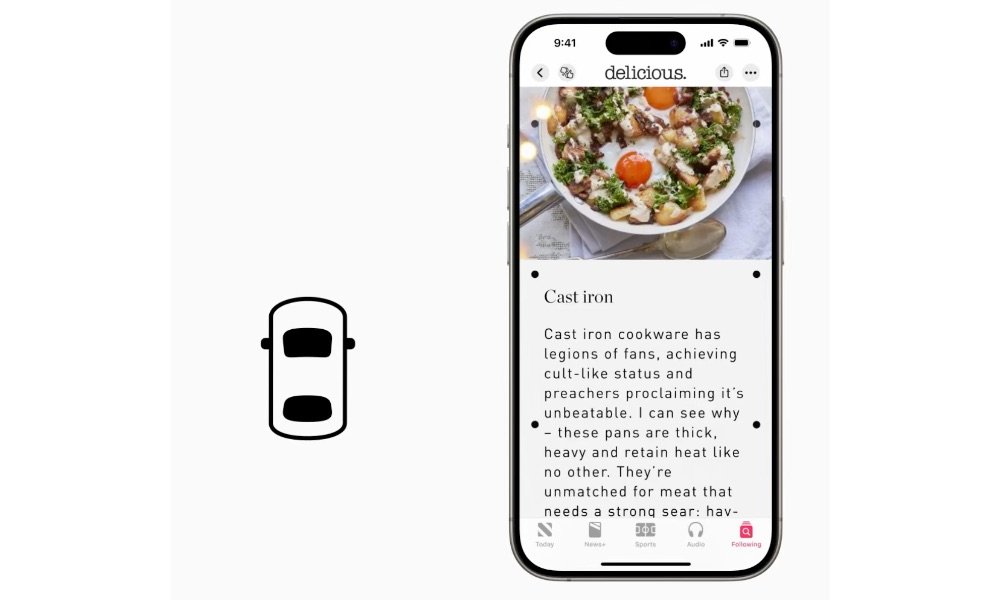

Vehicle Motion Cues

While it's not directly tied into CarPlay, iOS and iPadOS 18 will add Vehicle Motion Cues to the iPhone and iPad to help passengers who experience motion sickness while using their devices in a moving vehicle.

"Research shows that motion sickness is commonly caused by a sensory conflict between what a person sees and what they feel, which can prevent some users from comfortably using iPhone or iPad while riding in a moving vehicle."
Apple

When the feature is enabled, animated dots appear on the edges of the screen and move with changes in vehicle motion, providing sensory cues that help reduce motion sickness. Apple says Vehicle Motion Cues will recognize when you're in a moving vehicle and respond accordingly, without getting in the way when you're sitting still or merely walking down the street. There will also be an option in Control Center to toggle it on or off manually.

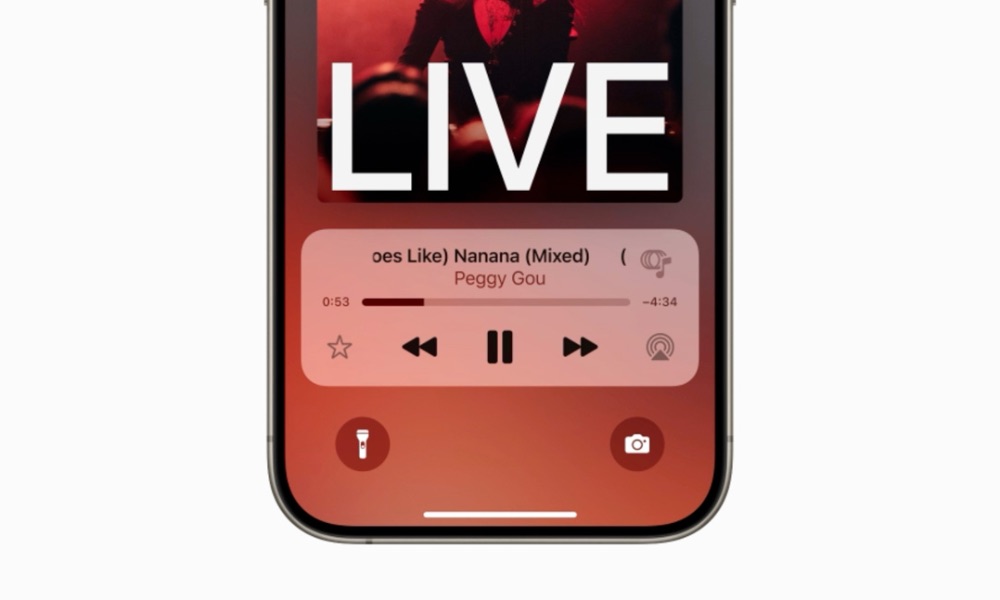

Music Haptics

Users who are deaf or hard of hearing will be able to experience Apple Music on the iPhone with a new accessibility feature that uses the Taptic Engine to let them “feel” the music.

"With this accessibility feature turned on, the Taptic Engine in iPhone plays taps, textures, and refined vibrations to the audio of the music."
Apple

Apple notes that Music Haptics will work "across millions of songs" on Apple Music, and the company is also releasing an API so developers can integrate it into third-party music apps.

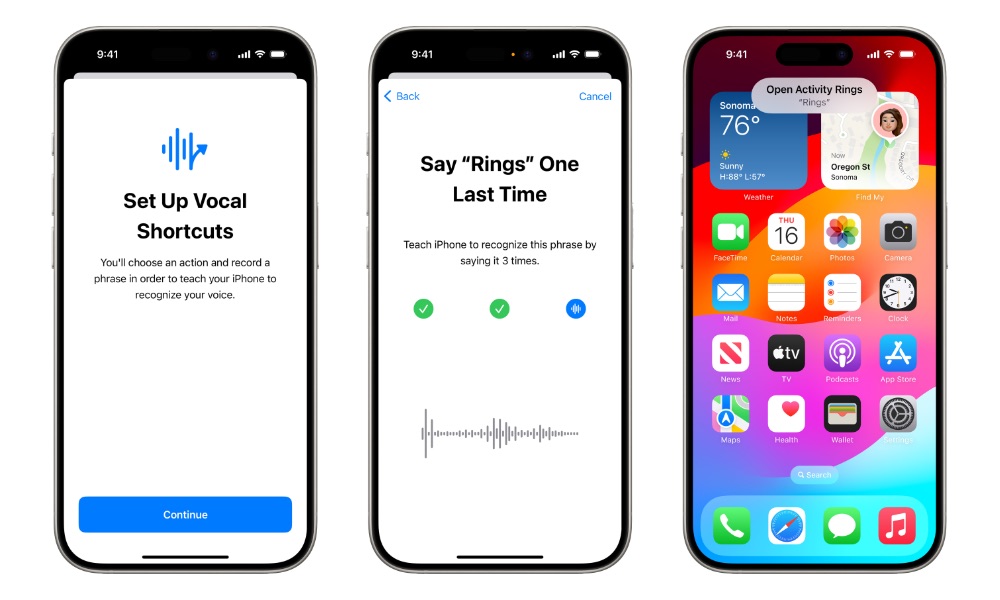

Vocal Shortcuts

While you can already customize Siri Shortcuts to respond to different phrases, it's an imperfect experience, especially for those with atypical speech. Your iPhone "learns" your voice for "Hey Siri," but for any other phrase, it's simply trying to piece together what you're saying and make sense of it.

However, iOS 18 will add Vocal Shortcuts that should improve this experience for those who rely more heavily on voice prompts to control their devices. iPhone and iPad users can “assign custom utterances,” effectively training Siri to launch apps and shortcuts and complete other complex tasks.

This is joined by a new Listen for Atypical Speech feature that enhances speech recognition using on-device machine learning to recognize a specific person’s speech patterns. Apple notes that this is designed for users with “acquired or progressive conditions that affect speech, such as cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke.”

"Artificial intelligence has the potential to improve speech recognition for millions of people with atypical speech, so we are thrilled that Apple is bringing these new accessibility features to consumers. The Speech Accessibility Project was designed as a broad-based, community-supported effort to help companies and universities make speech recognition more robust and effective, and Apple is among the accessibility advocates who made the Speech Accessibility Project possible."
Mark Hasegawa-Johnson, principal investigator at the Speech Accessibility Project at the Beckman Institute for Advanced Science and Technology at the University of Illinois Urbana-Champaign

More New Accessibility Features

In addition to these significant new features, Apple is also refining VoiceOver with new voices and more options, adding a Reader Mode to the Magnifier app, a Hover Typing feature to show larger and more readable text when typing, a Virtual Trackpad in AssistiveTouch, and several improvements for Braille users.

Further, Personal Voice is being expanded to Mandarin Chinese, and users who have difficulty speaking will be able to train it using shortened phrases. Voice Control will also gain support for custom vocabularies and complex words. Switch Control will let people use the iPhone or iPad camera to recognize finger tap gestures as switches, eliminating the need to purchase additional hardware.

Celebrating Global Accessibility Awareness Day

As in past years, Apple is hosting a series of events and releasing new content to celebrate Global Accessibility Awareness Day.

Select Apple Store locations will be hosting free sessions to help customers "explore and discover accessibility features built into the products they love," while the App Store, Apple TV app, and Apple Books will feature apps, games, shows, and books that "promote access and inclusion for all" and invite viewers into stories that let them share in the experiences of people with disabilities.

You also don’t need to wait for all of these accessibility features; Apple has shared a Calming Sounds shortcut that you can add to your iPhone now to “play ambient soundscapes [that] minimize distractions” and help you focus or rest.