Apple Provides an Early Sneak Peek into Some Coming iOS 17 Enhancements

Credit: Apple

With Apple’s Worldwide Developers Conference (WWDC) set to kick off in less than three weeks, we won’t have long to wait to find out what’s coming in iOS 17, Apple’s next major iPhone software release. However, Apple is giving us an early treat in the form of a sneak peek at some of the cool new accessibility features that iOS 17 will bring to the table.

There’s a purpose in Apple offering up this advance peek at some of iOS 17’s features. This Thursday, May 18, will mark Global Accessibility Awareness Day (GAAD), an occasion intended to “get everyone talking, thinking and learning about digital access and inclusion, and the more than One Billion people with disabilities/impairments.”

Hence, Apple is adding its voice to the chorus, sharing the efforts it’s making to facilitate inclusive access to its devices. It’s not the first time, either; Apple pre-announced a huge list of impressive new accessibility features for iOS 15 on the same occasion two years ago and then did the same for iOS 16 last year.

This time around, Apple says we can expect another list of powerful accessibility enhancements in iOS 17, plus a few other initiatives to promote accessibility within Apple’s services.

‘Making Products for Everyone’

According to Apple’s newsroom announcement, the new accessibility features cover a wide range of needs, including cognitive, vision, hearing, and mobility accessibility, as well as the needs of nonspeaking individuals.

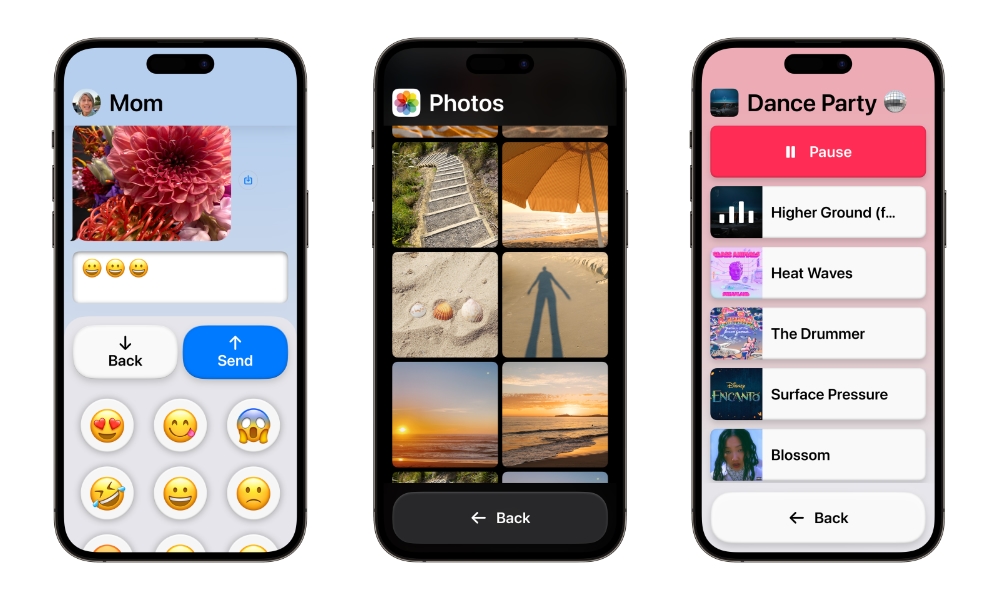

This includes Assistive Access to provide clearer user interfaces for those with cognitive disabilities, Live Speech to allow nonspeaking individuals to type to speak during conversations, Personal Voice for those at risk of losing their ability to speak, and Point and Speak in Magnifier to help folks with vision disabilities interact with physical objects that have multiple text labels.

Apple notes that the new collection of accessibility features builds on “advances in hardware and software, including on-device machine learning to ensure user privacy,” while using multiple features of the iPhone in tandem.

For instance, the Point and Speak feature uses the Camera app, LiDAR Scanner, and the power of Apple’s A-series Neural Engine to help users interact with devices like a microwave that may have multiple buttons and labels. As the person moves their finger across the keypad, their iPhone can identify which button their finger is pointing to and announce the text on that button. This will be built into the existing Magnifier app and can be used alongside the People Detection, Door Detection, and Image Descriptions accessibility features that Apple has added over the past few years.

Designed for folks with cognitive difficulties, Assistive Access will “distill apps and experiences to their essential features in order to lighten cognitive load.” This mode presents a simplified user interface, designed with feedback from iPhone users with cognitive disabilities and their trusted supporters, that focuses on core iPhone features like “connecting with loved ones, capturing and enjoying photos, and listening to music.”

In one of the more powerful applications of machine learning, Apple is also adding the ability for users to create an AI model of their own voice. Designed for those at risk of losing their ability to speak, Personal Voice records about 15 minutes of guided audio from a person and uses that to build a voice that sounds like them. This can then be used to carry on text-to-speech conversations in what is effectively their own voice rather than a synthesized one.

Since this is fundamentally an accessibility feature to help those who may eventually lose their ability to speak, it’s not likely we’ll see it applied in other ways — don’t expect Siri to start talking to you in your own voice. Instead, the user’s personal voice will form part of the Live Speech accessibility feature, which allows a person to type during a phone call, video chat, or in-person conversation and have their text spoken aloud to the other person. It’s not hard to imagine how the ability to have those messages spoken in their own voice would be an amazing feature for those who may someday be unable to speak on their own.

“At the end of the day, the most important thing is being able to communicate with friends and family,” said Philip Green, board member and ALS advocate at the Team Gleason nonprofit, who has experienced significant changes to his voice since receiving his ALS diagnosis in 2018. “If you can tell them you love them, in a voice that sounds like you, it makes all the difference in the world — and being able to create your synthetic voice on your iPhone in just 15 minutes is extraordinary.”

Additionally, Apple notes that it will soon be possible for Made for iPhone hearing devices to be paired directly to a Mac and customized in the same way they can be when using an iPhone or iPad. This will presumably arrive in macOS 14. Several other smaller accessibility improvements are coming as well, including phonetic suggestions for text editing in Voice Control, using Switch Control to create virtual game controllers for those with physical and motor disabilities, adjusting text size across Mac apps for users with low vision, and pausing images with moving elements for those who are sensitive to rapid animations.

Celebrating Global Accessibility Awareness Day

Beyond what’s coming in iOS 17 et al., Apple is also introducing several new initiatives across its services to commemorate Global Accessibility Awareness Day.

Apple’s SignTime customer assistance feature is expanding to Germany, Italy, Spain, and South Korea on May 18 to connect Apple customers with on-demand sign language interpreters. The feature is already available in the US, Canada, UK, France, Australia, and Japan.

Apple will also be offering sessions at several Apple Store locations around the world to help inform and educate customers on accessibility features. Apple Carnegie Library in Washington, DC, will also hold a Today at Apple session featuring sign language performer and interpreter Justina Miles.

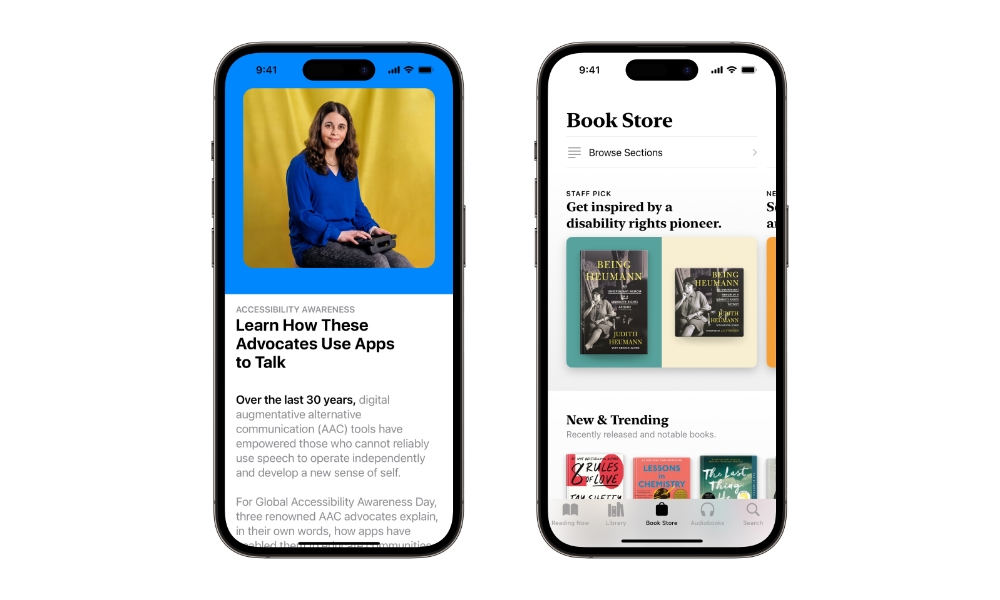

New accessibility-themed podcasts, shows, books and even workouts are coming to Apple’s services this week as well, including Being Heumann: An Unrepentant Memoir of a Disability Rights Activist in Apple Books, “movies and series curated by notable storytellers from the disability community” in the Apple TV app, and “cross-genre American Sign Language (ASL) music videos” in Apple Music. Trainer Jamie-Ray Hartshorne will also be incorporating ASL and highlighting useful accessibility features during Apple Fitness+ workouts this week, and the App Store will spotlight three disability community leaders who will share their experiences as nonspeaking individuals and the transformative effects that augmentative and alternative communication (AAC) apps have had in their lives.