Curbing Explicit Photos | iOS 15.2 Beta Adds iMessage ‘Communication Safety’ for Kids

Credit: Apple

Despite announcing a delay in its plans for several new child safety initiatives, Apple appears to be forging ahead with at least one of them, albeit with a significant modification to the original plan.

Today the company released the second beta of iOS 15.2 to developers, and while the release notes haven’t yet been updated, the latest beta does, in fact, include the new Communication Safety feature in Messages that was found in the code of the first iOS 15.2 beta.

To be clear, there’s no evidence that Apple has any plans to roll out the much more controversial CSAM Detection feature that it announced in August. This latest beta only includes the more benign Communication Safety feature, designed to give parents the option of filtering inappropriate content from their kids’ Messages apps.

Unfortunately, Apple chose to announce both of the new initiatives at the same time, resulting in a great deal of misunderstanding about how the two features worked. Many conflated CSAM Detection, which was designed to scan photos uploaded to iCloud Photos for known child sexual abuse materials, with Communication Safety, an opt-in feature for families that would help filter out sexually explicit material.

Although CSAM Detection will notify Apple if photos depicting child sexual abuse are uploaded to iCloud Photos, it only does direct comparisons of a user’s photos against a known and vetted database of CSAM images — it does not use machine learning to analyze photo content, so a picture of your toddler is not going to set it off. Further, it has nothing at all to do with Messages.
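To make the distinction concrete, here is a minimal Python sketch of what "comparing against a known database" means, as opposed to analyzing what a photo depicts. Everything in it is an assumption for illustration: the `KNOWN_DIGESTS` set, the `matches_known_database` function, and the use of SHA-256 as a stand-in fingerprint are hypothetical, and this is not Apple's actual matching scheme.

```python
import hashlib
from typing import AbstractSet

# Hypothetical stand-in for a vetted database: fingerprints of known images.
KNOWN_DIGESTS = frozenset({hashlib.sha256(b"known-image-bytes").hexdigest()})


def matches_known_database(photo_bytes: bytes, known_digests: AbstractSet[str]) -> bool:
    """Return True only if this photo's fingerprint is already in the database.

    Nothing here looks at what the photo depicts; it is a pure lookup.
    SHA-256 is an illustrative assumption, not the real fingerprinting method.
    """
    return hashlib.sha256(photo_bytes).hexdigest() in known_digests
```

A brand-new photo, whatever it shows, has no entry in the database and therefore produces no match in this sketch.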

However, CSAM Detection remains off the table for now. While Apple hasn’t cancelled the plan outright, there’s no evidence that it’s going to arrive any time soon.

Communication Safety, on the other hand, does apply to Messages, and does use machine learning to identify sexually explicit photos. Not just CSAM, in this case, but any photos that may show things like nudity or inappropriate body parts. However, it only applies to messages sent and received by children under the age of 18 who are in a Family Sharing group — and the parents must explicitly opt in to enable the feature.

How Communication Safety Works

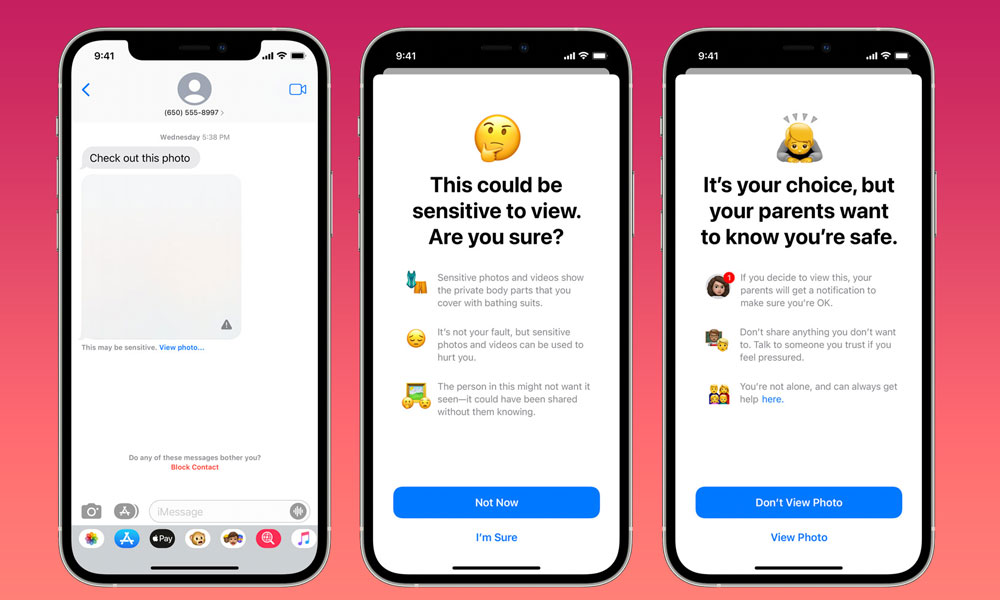

Once enabled, Communication Safety will scan any photos sent or received by a child or teen to determine if they contain sexually explicit content. If this is determined to be the case, then the photo gets blurred out with a warning letting the user know that they probably shouldn’t be looking at it or sending it.

- Family members between the ages of 13 and 17 can proceed to bypass this and look at or send the photo anyway. In this case, they simply have to note the warning, and then choose to override it. No notifications will be sent to parents for adolescents in this case.

- As originally proposed, the Communication Safety feature behaved differently for children under the age of 13. In this case, the same initial process would be followed, except that if the child chose to view the photo despite the warning, they would receive a second warning advising them that their parents would be notified if (and only if) they chose to proceed.

The actual implementation in iOS 15.2 makes a significant change to this, however. Due to suggestions that the notification could create problems for children at risk of parental abuse, Apple has removed parental notifications from Communication Safety.

Instead, sexually explicit material sent or received by children under age 13 will be treated in the same way as it is for teens — they will be warned about the photos with guidance on how they can get help from a trusted adult if they’re receiving photos that are making them uncomfortable.
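The flow described above can be summarized in a short Python sketch. This is only an illustration of the behavior as reported here, not Apple's code or API: the `Account` class, the `feature_applies` and `handle_photo` functions, and the `original_proposal` flag are all hypothetical names, and the on-device machine learning result is simply passed in as the boolean `looks_explicit`.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical model of a Family Sharing member."""
    age: int
    in_family_sharing: bool
    parent_opted_in: bool


def feature_applies(account: Account) -> bool:
    # Only under-18 accounts in a Family Sharing group whose parents opted in.
    return account.age < 18 and account.in_family_sharing and account.parent_opted_in


def handle_photo(account: Account, looks_explicit: bool, proceeds_anyway: bool,
                 original_proposal: bool = False) -> dict:
    """Return what happens to a photo sent or received in Messages.

    looks_explicit: assumed output of the on-device classifier.
    proceeds_anyway: whether the user overrides the warning.
    original_proposal: True models the plan as first announced;
    False (default) models the behavior reported for the iOS 15.2 beta.
    """
    if not feature_applies(account) or not looks_explicit:
        return {"blurred": False, "shown": True, "parent_notified": False}

    # Originally, only under-13s who chose to proceed would trigger a notice.
    parent_notified = original_proposal and account.age < 13 and proceeds_anyway
    return {"blurred": True, "shown": proceeds_anyway, "parent_notified": parent_notified}
```

For example, `handle_photo(Account(10, True, True), True, True)` reports a blurred photo that the child chose to view with no parental notification, while passing `original_proposal=True` for the same call would set `parent_notified` to `True`.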

Under no circumstances will the Communication Safety feature send any notifications to Apple or anybody else. The scanning for sexually explicit photos is done entirely on the device, and none of the data ever leaves the user’s iPhone. This is strictly a way to protect young children from sending or receiving pictures that they really shouldn’t be.

Understandably, when Apple announced Communication Safety and CSAM Detection together, this led many to assume the worst — that Apple would be using machine learning to flag any photo on their iPhone that looked even a bit suspicious and report it to law enforcement.

Even Apple’s software chief, Craig Federighi, candidly admitted that Apple messed up in how it communicated these features.

I grant you, in hindsight, introducing these two features at the same time was a recipe for this kind of confusion. It’s really clear a lot of messages got jumbled up pretty badly. I do believe the soundbite that got out early was, ‘oh my god, Apple is scanning my phone for images.’ This is not what is happening.

Craig Federighi, Apple Senior VP of Software Engineering

Amid the controversy and negative publicity, Apple announced it was delaying its plans to “take additional time over the coming months to collect input and make improvements.” In its statement at the time, Apple referred to “features intended to help protect children from predators who use communication tools to recruit and exploit them,” which appears to be a reference to Communication Safety, and “limit the spread of Child Sexual Abuse Material,” which is the CSAM Detection feature.

This suggested both were being delayed at the time. However, from Apple’s initial announcement, it sounded like they were never slated for iOS 15.0 in the first place. In fact, iOS 15.2 seems like the time when they would have likely landed either way.

Based on the changes made in Communication Safety, it appears that Apple has listened to feedback from at least some advocacy groups, which raised concerns that the parental notifications could end up creating an unsafe environment for young children. While the feature as originally proposed did make it clear that a parental notification would be sent and gave the youngster an opportunity to avoid this, Apple evidently decided it was better not to take the risk of triggering a potentially abusive parent.

Ultimately, it’s still not a sure thing that this feature will arrive in the final iOS 15.2 release. It’s not at all uncommon for Apple to add features in iOS betas that get nixed before the public release, so it’s possible the company may just be testing the waters here to see what the reaction is.