Apple Delays Plans to Implement New Child Safety Features

Credit: Apple

Following massive pushback from privacy advocates on its controversial CSAM Detection feature, it appears that Apple is now taking a step back to reevaluate how best to implement these features and address some of the concerns that various groups have raised.

As a result, Apple has announced that it’s delaying the rollout of its new child safety features, including not only the aforementioned CSAM Detection but also the Communication Safety features that will help protect younger children from sexually explicit photos in the Messages app.

While the CSAM Detection feature was the more alarming of the two, Apple was also forced to admit that its choice to announce them together added confusion, leading many to conflate the two features and believe that there was more going on than was actually true.

Nonetheless, even aside from public fears, legitimate privacy advocates and security researchers expressed valid concerns with some of the underlying technology. They suggest it could create a slippery slope for abuse by foreign governments and other agencies, who could conceivably extend the system to scan for more than just CSAM.

While we firmly believe that Apple’s new features are well-intentioned — and most privacy groups seem to agree — it’s worth remembering the old saying that “the road to hell is paved with good intentions,” and this is what most of the concerns are based on.

In light of that, it’s also fair to say that Apple was more than a bit naive in the way that it presented these new features. Since Apple’s executives surely have only the noblest of intentions here, they assumed that the public would take them at face value. It was the same mistake Apple made with the ‘Batterygate’ fiasco a few years ago.

However, even though Apple may not always be good at reading the room initially, it knows when it’s time to pause and take a breath. As a result, it’s officially announced that it will be delaying the new child safety features.

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

Since these features were already slated for a point release sometime after iOS 15.0 launches next month, it’s hard to say exactly what this means. Unfortunately, Apple never said exactly when the features were set to arrive in the first place, and the new announcement doesn’t really add anything other than to say that they’re coming later than originally planned.

Child Safety Features

For those who still aren’t quite up to speed on what Apple was originally planning to do, there were three features that the company announced last month under the heading of “Expanded Protections for Children.”

- CSAM Detection, where “CSAM” stands for “Child Sexual Abuse Material,” would have scanned photos on your device before uploading them to iCloud Photo Library to determine if they matched any images from a database of known CSAM provided by the National Center for Missing & Exploited Children (NCMEC). Matched images would only become available for review once an account reached a certain threshold of matches. Once this happened, they’d be reviewed by humans at Apple to prevent the potential for false reports. This feature did not analyze or classify what a photo depicted; it simply matched images against those already known to be CSAM.

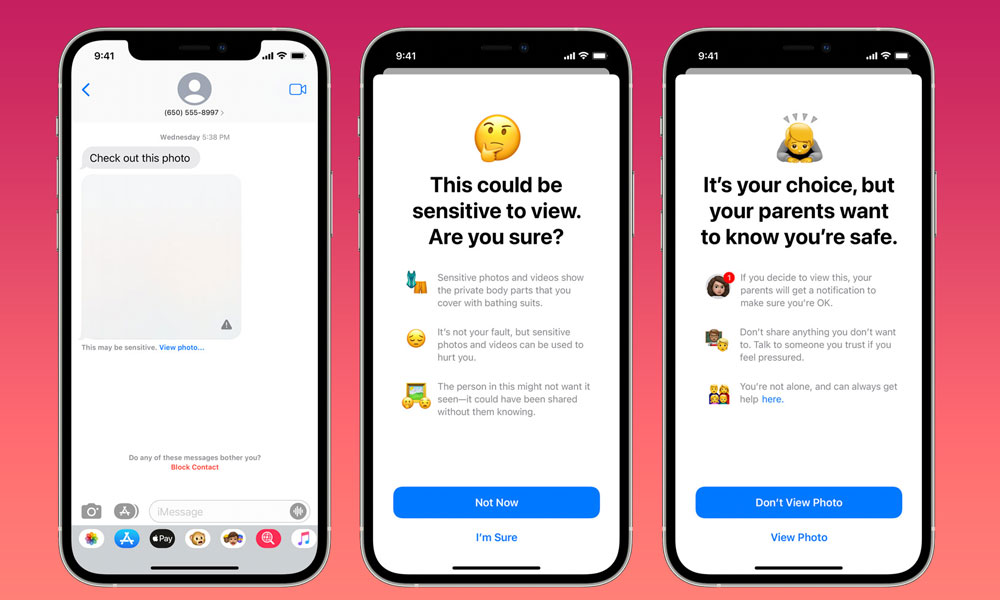

- Communication Safety in Messages was a completely separate feature intended to help protect children from sexually explicit images. This feature was to use machine learning to attempt to identify when a sexually explicit photo was sent or received by someone under the age of 18. The photo would be blurred out by default, with age-appropriate warnings shown. If a child under the age of 13 attempted to view the photo despite these warnings, their parents would be notified. This feature would only have been available for children under the age of 18 in a Family Sharing group and would have needed to be enabled by the parents. No information was ever to be shared with Apple.

- Additional Resources in Siri and Search was the most innocuous of the three initiatives, since it focused entirely on adding new guidance on how to report child exploitation and on intervening if users actually attempted to use Siri or Spotlight to search for CSAM. It was not intended to report any of this information to Apple but merely to remind users that they could be searching for illegal material.

While the third feature is uncontroversial, it’s understandable how mixing the first two in a single announcement led to some confusion. For instance, many believed that CSAM Detection was occurring in Messages, when in fact, it was only happening in the Photos app, and only for those photos being uploaded to iCloud Photo Library.

In fact, contrary to some fears that Apple’s new features would be scanning everything on users’ devices, photos weren’t to be scanned for CSAM at all unless they were being uploaded to iCloud. This means that turning iCloud Photo Library off would also disable the CSAM Detection system entirely.
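To make the matching idea more concrete, here is a rough sketch of threshold-based matching against a database of known images. It is not Apple’s implementation: the names `image_hash`, `count_matches`, `needs_human_review`, and the `REVIEW_THRESHOLD` value are all hypothetical, and SHA-256 is used only as a stand-in that matches byte-identical files, which is a simplification of whatever image matching Apple actually used.

```python
import hashlib

# Hypothetical value; the article only says "a certain threshold".
REVIEW_THRESHOLD = 3


def image_hash(data: bytes) -> str:
    """Stand-in fingerprint. SHA-256 only matches byte-identical files."""
    return hashlib.sha256(data).hexdigest()


def count_matches(photos, known_hashes, icloud_photos_enabled):
    """Count photos queued for upload whose hash is in the known database."""
    if not icloud_photos_enabled:
        # Nothing is being uploaded, so nothing is checked at all.
        return 0
    return sum(1 for photo in photos if image_hash(photo) in known_hashes)


def needs_human_review(match_count, threshold=REVIEW_THRESHOLD):
    """An account is surfaced for review only at or above the threshold."""
    return match_count >= threshold


# Toy data: the "database" is just a set of hashes of known images.
known = {image_hash(b"known-image-%d" % i) for i in range(5)}
library = [b"holiday", b"known-image-0", b"known-image-1", b"cat", b"known-image-4"]

matches_on = count_matches(library, known, icloud_photos_enabled=True)
matches_off = count_matches(library, known, icloud_photos_enabled=False)

print(matches_on, needs_human_review(matches_on))    # 3 True
print(matches_off, needs_human_review(matches_off))  # 0 False
```

Note that nothing in this sketch looks at what a photo depicts. It only asks whether a fingerprint appears in the supplied set, and with uploads turned off no comparison happens at all.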

Even in the midst of the hyperbole, however, there were legitimate security concerns raised by researchers and privacy advocates, from Edward Snowden to the Electronic Frontier Foundation, who felt that Apple might have been opening a Pandora’s Box with CSAM Detection.

In a nutshell, the idea was that Apple was building a system that, while designed with purely good intentions, could still be used for evil.

The most critical factor is that the CSAM Detection is essentially content-agnostic in terms of what it searches for. Since it’s looking for photos that match a database of images, the database of CSAM could theoretically be swapped out for a database of any other set of images, such as those of political dissidents.

To be clear, these would still have to be known images — nothing in Apple’s CSAM Detection system is designed to analyze image content — but it’s still not uncommon for many protestors and other activists to download and share memes and other key photos among themselves.

For Apple’s part, it insisted that it would not allow this to happen, sharing that the CSAM database would be developed by two agencies from independent sovereign governments that focus on child exploitation and that the content would be regularly audited to make sure it was limited to CSAM.

Further, a human review process within Apple would also prevent anything apart from CSAM from being reported, since presumably, even if a shadowy government agency slipped some non-CSAM images into the database, Apple employees would basically ignore these as false positives.

Still, some privacy groups have raised concerns about what Apple could be compelled to do within certain countries, particularly China, where it may have no choice but to obey draconian surveillance laws if it wants to continue operating its vast supply chain within the country.

Apple walks a fine and dangerous line in these countries, and it’s not exactly news that the company has complied with censorship requirements on several occasions in the past. However, there’s still a considerable gap between removing content deemed “illegal” and actively participating in surveillance operations.

Either way, it seems Apple realizes that it will have to do some more work to make sure that these concerns are addressed to everyone’s satisfaction. As was the case with Apple’s release of AirTags, it doesn’t seem that Apple did enough consultation in developing the CSAM feature, so it’s going to take some time to collect the necessary input and hopefully come up with a more palatable solution — and a better way of proactively communicating what it’s trying to accomplish and how it’s going to work.