Apple May Bring an AI Transcription Assistant to iOS 18

Credit: Krakenimages.com / Shutterstock

It’s almost a given that Apple will unveil some exciting new AI features for the iPhone and iPad when iOS 18 and iPadOS 18 debut at next month’s Worldwide Developers Conference (WWDC).

However, even though we’ve been hearing about Apple’s AI ambitions for several months now, those have been mostly in broader terms, and it’s been hard to separate the facts from the speculation to nail down precisely what Apple has planned.

That’s partly because Apple holds its cards very close to its vest, but it’s also likely that it’s taken some time for the company to figure out what it can actually pull off at this stage. Generative AI covers a lot of ground, from creating and editing photos to summarizing notes and powering conversational voice assistants.

For example, it’s a safe bet that we’ll see some improvements to Siri, if only because the voice assistant has fallen behind. However, there’s also been evidence that Apple has been working behind the scenes to build a better Siri since at least 2019, shortly after it hired Google’s former head of AI to lead things. If things have been quiet on the Siri front, that’s only because Apple is playing the long game.

There’s also the matter of how much can be done on-device using Apple’s powerful A-series and M-series chips. That’s how Apple prefers to do things, both for privacy reasons and to show off the power of its silicon, but it’s undoubtedly going to need to rely on server-side processing for more complex tasks, and it’s reportedly already building M2 Ultra-powered AI servers to handle the demands.

AI Transcription

Significant improvements to Siri will undoubtedly be welcome, but the good news is that Apple appears to have more up its sleeve. A new report from AppleInsider cites sources who have revealed that Apple is working on a way to deliver real-time audio transcription and summaries as a core system service, much the same way Siri Dictation works now.

“People familiar with the matter have told us that Apple has been working on AI-powered summarization and greatly enhanced audio transcription for several of its next-gen operating systems. The new features are expected to enable significant improvements in efficiency for users of its staple Notes, Voice Memos, and other apps.”

Marko Zivkovic, Associate Editor for AppleInsider

An updated version of the Voice Memos app reportedly being tested by Apple can show a running transcript of audio recordings, which appears in place of the graphic waveform shown in the iOS 17 version of the app.

The live audio transcripts are also expected to come to Apple Notes, which AppleInsider reported last month will include a built-in Voice Memos feature.

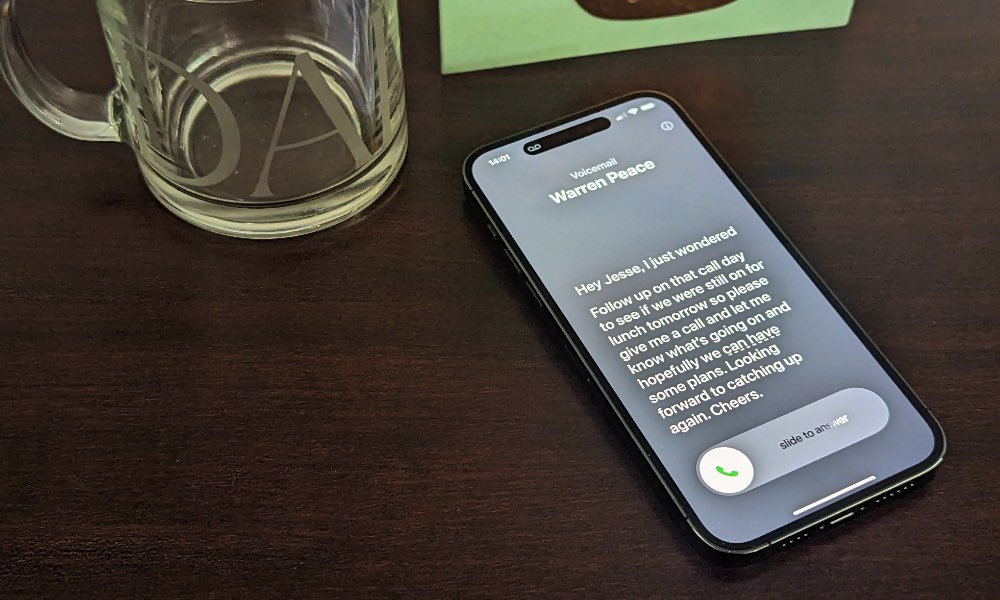

The feature’s operation in both apps is reportedly similar to the Live Voicemail capabilities that Apple introduced in iOS 17 last year. Full voice transcription seems a logical outgrowth of that, although it’s less clear whether it will be done on-device or require some help from AI servers in the cloud.

After all, Live Voicemail is far from perfect. It does a reasonably good job of deciphering what a caller is trying to say for voicemail purposes but lacks the level of accuracy that you’d want when transcribing notes. The longstanding Siri Dictation feature has similar foibles; although Apple moved it on-device and removed the time limits in iOS 15, it still isn’t ideal for handling longer transcriptions.

On the flip side, Apple added transcripts to the Podcasts app earlier this year, which are extremely accurate, but that’s because they don’t rely on any on-device processing. Transcripts are generated by Apple’s servers shortly after the podcast is uploaded. There’s a practical side to that beyond improved accuracy: there’s no private information in a podcast to be concerned about, and there’s no point in wasting energy on millions of iPhones to generate the same text over and over.

However it works, the new AI assistant would not only improve transcription to allow for things like accurately recording an entire lecture but could also generate AI summaries to highlight the most important points.

Even though Apple is said to be testing these features, it’s not certain they’ll be part of iOS 18 when the first developer betas arrive in a few weeks. If server-side processing is required, they might not work until all the back-end infrastructure is in place. In that case, Apple could announce them as “coming later this year,” as it often does with significant new iOS features.

[The information provided in this article has NOT been confirmed by Apple and may be speculation. Provided details may not be factual. Take all rumors, tech or otherwise, with a grain of salt.]