WWDC25 Showed Apple at Its Best


Apple’s Worldwide Developers Conference (WWDC) has concluded, and it’s been an interesting year for Apple. While what we saw this week may not have been the most exciting set of new products and features, especially compared to the past few WWDCs, this week’s event, both the Keynote and the WWDC sessions, felt like Apple returning to its roots.

The WWDC 2025 Keynote could best be described as “lean and mean.” It was a tight presentation where Apple focused almost exclusively on its six core operating systems. There were no hardware announcements to distract us, and Apple barely touched on peripheral products like the AirPods.

Following a two-minute opening scene of Craig Federighi driving a Formula One car around the circular roof of Apple Park to promote Apple’s upcoming summer blockbuster, F1, Tim Cook kicked things off with the usual brief introductory remarks before handing the stage to Federighi, who helmed the rest of the presentation.

Federighi spent just over three minutes recapping Apple Intelligence, obliquely apologizing for the Siri delay, and announcing the new Foundation Models framework, which lets third-party developers tap into Apple’s on-device large language models (LLMs). That was the only part of the presentation where Siri was mentioned by name or even alluded to.

By seven and a half minutes in, Federighi was handing things over to design VP Alan Dye to introduce the Liquid Glass redesign, setting the stage for the rest of the software presentations. Five minutes later, we were back with Craig for a whirlwind tour through iOS 26, watchOS 26, tvOS 26, macOS 26, visionOS 26, and iPadOS 26, in that order.

It was an unusual sequence, but it also kept things interesting. In the past, Apple has often clustered its major operating systems together, making things feel repetitive. Inserting watchOS, tvOS, and visionOS in between them was a nice change of pace. It also felt like Apple put iPadOS 26 last as a sort of “one more thing” homage, as the new multitasking and other laptop-like productivity features were easily the biggest reveal of the event.

Apple Intelligence Takes Its Proper Place

As I noted, Apple Intelligence had a much lighter presence in this year’s keynote than in last year’s. Some felt that made the event underwhelming, but considering how much Apple overpromised and underdelivered after last year’s WWDC, it’s not all that surprising that the company is a bit gun-shy about heavily promoting new AI features.

Nevertheless, Apple didn’t ignore Apple Intelligence. Instead, it presented it where it made sense, showing new features that are more tightly integrated into the user experience and have real-world applications for everyday people. This was how Apple handled things for years before “AI” was a buzzword. Many iPhone features have relied on machine learning technologies, from face and object recognition in the iOS 10 Photos app to Live Voicemail in iOS 17.

No matter how Apple brands them, this year’s iOS 26 features like Live Translation and Workout Buddy fit into the same mold; they may not be as flashy as Genmoji, but they’re both very practical uses of Apple Intelligence.

Apple’s big “AI for the rest of us” presentation last year always felt a bit forced. It seemed like the company knew it was behind in AI and had to do “something” to show it was taking it seriously. It succeeded in conveying that, but fell short of producing meaningful results.

Apple’s “tentpole” AI features weren’t anything we hadn’t already seen on other platforms. The one feature that showed promise, a more personal and contextually aware Siri, turned out to be vaporware (there’s some debate over whether Apple had a functional product behind what it showed off at its WWDC24 keynote, but a product that was never delivered still fits the definition).

Last year’s Apple Intelligence showcase was about introducing AI features for their own sake. Writing Tools and summarizations were helpful, but they weren’t anything earth-shattering, and Image Playground and Genmoji were entirely new tools that felt more like gimmicks than anything Apple users were asking for.

Image Playground and Genmoji felt like the 2024 version of Animoji from the iPhone X era. Animoji was a very fun way to show off what the TrueDepth camera could do, but it was a novelty that wore off pretty quickly. Animoji, and later Memoji, have been a core part of nearly every iPhone released in the past seven years, but when was the last time we saw anybody using them?

Still, Animoji was at least genuinely new. Apple was showing us something nobody had done before, and it made the TrueDepth camera seem even more magical. Image Playground wasn’t even novel; AI image generation had already been done, arguably far better, by third-party apps and competing chatbots.

The only new thing Apple brought to the table was its privacy focus. That’s beneficial, but it’s an open question how many iPhone users felt prompt-based image generation needed to be private. After all, do I care if some third-party app knows that I’m generating pictures of moose on snowboards?

Apple has tacitly admitted this with iOS 26 and its sibling releases. The only enhancement to Image Playground this year is a set of new image styles, all of which are powered by ChatGPT rather than by Apple’s own LLMs.

These will likely follow the same privacy rules as Apple’s other ChatGPT integrations, meaning requests will be handled anonymously unless you’re signed into a ChatGPT account. Still, it feels like Apple recognizing that it can’t do everything on its own, and that there are smaller areas where it’s better to rely on its partners.

Core Competencies: A Very Focused Keynote

This year’s WWDC keynote felt like a return to the pre-pandemic era, before Apple was forced to go all-in on virtual presentations. In those days, Apple events were live stage presentations.

By their very nature, those required a greater degree of focus. You can’t do or show everything on a stage, so Apple stuck with its major platform releases, often diverging only to call up some developers to show how third-party apps would be able to take advantage of its latest features and frameworks.

However, much of that went out the window when Apple began prerecording its keynote presentations and other events. Suddenly, the sky was the limit. Apple could show us anything and everything it wanted to. WWDC keynotes became action-packed events, flying from one presenter to another to cover everything from the usual software updates to new Macs and features for HomePods and AirPods.

To the disappointment of some, this year’s WWDC keynote had none of that. We weren’t expecting any new hardware, but Apple went a step further in mostly ignoring any products that weren’t central to its core operating systems — and even then, the event was about the software that ran on those products; little was said about the devices themselves.

Staying (Mostly) Out of the Home

The most conspicuous example of this was in the “Home” category. At the start of the virtual keynote era, Apple introduced a new segment of its presentation to cover the potpourri of devices it wanted to talk about that didn’t quite fit anywhere else: typically the Apple TV, HomePod, and AirPods.

This was labeled “Home” or “Home & Audio,” depending on how Apple structured it. During this segment, Apple minimized the operating systems (“tvOS” was barely mentioned), focusing more on the device features and how they all worked together.

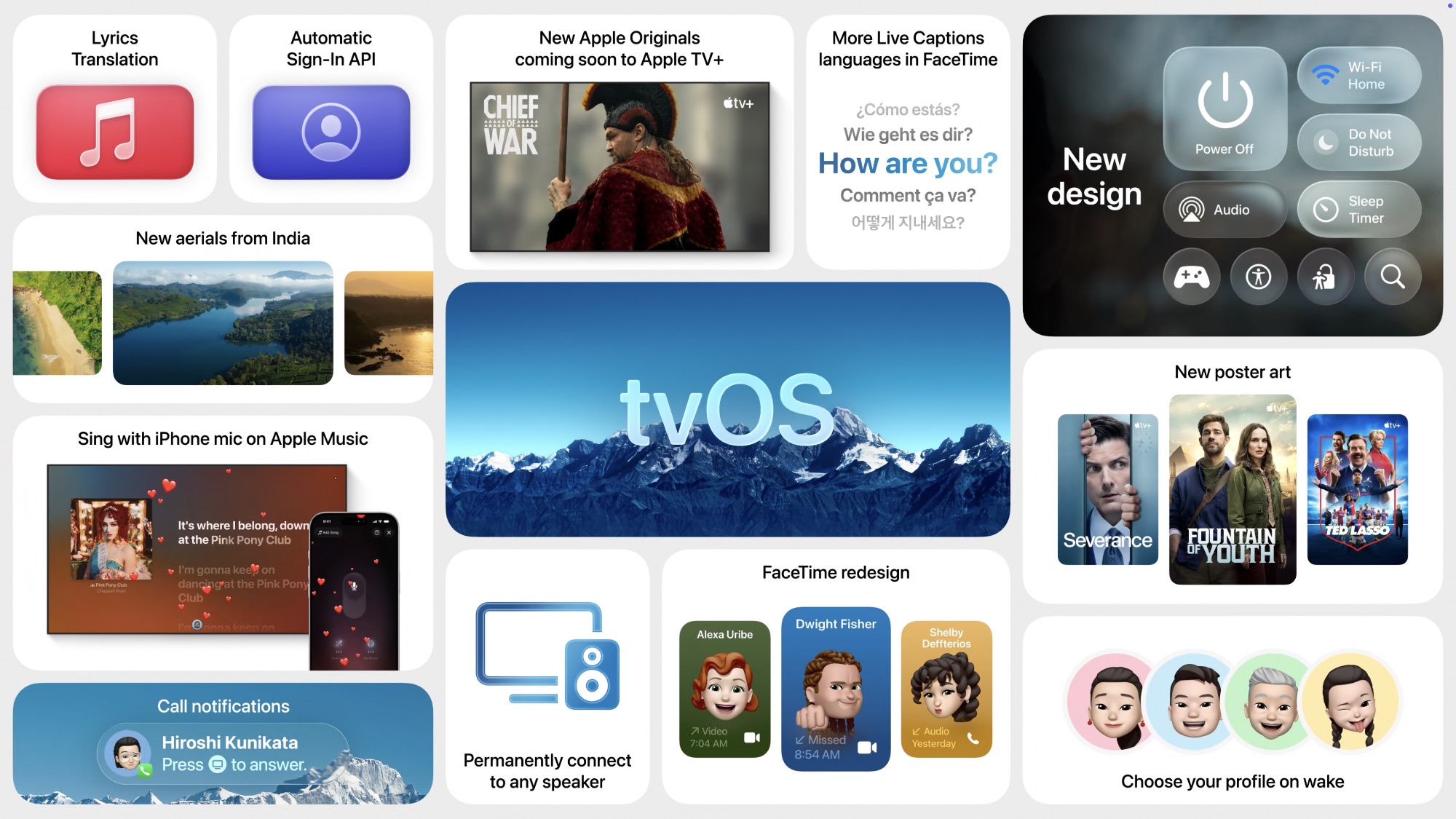

This year, Apple ignored that larger catch-all and focused solely on tvOS as an operating system platform. It was the first year in the virtual keynote era that it showed a “features” slide with tvOS in the center.

Meanwhile, the AirPods were mentioned only in passing during Apple’s iPadOS 26 presentation in the context of how content creators could use them with new iPad features to record studio-quality audio and control video recording. There’s more coming to the AirPods this year, but Apple didn’t feel the need to muddy the waters by showing them off separately from its operating systems.

The biggest and possibly most disappointing omission here was the HomePod. Skipping a “Home” category was likely more about Apple having nothing it wants to show us yet (its Siri struggles have supposedly held back the rumored home hub), but it also kept the focus on Apple’s unified operating systems. The HomePod isn’t part of that; it runs an operating system based on tvOS, but Apple treats it as embedded software rather than an OS brand.

Depending on how Apple’s work on Siri goes, we may still see a “homeOS” before next year’s WWDC. Still, it’s an open question whether Apple will create a branded operating system for it or treat the first generation of its new home hub like the HomePod.

A Word on Liquid Glass…

Before the WWDC keynote, analysts and pundits predicted that Apple’s major redesign would be the highlight of the event, and that proved true, to the point where Alan Dye’s opening presentation set the theme for the rest of the keynote.

Liquid Glass turned out to be both more and less than what many were expecting. I’ve been using nearly all the new software releases since Tuesday, and while they’ve definitely undergone a noticeable design change, it feels holistic and evolutionary rather than revolutionary.

In its current form, Liquid Glass isn’t without its quirks and imperfections, but we’re at the first developer beta, and it’s a safe bet this will be tweaked and refined over the next few weeks.

Apple hasn’t kicked the traditional iOS look to the curb like it did in 2013, when it abandoned skeuomorphism for a flatter and more digital design. Picking up an old iPhone running iOS 6 feels like a trip into the distant past, and it was jarring even in 2014, by which time iOS 7 had become very comfortable.

By contrast, while the differences between iOS 18 and iOS 26 are noticeable, they’re not uncomfortable. It took me only a few hours to adjust to iOS 26 (and iPadOS 26, watchOS 26, and macOS 26), and while there are still a few things that surprise me, the new design already feels smooth and natural. That’s a much faster adaptation curve than when I first installed iOS 7 in 2013.