Sneak Peek: 7 New Accessibility Features Coming in iOS 19

We usually have to wait for Apple’s Worldwide Developers Conference (WWDC) keynote each year to find out about all the goodies in the company’s latest major software releases. However, over the past four years, Apple has provided us with an early glimpse of the accessibility features that will be included in each new update.

These previews typically arrive around four weeks before WWDC, and the timing isn’t coincidental. With Apple’s strong commitment to accessibility, the company highlights these new features to coincide with Global Accessibility Awareness Day (GAAD), which falls on the third Thursday of May each year.

Apple began showcasing its upcoming accessibility features in 2021, the same year the GAAD Foundation was formed. That was the year AssistiveTouch came to the Apple Watch, and AI-based VoiceOver descriptions appeared in iOS 14.

When it made another accessibility announcement in 2022 to reveal Door Detection and Apple Watch Mirroring, it was clear that this was going to become an annual event. Its 2023 announcement included Point and Speak, Live Speech, and Personal Voice, and last year’s iOS 18 release brought over a dozen new accessibility features, including Eye Tracking, Vehicle Motion Cues, Music Haptics, and Vocal Shortcuts.

So, it’s not a big surprise that the company has unveiled another collection of new accessibility features and initiatives, along with enhancements to several existing ones. Read on for everything that’s coming to Accessibility in iOS 19.

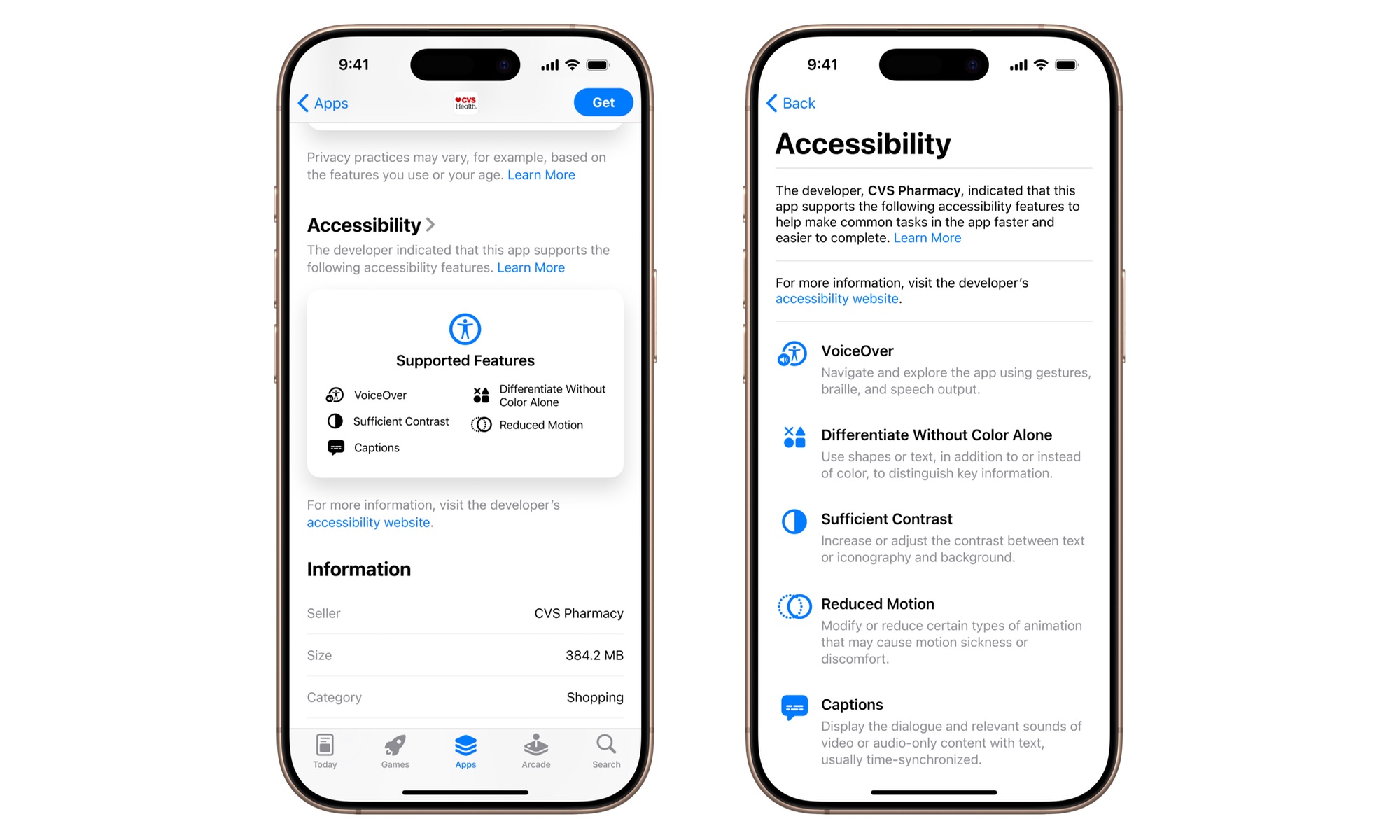

Accessibility Nutrition Labels

Five years ago, Apple introduced Privacy Nutrition Labels to the App Store in iOS 14, helping users determine precisely how much of their personal information they’d need to surrender by downloading and installing a given app.

The concept was to provide a glanceable and easily understood summary, in the same way a nutrition label on a food product shows you the key stats without requiring you to wade through a bunch of text and lists of ingredients.

That turned out to be such a success that other app marketplaces have since adopted a similar technique. Now, Apple plans to expand it to allow users to know whether an app will meet their accessibility needs.

This is a feature that’s even more beneficial than the privacy labels, as it can save a lot of folks the trouble of discovering an app is unusable for them only after they’ve downloaded it.

Accessibility Nutrition Labels will indicate which of Apple’s core accessibility features each app supports, including VoiceOver, Voice Control, Larger Text, Sufficient Contrast, Reduced Motion, captions, and more. They’ll be available on the App Store worldwide when iOS 19 launches later this year, although it will still be up to developers to provide the necessary details so their product pages can display them.

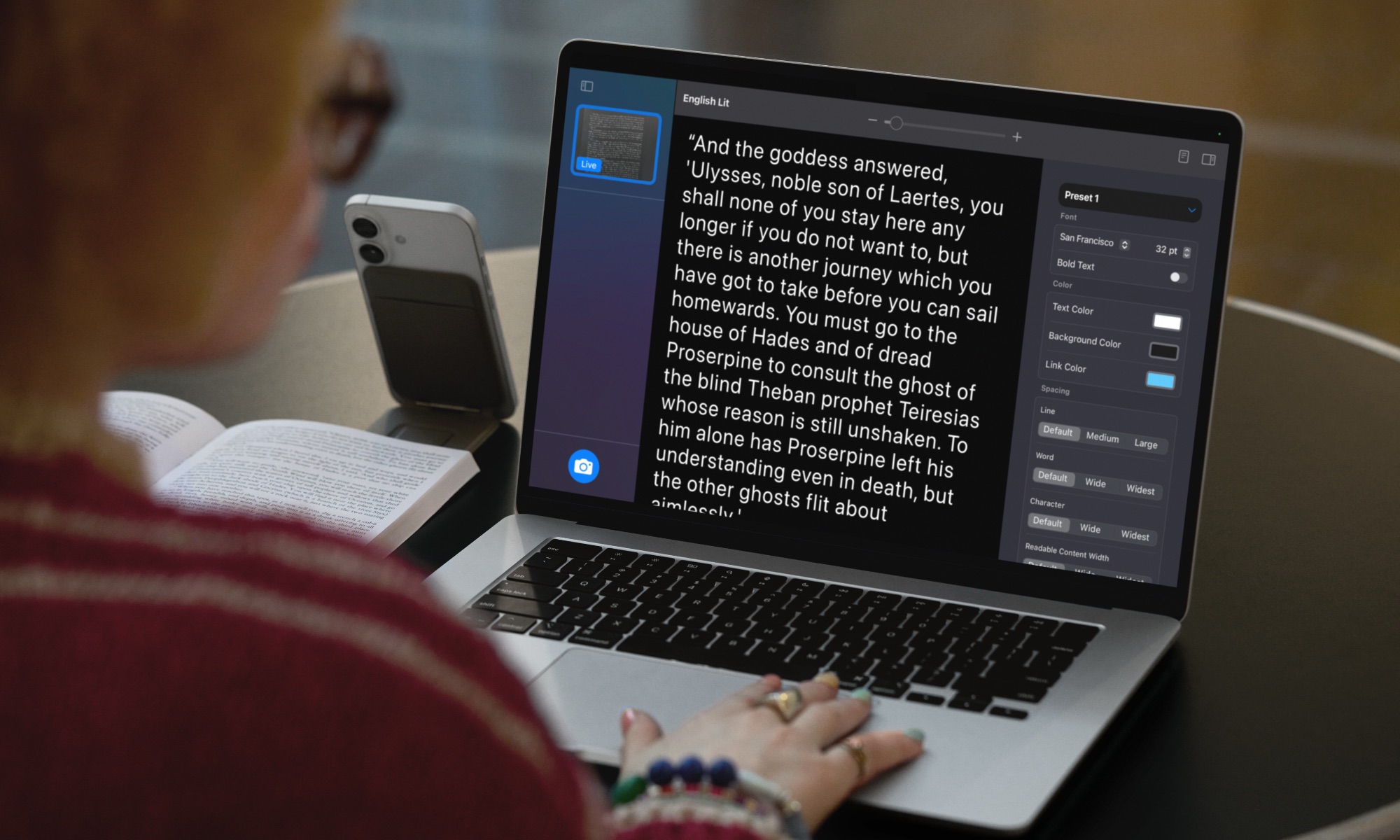

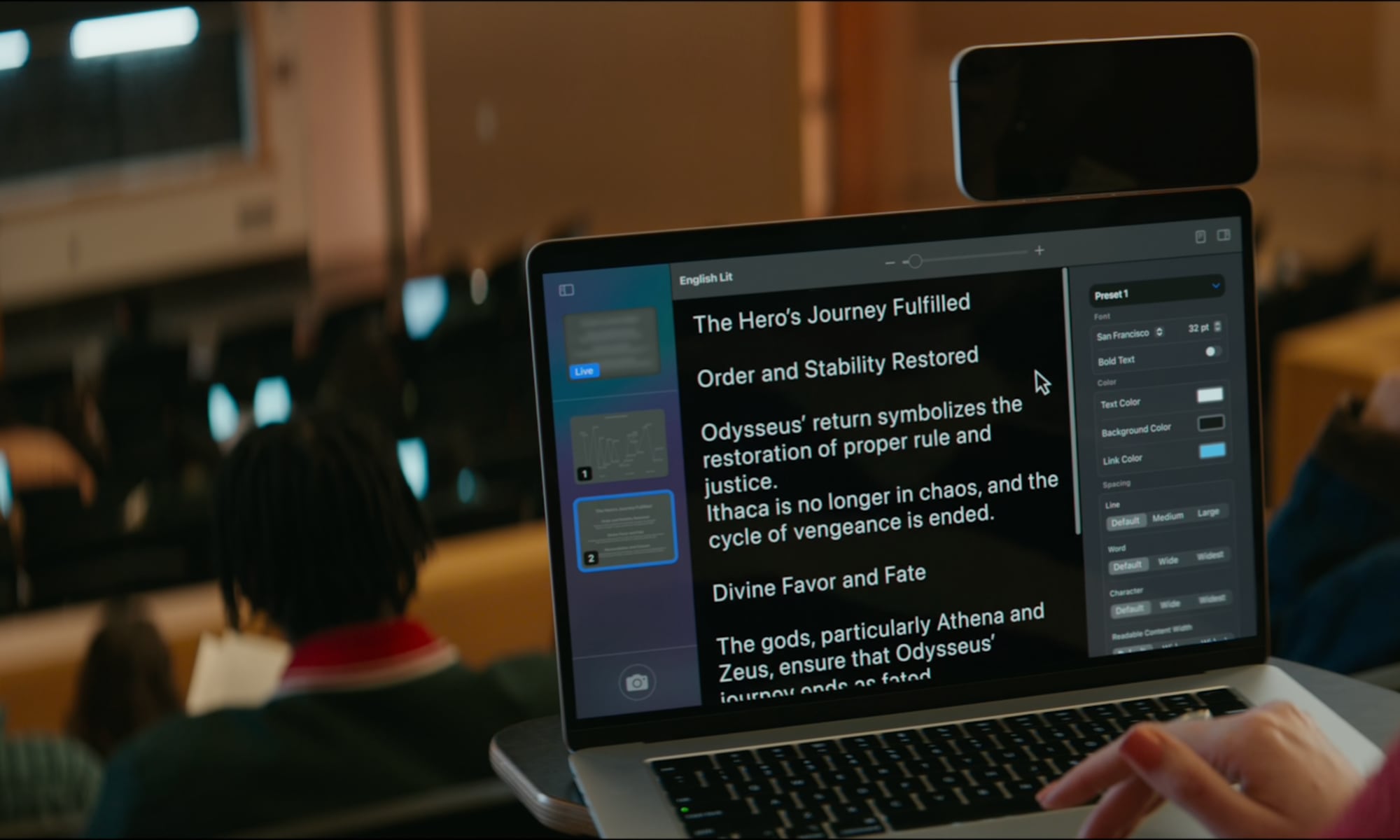

Magnifier for Mac

One of the hidden gems on your iPhone is an accessibility app called Magnifier. It’s been around since 2016, and while it was initially available exclusively in the Accessibility settings, it can now be found as a standalone app on both the iPhone and iPad.

As the name suggests, Magnifier helps folks with low vision use the iPhone camera to zoom in to see fine details or read text, but it’s also handy for anyone who needs a better look at something hard to read, such as the nearly microscopic print that’s often found on small packages and accessories.

With macOS 16, Apple is expanding Magnifier to the Mac, making the physical world even more accessible. While it can be used with the built-in camera on a MacBook, it will also support Continuity Camera on iPhone, including reading documents using Desk View, plus any attached USB camera. Multiple live session windows will let users multitask by viewing a presentation with a webcam while also reading a book using Desk View, and users can adjust brightness and colors to make the text and images easier to see.

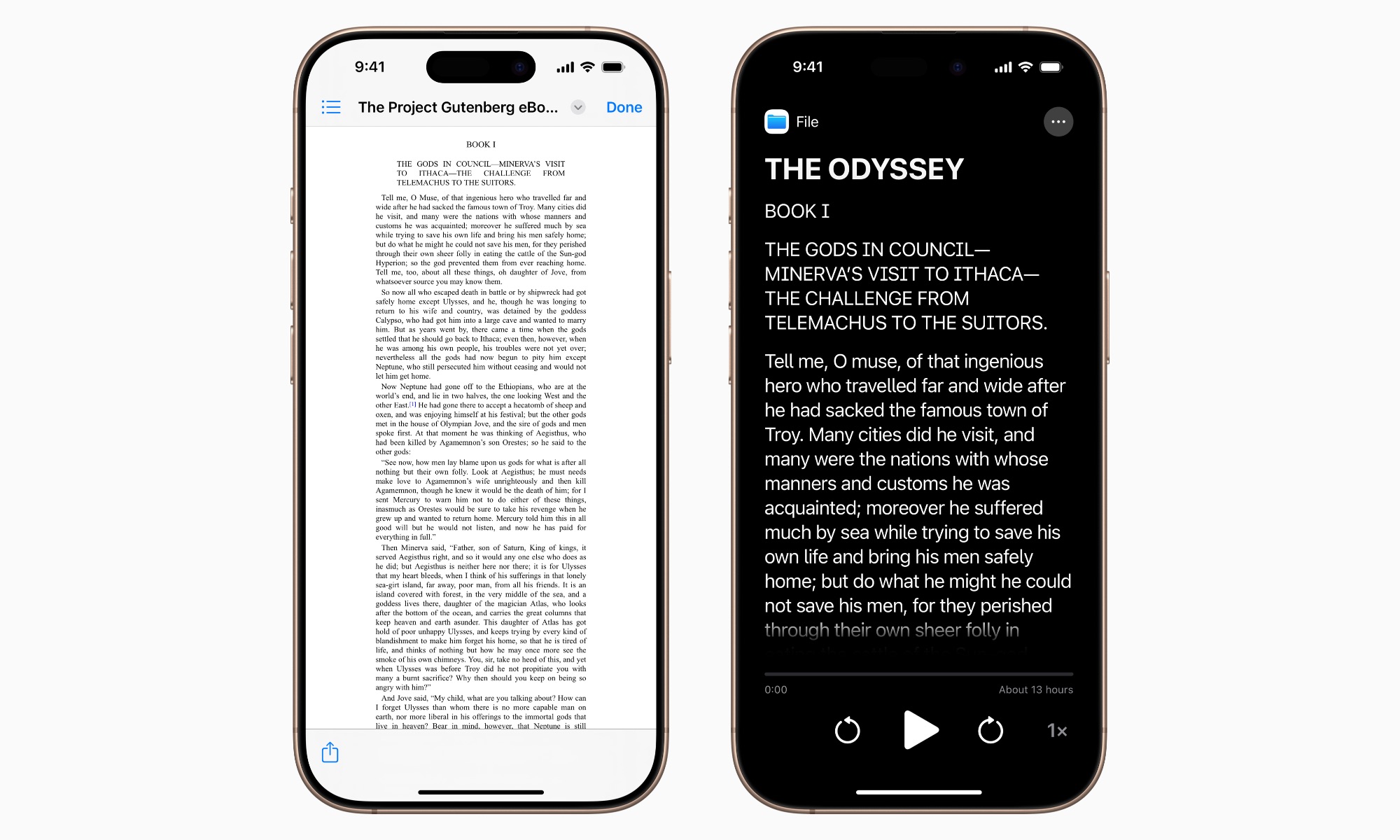

Accessibility Reader

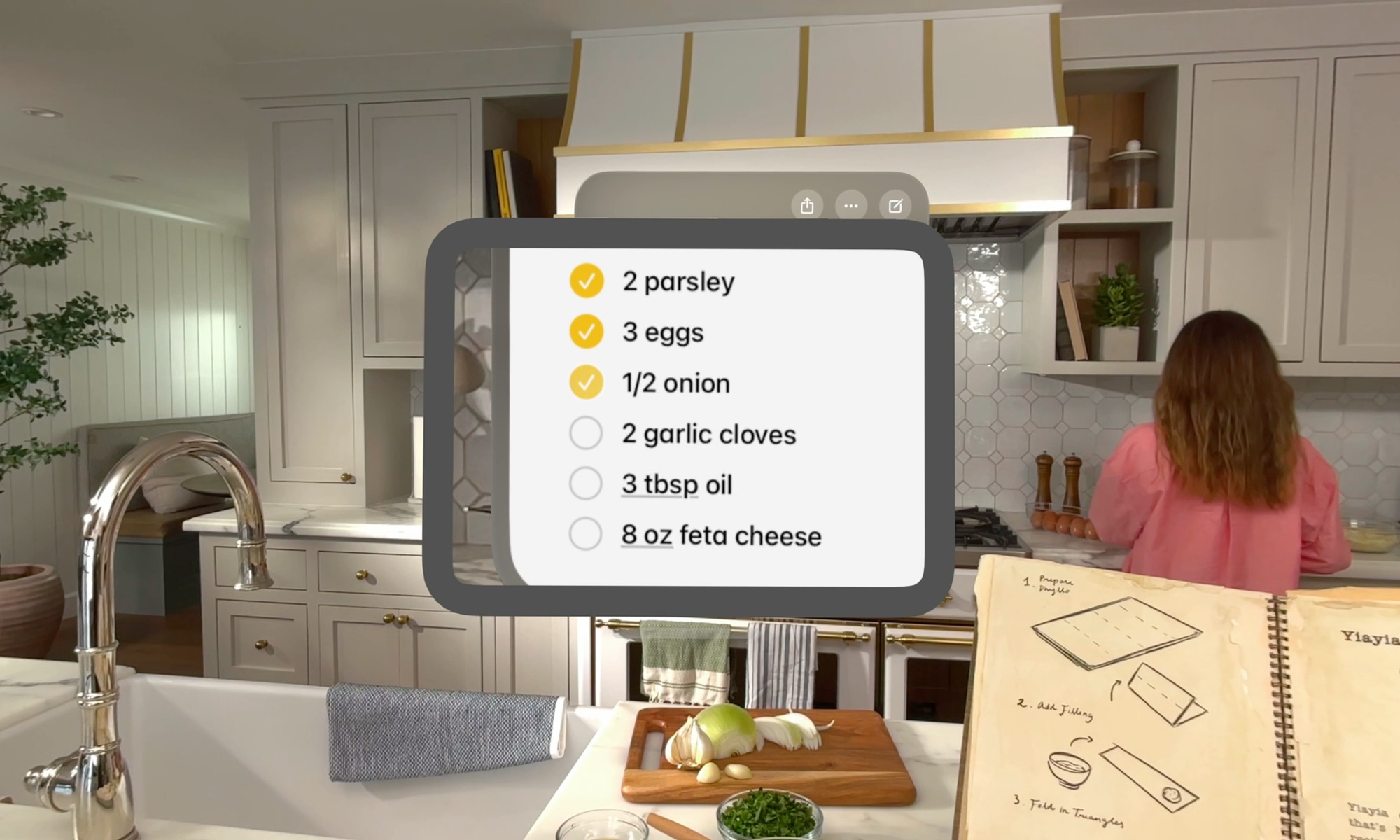

Magnifier will be enhanced even further by a new feature that turns Apple’s Safari Reader mode into a system-wide feature and adds new accessibility enhancements.

Accessibility Reader will let users transform text into a format that’s easier for them to read. This can be text pulled in from Magnifier or digital text from a webpage, book, email, or PDF file. Designed for users with disabilities such as dyslexia or low vision, Accessibility Reader will offer ways to customize font, color, spacing, and more for any block of text fed into it.

The new Accessibility Reader will be available on iPhone, iPad, Mac, and Vision Pro and can be launched from any app. It will also be integrated into the Magnifier app on iPhone, iPad, and Mac, making it even easier to read text from the real world, such as books, magazines, or dining menus.

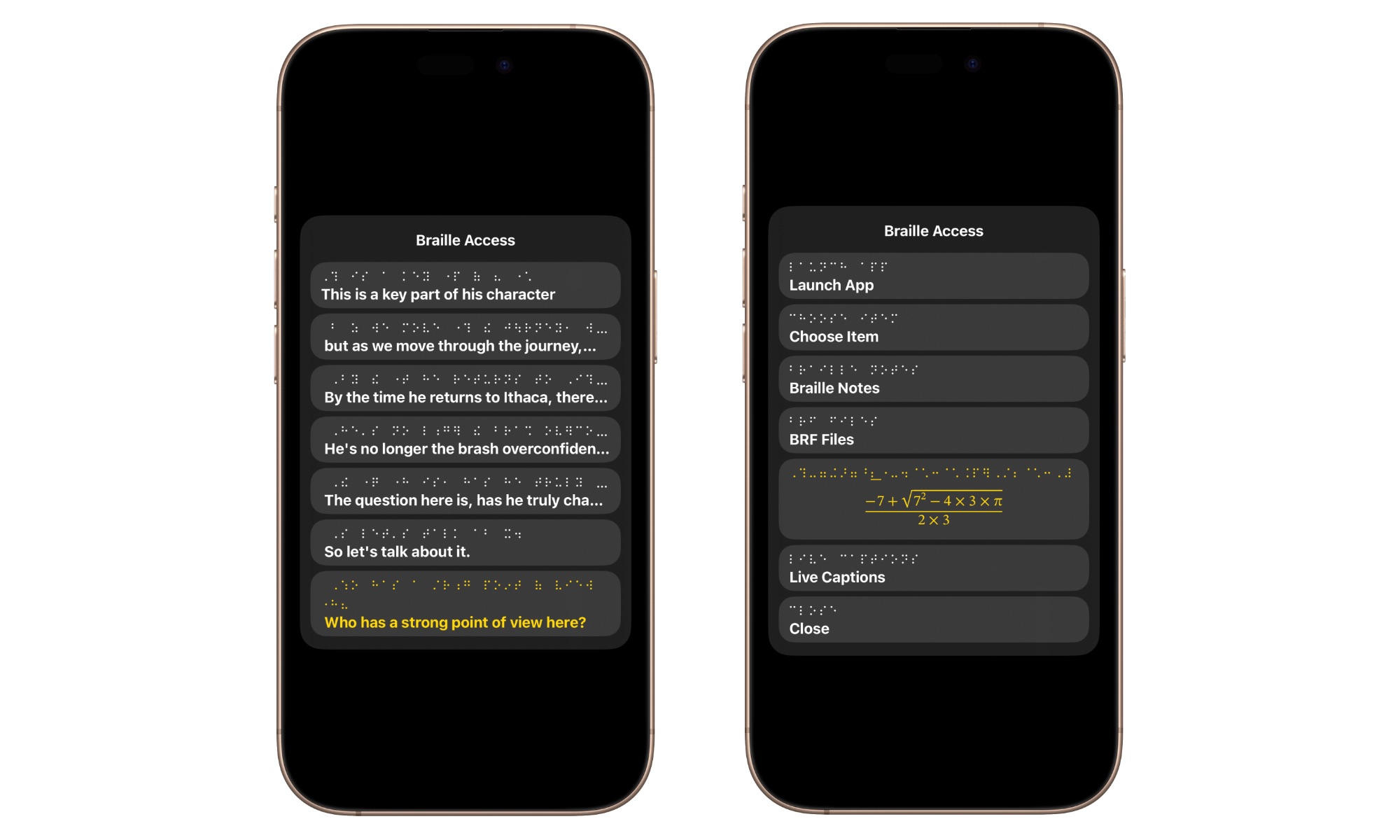

Braille Access

Braille Access will turn the iPhone, iPad, Mac, and Apple Vision Pro into a full-featured braille note taker, effectively adding braille as an input method for launching apps, taking notes, and even performing calculations.

Users can type with Braille Screen Input or a connected braille device and also perform calculations using the popular Nemeth Braille code for math and scientific applications. There’s also support for Braille Ready Format (BRF) files created on braille note-taking devices, plus an integrated form of Live Captions that lets users transcribe conversations in real time directly on braille displays.

Live Captions on Apple Watch

Live Captions came to the iPhone three years ago in iOS 16, and now Apple is adding them to the Apple Watch. Paired with Live Listen, you’ll be able to use your iPhone as a microphone not just to send enhanced audio to your AirPods but also to decipher what’s being said by following along with speech-to-text captions on your wrist.

With watchOS 12, the Apple Watch will also serve as a remote control to start or stop a Live Listen session or even jump back to replay something that may have been missed. This will enable individuals who are deaf or hard of hearing to more easily position their iPhone to capture the speaker’s voice, such as during a meeting or classroom lecture, without giving up their ability to control the session.

Zoom and Live Recognition on Vision Pro

As you’re probably aware by now, Apple takes privacy very seriously. The Vision Pro created a whole new set of privacy concerns — imagine having always-on cameras scanning your entire room and tracking where your eyeballs are looking — so Apple made sure that none of this data would be available to third-party apps.

Unfortunately, protecting user privacy often runs contrary to usability, and Apple’s limitations also ruled out apps being able to use the cameras to benefit users with accessibility needs. While Apple still isn’t about to open the floodgates to let developers get unfettered access to the Vision Pro cameras, it’s created a new API in visionOS 3 that will let apps like Be My Eyes access the main camera to provide live, person-to-person assistance, helping users who are blind or have low vision better understand their surroundings hands-free.

Apple is also updating Zoom to let users magnify everything in their field of view and adding Live Recognition in visionOS to provide VoiceOver descriptions. This will let visionOS describe a user’s surroundings, help find objects in the real world, read documents, and more.

Other Accessibility Feature Improvements

Apple has also announced enhancements to several existing accessibility features:

- Background Sounds will offer new EQ adjustments, the option to stop playing automatically after a set time, and new Shortcuts automation actions.

- Personal Voice is getting better thanks to advances in on-device machine learning, so you’ll end up creating an even more natural voice with even less investment in training. Apple says it will now take less than a minute and can be done using only 10 recorded phrases. It’s also adding support for Spanish (Mexico).

- Vehicle Motion Cues is expanding to Mac, helping you avoid motion sickness when you’re using your MacBook on a road trip. Apple is also adding new ways to customize the animated on-screen dots on the iPhone and iPad.

- Eye Tracking on the iPhone and iPad will offer the option to use a switch or dwell to make selections. Additionally, a new keyboard dwell timer and Switch Control improvements will make typing and navigation easier and more natural.

- Head Tracking will build on Eye Tracking, letting users more easily control iPhone and iPad with head movements.

- Assistive Access will offer a simplified media player in a new custom Apple TV app, and developers can now use the Assistive Access API to create tailored experiences for users with intellectual and developmental disabilities.

- Music Haptics will become more customizable, allowing users to adjust its overall sensitivity and choose whether they want haptics for the entire song or just the vocals.

- Sound Recognition will gain the ability to recognize a user’s name so folks who are deaf or hard of hearing can be alerted when their name is being called.

- Voice Control is expanding to Xcode to support software developers with limited mobility.

- CarPlay Accessibility improvements include Large Text for those with low vision and updates to Sound Recognition to alert drivers or passengers to the sound of a crying baby in addition to car horns and sirens (which were added in iOS 18 last year).

- Live Captions will be available in more languages, including English (India, Australia, UK, Singapore), Mandarin Chinese (Mainland China), Cantonese (Mainland China, Hong Kong), Spanish (Latin America, Spain), French (France, Canada), Japanese, German (Germany), and Korean.

- Accessibility settings can be quickly shared between devices so you can transfer your custom setup when borrowing a friend’s device or using a public kiosk.

Apple is also laying the groundwork for controlling an iPhone, iPad, or Vision Pro with the power of your mind:

For users with severe mobility disabilities, iOS, iPadOS, and visionOS will add a new protocol to support Switch Control for Brain Computer Interfaces (BCIs), an emerging technology that allows users to control their device without physical movement.

As usual, today’s announcement also kicks off a series of initiatives to celebrate Global Accessibility Awareness Day, with dedicated tables in Apple Retail stores to spotlight accessibility features and featured content in Apple Music, Apple Fitness+, Apple TV+, Apple Books, Apple Podcasts, Apple News, and the App Store.