Apple and Google Are Working to Create the Future of Smartphone Controls

Credit: Tap

Since Apple released the first iPhone in 2007, smartphones have largely relied on touch inputs and gestures for the majority of the user experience. But that doesn’t mean this will always be the case.

Over the last couple of years, reports have surfaced suggesting that both Apple and Google are working on gesture-based navigation systems that won’t use the touchscreen. And while the end goal is to open up new user interaction possibilities, their exact methods differ.

Google Double-Tap

A report this week indicates that Google is developing a gesture system for its first-party Pixel handsets that would let users double-tap the back of their smartphones to control various features and system functions.

That new feature, internally codenamed Columbus, was first discovered in Android 11 code by XDA-Developers.

The site says that the double-tap gesture can be used to perform actions such as dismissing timers, launching the camera, invoking Google Assistant, or silencing incoming calls. (Though, XDA notes, users may be able to choose their own actions too.)

Interestingly, the double-tap gesture may not be limited to future Pixel devices. It doesn't require any special hardware, relying only on a smartphone's existing gyroscope and accelerometer. XDA was able to get the system working on both a Pixel 2 and a Pixel 4.

Of course, this type of gesture is prone to accidental triggers. To prevent them, Google has also introduced so-called “gates” that dictate how and when the double-tap gesture actually activates certain features.

Google does have a long history of experimenting with various alternative interaction methods on its first-party smartphones — including Active Edge and Motion Sense.

Apple’s Gesture-Based Navigation

While none of Google's experimental features have necessarily “taken off,” its willingness to experiment makes Apple's gesture innovation seem slow by comparison. But that doesn't mean Apple isn't working on similar features in the background.

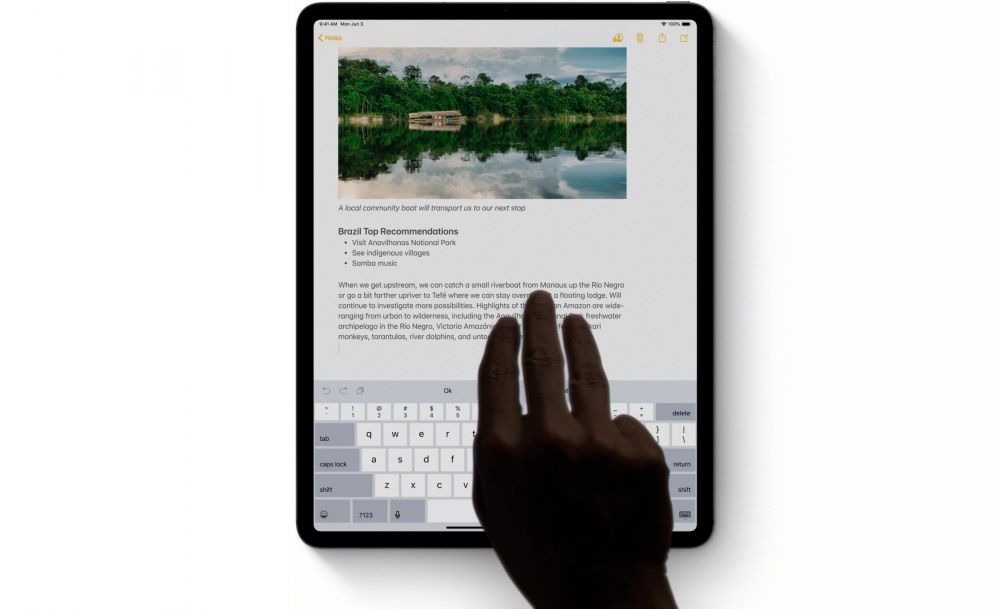

Back in 2018, Bloomberg reported that Apple was experimenting with touchless gestures, allowing users to control various iOS functions with a finger but without actually touching the display.

While vaguely similar to Motion Sense, Apple’s implementation would seemingly be more advanced, with sensors that could detect how close a finger or hand is to a display.

The publication’s timeline also suggested that Apple could release its first in-air gesture iPhone this year. While we haven’t heard much to corroborate that since the Bloomberg report, there are other hints that Apple is continuing to work on gesture-based controls.

A 2017 Apple patent, for example, showed off a similar touchless system that could allow users to control Macs with hand motions. An even more recent patent from this year depicted a new Apple Watch Digital Crown that could sense a user’s hand motions.

These technologies may be a few years off, but Apple is likely still working on them. And with an Apple head-mounted AR device possibly on the horizon, development of gesture-based controls will probably only continue to heat up.