The Latest iOS 26 Beta Adds a Handy New Apple Maps Feature


Among the many features coming in iOS 26 are some subtler but useful improvements to Apple Maps, and this week’s fifth beta appears to have added one more.

With iOS 26, Apple Maps will get better at keeping track of your preferred routes, thanks to on-device machine learning. This isn’t an Apple Intelligence feature, per se, so it should come to every iPhone capable of running this year’s software release, which means the 2019 iPhone 11 and later models.

The preferred routes feature has been up and running since the first developer beta, although it’s naturally gotten a bit more polished in recent betas. It works directly on the iPhone and via CarPlay, and will suggest the best route to your destination based on your habits, even factoring in stops you might like to make along the way, such as hitting your favorite coffee shop on the way to work.

There’s also a Visited Places feature that will help you keep track of where you’ve been. Apple has tracked locations you regularly visit behind the scenes for years to help inform suggestions in Apple Maps, as well as other apps like Calendar, Photos, and Reminders. Since Apple isn’t in the data mining business, this information is stored solely to help you, not Apple or any other third parties. It’s always been 100% private and stored only on your device — and it’s always been easy to turn off if it’s something you’d rather not have your device collecting. However, it’s also been somewhat buried, exposed more for transparency than as an actual feature.

With iOS 26, Apple is bringing this information directly into Apple Maps so you can more easily browse, search, and manage the list of places you’ve visited. As before, it’s all stored solely on your device and protected by end-to-end encryption. You can still turn it off if you don’t want to use it, and individual locations can be removed with just a swipe.

What’s New in Apple Maps in Beta 5

Apple highlighted Preferred Routes and Visited Places during the iOS 26 presentation at its Worldwide Developers Conference (WWDC) in June. However, it looks like the company has snuck a new feature into the most recent iOS 26 beta that we didn’t see coming: natural language search.

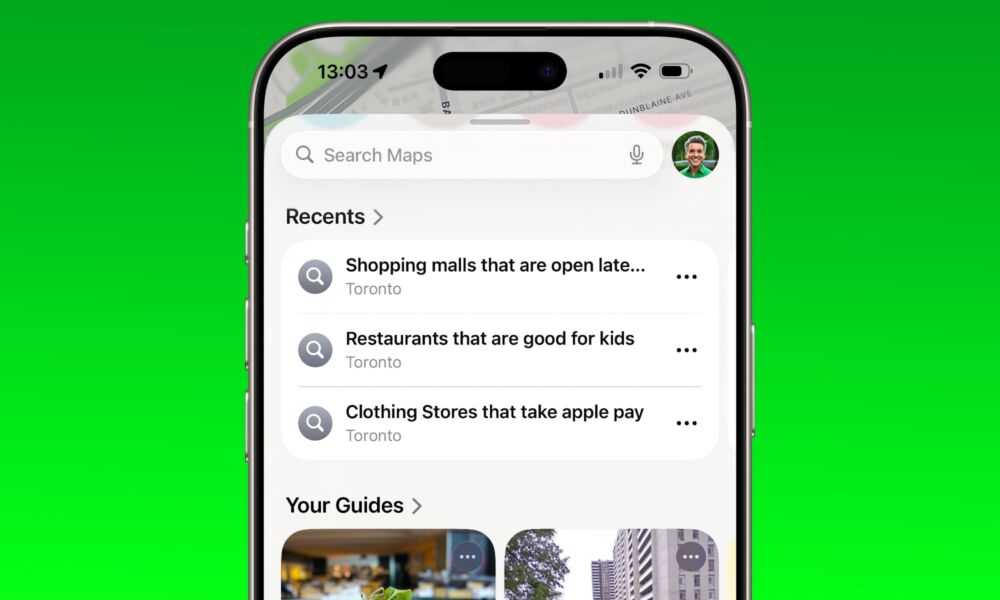

The feature was first discovered by 9to5Mac, and we’ve confirmed that Apple Maps in iOS 26 beta 5 now returns results for all manner of natural language searches. The example Apple provides is “find cafes with free Wi-Fi,” but you can try nearly anything else you can think of, such as “Shopping malls that are open late on Sunday” or “Restaurants that are good for kids.”

These natural language searches also trigger many of the built-in filters that are already present in Apple Maps, letting you easily refine the search further. For example, a search for “Clothing stores that take Apple Pay” will show results with the “Accepts Apple Pay” button toggled on. You could then choose additional options such as “Open Now.”

Searches can combine amenities, type of venue, and other factors such as a starting location, which means you can effectively find things near points of interest without having to navigate there first; for example, “Coffee shops in downtown New York with free Wi-Fi.” Key landmarks also work as starting points in some cases, but not all. For instance, Ryan Christoffel at 9to5Mac pointed out that Disney World worked as a starting point, but I wasn’t able to replicate that from Toronto, and similar queries using other venues like the CN Tower, Empire State Building, or Yankee Stadium also failed to produce the desired results. Of course, it’s still in beta, as evidenced by the fact that the same query sometimes didn’t even work twice. Apple will undoubtedly flesh this out as we get closer to release, and even beyond, as it’s done with its other natural language search features.

This may require an iPhone that supports Apple Intelligence, since it seems to mirror the natural language search features introduced in other apps like Photos. However, since it’s based on publicly available information rather than personal data, it’s more likely to be processed on Apple’s servers, which would do the heavy lifting. This is how natural language search works in Apple Music, Apple TV, and the App Store, so Apple Maps could work the same way.

On-device processing would only be necessary if the natural language search incorporated user-specific data, like the aforementioned Visited Places feature, and it’s telling that a query like “coffee shops I’ve visited recently” returns no results.