iOS 13 Beta 3 Is Out with the Magical Ability to Fix Eye Contact During FaceTime Calls

Credit: Rocketclips / Shutterstock



Apple has just released the third beta of iOS 13 to registered developers, coming in at the expected two-week interval after the second beta was released on June 17, and four weeks after making the original developer preview available at its annual Worldwide Developers Conference.

Early developer betas of major iOS releases often bring significant new features, and the second beta didn't disappoint in this regard, adding some of the things Apple promised on stage that simply didn't make it into the first beta, along with a few surprises. As the iOS 13 betas mature, we should expect to see more polishing, bug fixes, and performance improvements, although the third beta still has a few treats in store as well.

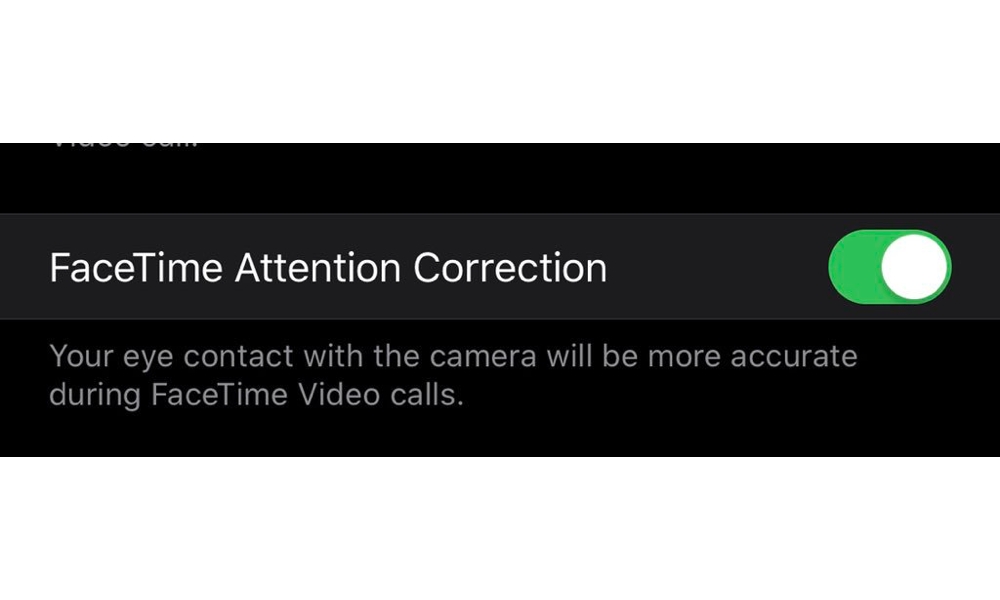

FaceTime Attention Correction

Perhaps the most significant new feature in the third beta is something called FaceTime Attention Correction. First spotted by Twitter user Kieran R, a new setting that can be toggled on suggests that Apple will be using some special magic to make users on FaceTime look like they're making proper eye contact with the camera, rather than looking down at the screen.

Following the release of the new iOS 13 beta, a number of developers began testing the feature and tweeting examples of it at work, revealing how much of a difference it makes to the FaceTime video chat experience.

According to 9to5Mac, this was something Mike Rundle predicted back in 2017 as a possible feature for a 20th-anniversary "iPhone XX." It appeared in a huge 30-page list of predictions, most of which were extreme future tech, so needless to say Rundle was "pretty astounded" to see it arrive already.

An analysis by Dave Schukin reveals that the new feature actually uses Apple's latest ARKit frameworks to capture a map of the user's face and then adjust the eyes accordingly. That's probably not a huge technological stretch, considering what Apple can already do with Face ID, Animoji, and the Attention Aware Features that have been available since the TrueDepth camera was first introduced on the iPhone X back in 2017.

That said, this particular feature seems to require a bit more horsepower. Reports indicate that it's only available on the iPhone XS, iPhone XS Max, and iPhone XR, and not the iPhone X, since it relies on ARKit 3 APIs that require the A12 Bionic chip found only in those models.

When Will We See a Public Beta?

In previous years, Apple's public betas have arrived only a day or two on the heels of the corresponding developer betas, but with the first iOS 13 public beta only arriving last week, it seems Apple may be staggering them a bit more this time around. Despite being released a week apart, the second developer beta and the first public beta were the exact same build of iOS 13 (17A5508m), so the delay wasn't the result of Apple preparing a new version for public beta testers. It seems likely that the next public beta will also be the same build that's just been sent out to Apple's developers.

So it's unclear at this point whether Apple plans to continue this practice, in which case we likely won't see a public beta until next week, or move back to the tighter release schedule of prior years, which could result in the second public beta arriving as soon as today.