What Will Apple’s AR Glasses Actually Do?

Credit: iDrop News

After spending a decade transforming the smartphone industry and inventing the tablet market, Apple has been expanding its horizons for the next big transformative technologies. Rumours have abounded for years about a “secret-but-not-so-secret” car project that will likely arrive by 2025, and Apple has clearly shown more than a passing interest in augmented reality and virtual reality applications, with most expecting that we’ll see an actual AR headset from the company within the next two years.

Like most of Apple’s secretive projects, however, it remains to be seen exactly what form that’s going to take. Rumours have ranged from Apple creating a full VR headset like the Oculus Rift to something more suited for everyday use, like Google’s Glass project. Leaked reports have also suggested that the headset would be powered by a new mobile operating system, “rOS,” and be a completely standalone device rather than relying on a paired iPhone being nearby.

Although a lot of rumours have talked about how such a headset might be put together, at the end of the day Apple — and its customers — are more likely to care about how it works and what it’s capable of than technical spec sheets.

Apple’s approach to Augmented Reality so far

Apple took its first foray into Augmented Reality with the release of iOS 11 back in 2017, when it introduced a new framework called ARKit. While app developers had toyed with Augmented Reality applications for years before that — Yelp famously introduced an early AR feature known as “Monocle” to its local search app back in 2009 — this was the first attempt by Apple itself to promote the technology and encourage more developers to jump on board by providing a unified framework.

Apple’s strategy bore fruit pretty quickly, and it wasn’t long before everything from furniture shopping apps to games was taking advantage of Apple’s new ARKit framework to implement their own augmented reality features, which became even more sophisticated by being tightly integrated with the iPhone’s core operating system and sensors. For example, the popular game Pokemon Go, which had incorporated its own AR features prior to Apple’s announcement, got a boost in accuracy and performance as a result of being able to plug into ARKit.

A year later, with iOS 12, Apple seriously upped the game with ARKit 2, which brought major improvements to live image detection and tracking, as well as laying what many believed was the foundation for Apple’s AR glasses, since being able to accurately place and track objects in a real-world environment is critical to wearable AR solutions. The accuracy also got a boost, and Apple even highlighted this with its own “Measure” app in iOS 12, which could use the ARKit 2 features to make accurate measurements of real-world objects through the iPhone camera.

What this means for Apple’s AR Glasses

While every app developer has of course been able to take their own approach to creating augmented reality experiences for their users, the ARKit 2 features that Apple has been focusing on seem to lend themselves far more to creating an everyday device that’s designed to overlay the real world and provide practical applications rather than something that’s focused specifically on gaming or niche technical uses.

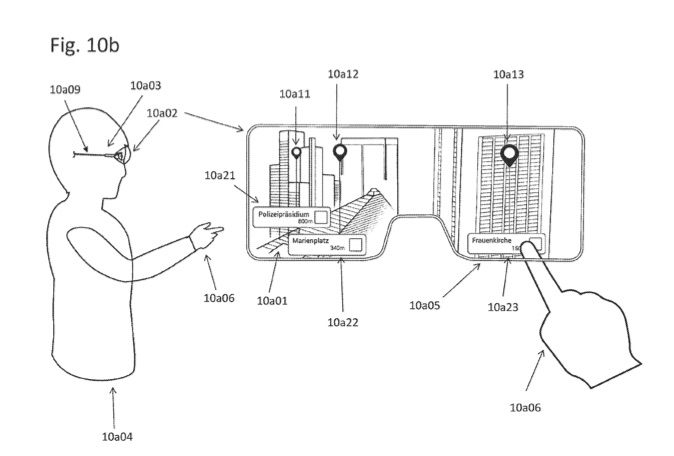

In fact, a new patent recently discovered by AppleInsider provides some more insight into Apple’s thinking. The patent, which Apple applied for back in 2017, describes a “method and mobile device for display points of interest in a view of a real environment displayed on a screen of the mobile device.” This is basically patent-lingo for a typical augmented reality scenario where indicators are overlaid on a view of the real world. The patent also mentions a “head-mounted display,” making it clear that in this case Apple is focusing on applying the technology to its AR glasses, rather than simply extending what ARKit can already do on the iPhone and iPad.

The illustrations that accompany the patent show a head-mounted display that’s able to identify and label buildings, while another image shows how an accompanying iPhone could be used to display additional details on specific points of interest.

Even more interesting is that the patent suggests that the AR glasses could be used not just on a macro level in larger spaces, but could also help users find objects around their home or office. For example, Apple could extend its “Find My AirPods” feature to actually show where a lost pair of AirPods is located in the form of an overlay, guiding the user directly to them.

With ARKit 2 already able to handle real-world object detection, it’s easy to see how this could be extended to helping point users to other visible items such as keys, or quickly identify the items that are visible in a given space. With iOS 12, Apple has also already demonstrated the ability to take measurements of objects, so determining room dimensions or the distance between spaces would also be a possibility.

Another illustration in the patent shows AR glasses identifying key items of a car’s interior dashboard, which could help users familiarize themselves with new vehicles more quickly, and could especially lend itself to car sharing services, where users may frequently drive different models of cars.

So what will the Apple Glasses look like?

While the illustrations in the patent show a head-mounted display that looks like a pair of ski goggles, it’s important to understand that patent illustrations in cases like this are simply to demonstrate a concept, and often bear little resemblance to what a finished product would look like.

There are still a few clues, however. For instance, the patent refers to the use of a “semi-transparent display,” which suggests that Apple’s AR headset would be more akin to a pair of normal eyeglasses than a headset with an integrated display and camera. This would also certainly appear to be more in keeping with Apple’s design ethos, especially if the company is attempting to create a product for everyday use.

As with most Apple patents, the mere existence of a patent application doesn’t necessarily translate into a real-world product. However, with mounting evidence that Apple is actively working on a set of AR glasses, and knowing Apple’s sense of style, we still think it’s a pretty safe bet that these are going to look like mainstream eyeglasses rather than a Robocop visor.

As for when we’re going to see them? Of course nobody knows for sure, but Apple has definitely been getting serious about augmented reality, leading many analysts to suggest that a first-generation version could arrive as early as next year, or by 2021 at the latest, assuming of course that Apple stays on track and doesn’t run into any engineering snags.

[The information provided in this article has NOT been confirmed by Apple and may be speculation. Provided details may not be factual. Take all rumors, tech or otherwise, with a grain of salt.]