The ‘Babel Fish’ Feature for AirPods May Be Coming After All


Earlier this year, we heard reports that Apple had ambitious plans to turn its AirPods into a Star Trek-style Universal Translator, allowing real-time translation of live speech. When Apple unveiled iOS 26 in June, it showed off a new AI-powered Live Translation feature that could translate phone calls and caption FaceTime video calls in real time, but there was no mention of anything like this coming to the AirPods.

Unlike previous years, the AirPods didn’t get any real stage time during this year’s Worldwide Developers Conference (WWDC) keynote. In the past, Apple had bundled new AirPods features into a “Home & Audio” segment of the presentation, but that segment was missing this year, with Apple choosing to focus solely on tvOS 26 for the Apple TV instead.

Nevertheless, Apple touched on several new AirPods features coming later this year, including higher-quality audio recording and the ability to use the stem button as an iPhone camera remote. The feature slides shown at the end of each operating system segment also revealed a new sleep detection feature that would automatically pause music, along with better handling of automatic audio switching when moving to CarPlay or AirPlay speakers.

All these features appeared in the early iOS 26 betas and accompanying AirPods firmware updates, but the “universal translator” (or “babel fish” for Douglas Adams fans) was nowhere to be seen. Some believed that the sources who told Bloomberg’s Mark Gurman about the feature in March may have conflated Live Translation with something coming to the AirPods.

However, the other very legitimate possibility is that Apple was still working on it. That would be an especially valid theory this year, considering that Apple, having been stung by overpromising Siri improvements during last year’s iOS 18 announcement, has been very careful to avoid showing off anything that’s not coming in the initial iOS 26.0 release.

That’s a significant departure from the last several years of WWDC keynotes, when Apple effectively laid out a roadmap for the entire release year of iOS updates. For example, in 2017, Apple showed off Messages in iCloud and AirPlay 2 during its iOS 11 unveiling, even though neither feature shipped until iOS 11.4 the following spring.

Since Apple is being far more cautious this year, there could be many new features coming in iOS 26.1, iOS 26.2, and beyond that we simply don’t know about yet. Images found buried in the latest iOS 26 beta suggest that AirPods Live Translation is one of those features.

Multiple sources, including 9to5Mac and MacRumors, have independently unearthed an image from the Translate app in iOS 26 beta 6 that shows a set of AirPods Pro surrounded by words in several different languages.

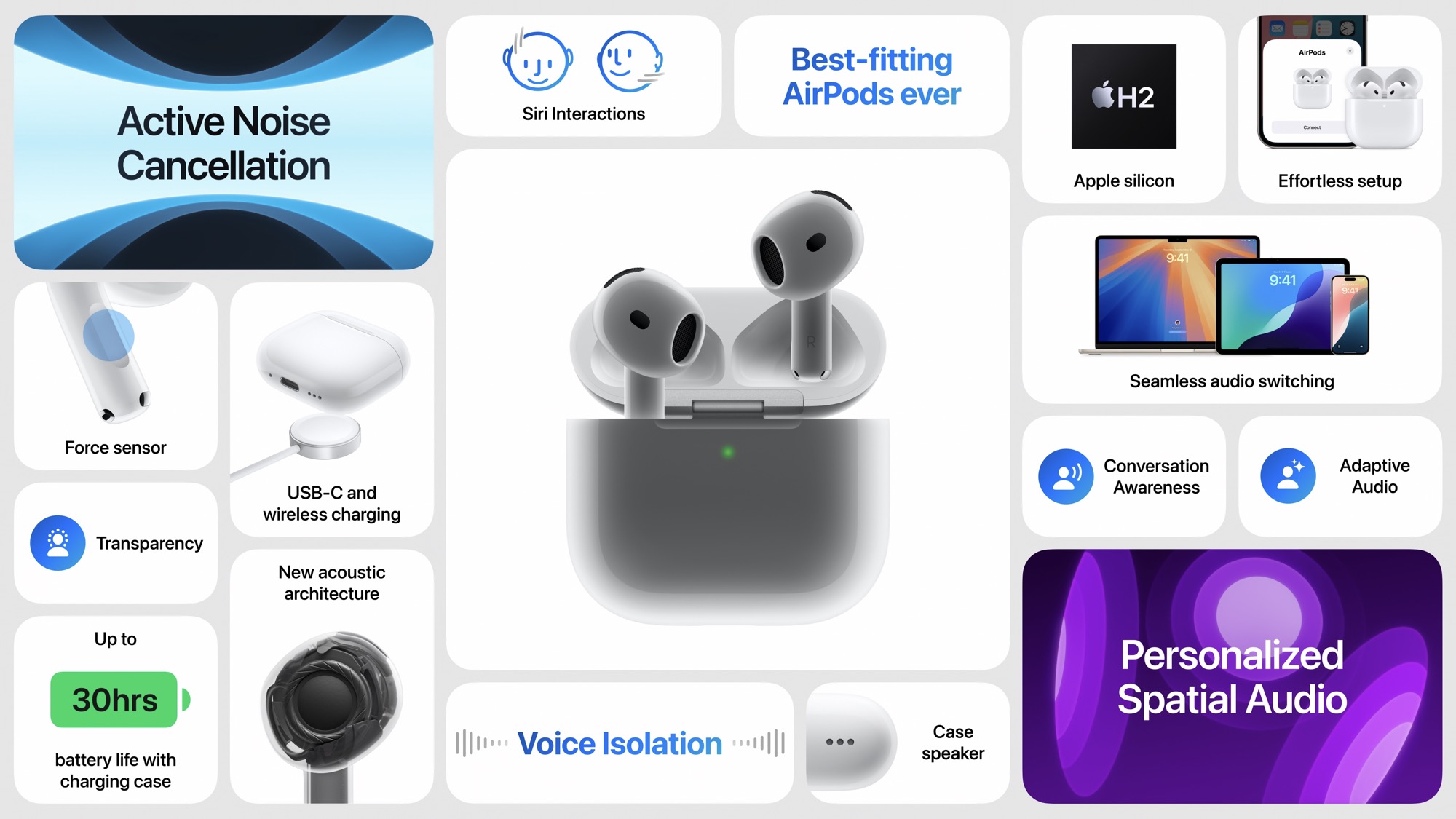

The implication is clear, especially considering where this image was found. The Translate app would be the likely place for an AirPods Live Translation feature. The image also appears to be associated specifically with the AirPods Pro 2 and AirPods 4, suggesting the feature is coming to both models, likely because they are the only ones that feature Apple’s newest H2 chip.

Little else is known about what shape this feature will take, other than the rumors we’ve already heard. “If an English speaker is hearing someone talk in Spanish, the iPhone will translate the speech and relay it to the user’s AirPods in English,” Bloomberg’s Mark Gurman said in March, adding that “The English speaker’s words, meanwhile, will be translated into Spanish and played back by the iPhone.”

Even though the feature appears limited to the most modern AirPods, there’s little doubt that an iPhone will be required to do the heavy lifting. If anything, the H2 chip is more about reduced latency, which would be crucial for real-time translation. Since the Live Translation feature in iOS 26 will only be available on an iPhone supporting Apple Intelligence, it’s a safe bet that the same requirement will apply to the AirPods “Universal Translator.”

There’s also the possibility that this could require even more processing power, making it exclusive to the A19-equipped iPhone 17 models. This could explain why Apple didn’t mention it at WWDC, as it typically waits until its fall iPhone event to show off iOS features that are exclusive to new iPhone models.

On the flip side, Apple frequently includes code and images for new features several versions before they roll out. The stuff we’re seeing in iOS 26 beta 6 could just as easily be in preparation for the feature to debut in iOS 26.1 or even a later update.

[The information provided in this article has NOT been confirmed by Apple and may be speculation. Provided details may not be factual. Take all rumors, tech or otherwise, with a grain of salt.]