Tech Leaders Raise Concern About the Dangers of AI

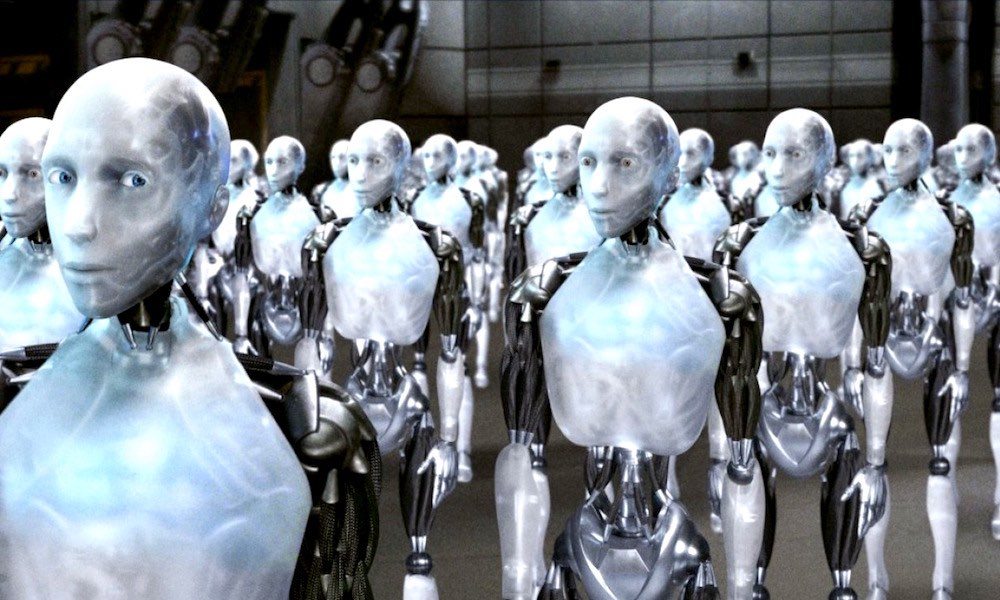

Image: 20th Century Fox, I, Robot

Amid the great strides being made in artificial intelligence, a growing group of people has expressed concern about the potential repercussions of AI technology.

Members of that group include Tesla and SpaceX CEO Elon Musk, theoretical physicist and cosmologist Stephen Hawking, and Microsoft co-founder Bill Gates. “I am in the camp that is concerned about super intelligence,” Gates wrote in a 2015 Reddit AMA, adding that he doesn’t “understand why some people are not concerned.” Gates has even proposed taxing robots that take jobs away from human workers.

Musk, for his part, was a bit more dramatic in painting AI as a potential existential threat to humanity: “We need to be super careful with AI. Potentially more dangerous than nukes,” Musk tweeted, adding that “Superintelligence: Paths, Dangers, Strategies” by philosopher Nick Bostrom was “worth reading.”

Hawking was similarly foreboding in an interview with the BBC, stating that he thinks the “development of full artificial intelligence could spell the end of the human race.” Specifically, he said that advanced AI could become self-reliant and redesign itself at an “ever-increasing rate.” Human beings, limited by biological evolution, wouldn’t be able to keep up, he added.

Indeed, advances in artificial intelligence, once seen as purely the realm of science fiction, are now more of an inevitability than a possibility. Tech companies everywhere seem to be racing to develop more advanced artificial intelligence and machine learning systems. Apple, for example, is reportedly doubling down on its Seattle-based AI research hub, and it recently joined the Partnership on AI, a research group dominated by other tech giants such as Amazon, Facebook and Google.

Like every advance in technology, AI has the potential to make amazing things possible and our lives easier. But ever since humanity first began exploring the concept of thinking machines, the idea has also been closely linked to the trope of AI as a threat or menace. Skynet from the “Terminator” series comes to mind. Even less apocalyptic fiction, like “2001: A Space Odyssey,” paints AI as something potentially dangerous.

As Forbes contributor R.L. Adams writes, there’s little that could be done to stop a malevolent AI once it’s unleashed. True autonomy, as he points out, is like free will, and someone, man or machine, will eventually have to determine right from wrong. Perhaps more worryingly, Adams also notes that AI could be weaponized to wreak untold havoc.

But even without resorting to fear-mongering, it might be smart to at least be concerned. If some of the greatest minds in tech are worried about AI’s potential as a threat, why aren’t the rest of us? The development of advanced artificial intelligence raises complicated moral and philosophical questions that go well beyond the possibility of humanity’s end. In any case, whether or not AI ever causes humankind’s extinction, humanity’s efforts in the field seem unlikely to slow down anytime soon.