Personalized Spatial Audio in iOS 16 Will Revolutionize Your Listening Experience | Here’s How It Works

Credit: Hadrian / Shutterstock

As great as Apple Music’s Spatial Audio with Dolby Atmos already is, Apple has figured out a way to use the power of its TrueDepth camera and Neural Engine to make it even better.

Coming in iOS 16, Personalized Spatial Audio will tailor the Spatial Audio experience to each user by letting them create a unique Spatial Audio profile designed specifically for the shape of their head and ears.

Unlike the broader Spatial Audio with Dolby Atmos feature, which works with a wide range of wired and wireless headphones, Personalized Spatial Audio will require a compatible set of AirPods. Right now, that means the AirPods Max, AirPods Pro, and AirPods 3, and the list will presumably include any new AirPods that Apple releases this year and beyond. The configuration screens suggest that some Beats headphones will also be supported, although Apple hasn’t yet said which ones.

You’ll also need an iPhone with a TrueDepth camera since Apple’s machine learning algorithms use that to scan your head and ears. Note that this feature is specific to iOS 16; it’s not found in iPadOS 16, at least not in these early betas, so it won’t work on an iPad Pro, even though most of those also sport TrueDepth cameras.

“Personalized Spatial Audio enables an even more precise and immersive listening experience. Listeners can use the TrueDepth camera on iPhone to create a personal profile for Spatial Audio that delivers a listening experience tuned just for them.” (Apple)

How It Works

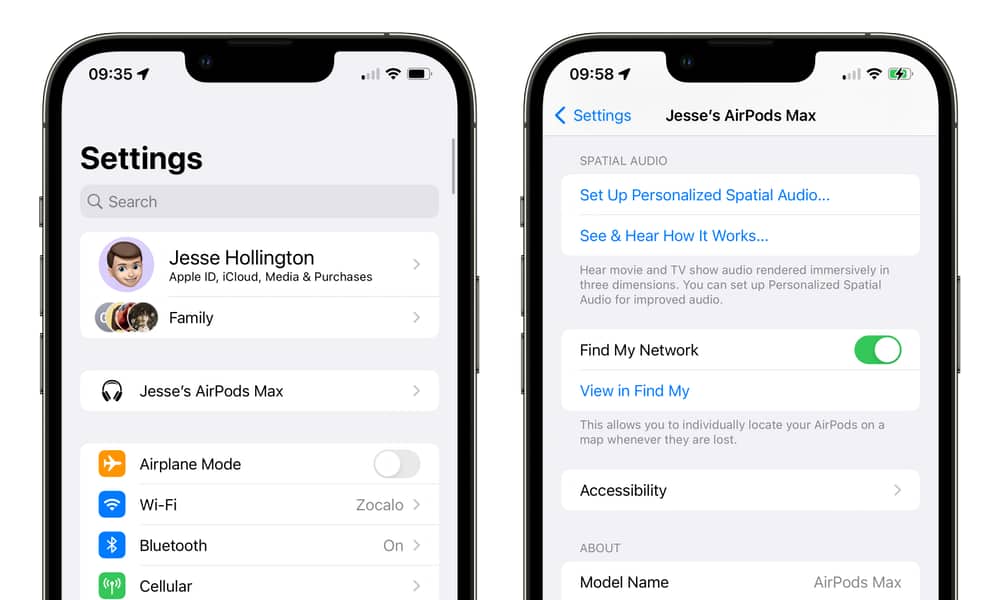

When you first connect a compatible set of AirPods to iOS 16, you should see a pop-up welcome screen prompting you to set up Personalized Spatial Audio. However, if this doesn’t show up, or if you dismiss it without going through the setup process, you can find it in your iPhone’s Settings app.

As an aside, Apple has also made it much easier to get at your AirPods’ settings in iOS 16. Whenever a set of AirPods is connected to your iPhone, it will appear at the top of the Settings app, directly between the header that includes your name and account settings and the Airplane Mode switch.

Tapping this will take you to the same AirPods settings screen previously buried in the Bluetooth or About sections.

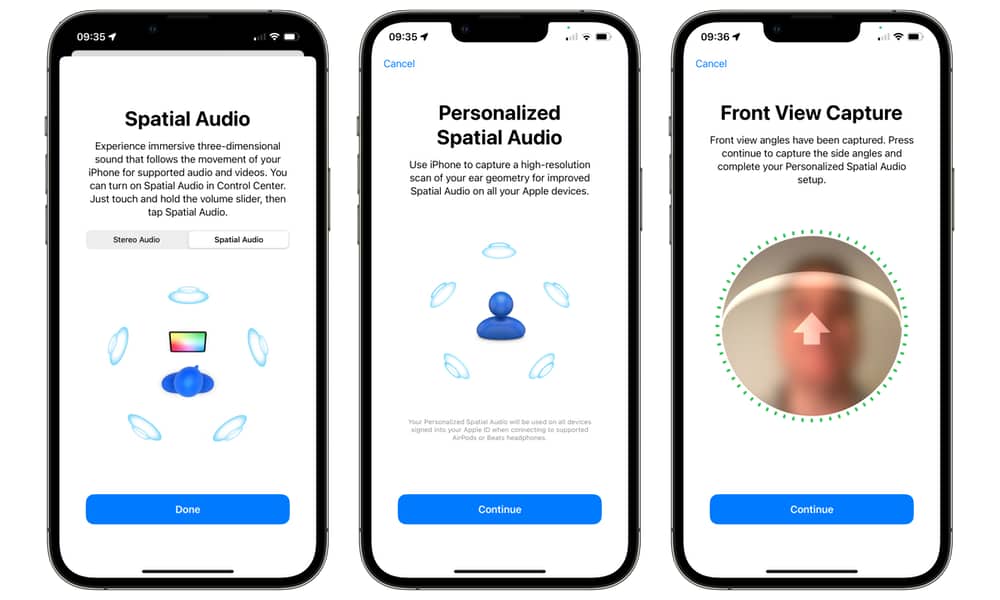

In iOS 16, a new Set Up Personalized Spatial Audio… button will start the process of teaching your iPhone about the shape of your head and ears. The TrueDepth camera takes high-resolution scans from multiple angles, and new AI algorithms use those scans to dynamically adjust the Spatial Audio experience just for you.

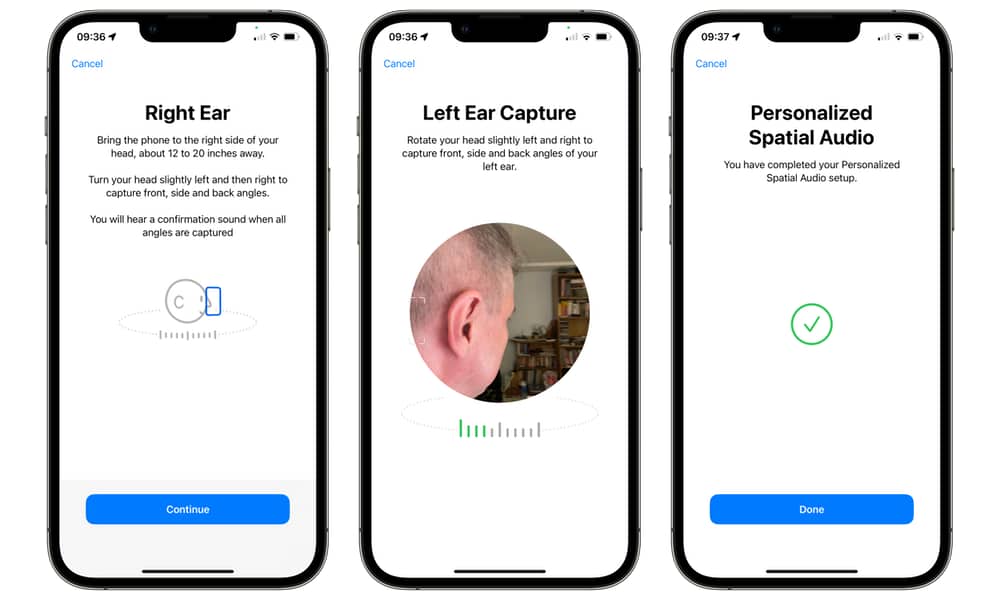

The process begins with a Face ID-style capture of the front of your head, but after that, you’ll have to hold your iPhone to each side of your head so that it can get a peek at what each of your ears looks like.

You’ll have to move your head around while doing this, just as you do for the front-facing Face ID-style scan, so your iPhone can capture multiple angles of your ears. It’s a slightly weird experience, as you can’t look at the screen while doing it, so you’ll have to rely on audible confirmation tones. One tone lets you know when it’s time to move your head, while another confirms that the capture is complete. I’m a bit surprised Apple didn’t go with voice prompts here, although that may change by the time iOS 16 is released.

Once that’s all done, you’re good to go. There are no specific settings to enable Personalized Spatial Audio; your new personal profile simply overrides the more generic Spatial Audio experience.

You only need to do this once, regardless of how many different AirPods you use. iOS 16 knows whether you’re using AirPods Max or AirPods Pro and can use what it’s already learned to adjust the acoustics accordingly.

The Personalized Experience

It’s only the first beta of iOS 16, but it sounds like Apple has already nailed Personalized Spatial Audio.

The improvement was instantly noticeable after setting up Personalized Spatial Audio with my AirPods Max. It wasn’t so much that the depth of the audio had increased significantly, but rather that everything now “clicks” in a way that it didn’t before.

The standard Spatial Audio feature was still great with most songs, but there were times when things just felt a little “off” or unbalanced. Sometimes it just felt like there was too much reverb — like I was listening in a large, empty concert hall where the acoustics weren’t quite right.

I’d always blamed that on the individual mixes — and we know that’s true in some cases — but Personalized Spatial Audio fixes nearly all these problems. There’s a more nuanced sound that comes through, especially on the AirPods Max, and it feels a lot more like listening to a set of stereo HomePods (the originals, not the HomePod mini).

The improvement on the AirPods Pro is even more remarkable. While these don’t provide the same depth of sound as the AirPods Max, they suffered more from acoustic artifacts sometimes introduced by Apple’s Spatial Audio rendering algorithms. With Personalized Spatial Audio, these have disappeared almost entirely.

Notably, this doesn’t require any new firmware on the AirPods side since the iPhone does all the Spatial Audio processing. Future AirPods firmware updates may tweak this even more, but the feature works great with the AirPods Max and AirPods Pro as they exist today. All you need is iOS 16, and since Personalized Spatial Audio is already live in the first developer beta, it should be ready to go when the first public betas arrive in a few weeks.