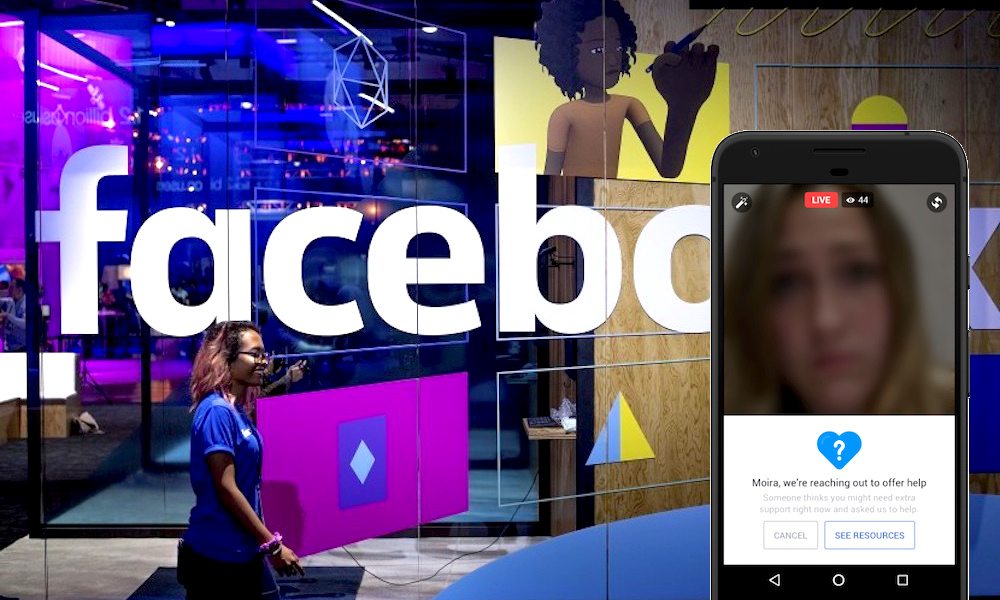

Facebook Rolls out New AI to Identify and Assist Suicidal Users

In a blog post published this week, Facebook announced the rollout of a new suite of artificial intelligence (AI)-based software tools designed not only to detect, but also to provide assistance to, individuals whose posts express suicidal thoughts.

“When someone is expressing thoughts of suicide, it’s important to get them help as quickly as possible,” explains Guy Rosen, Facebook’s VP of Product Management, noting that “Facebook is a place where friends and family are already connected, and we are able to help connect a person in distress with people who can support them.”

The new software tools are part of the company’s broader push to “identify situations” (timeline posts, uploaded photos, or live video streams) in which a user may hint at or openly express suicidal thoughts or tendencies. For instance, the software is designed to analyze the comments on a potentially problematic post, and Facebook specifically pointed to comments such as “Are you okay?” as a possible indication that something is wrong.

According to Facebook, the initiative will involve:

- “Using pattern recognition to detect posts or live videos where someone might be expressing thoughts of suicide, and to help respond to reports faster.”

- “Improving how we identify appropriate first responders.”

- “Dedicating more reviewers from our Community Operations team to review reports of suicide or self harm.”

Additionally, Facebook noted that it currently has employees working 24 hours a day, 365 days a year, whose job is specifically to monitor the site for posts “suggesting a user might be suicidal.” Moreover, in line with the goal it describes as ‘Getting Our Community Help in Real Time,’ Facebook says it is working closely with local police departments, first responders, and community support groups to identify and assist people in distress in a timely manner.

The company says its new approach was developed in collaboration with a number of leading mental health organizations, including save.org and the National Suicide Prevention Lifeline, and that it also drew on “input from people who have had personal experience thinking about or attempting suicide.”

“With the help of our partners and people’s friends and family on Facebook, we hope we can continue to support those in need,” the company said.