Prepping for Communication Safety

Not surprisingly, the iOS 15.2 beta hides a few things in the code that don’t yet appear to be ready for public view, and according to the folks at MacRumors, this time around we’re seeing evidence that Apple is laying the groundwork for its new Communication Safety feature in Messages.

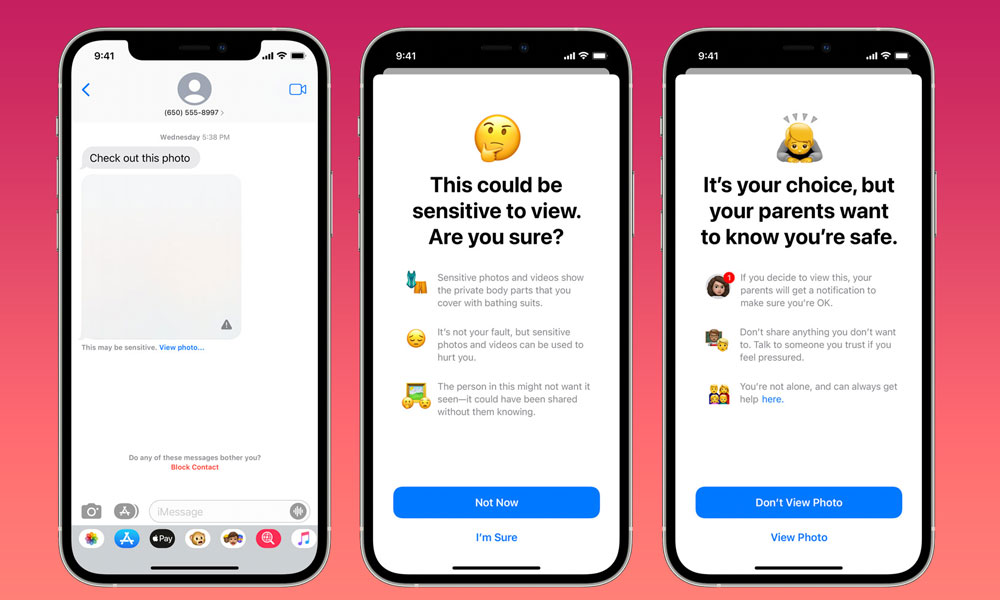

When it announced several new child safety initiatives back in August, Apple described the Communication Safety feature as an opt-in service for families to help protect kids from sending and receiving sexually explicit images. The idea was that iOS 15 would use machine learning to identify such photos, blurring them out and warning children that maybe they shouldn’t be looking at them.

The child would still have the option to proceed anyway, but kids under 13 would also be told their parents would be notified if they chose to do so. Apple noted that Communication Safety in Messages would only be available to users in an Apple Family Sharing group, would only apply to users under the age of 18, and would have to be explicitly turned on by parents before it would be active at all.

The Communication Safety in Messages announcement was accompanied by another, significantly more controversial feature, CSAM Detection, which was designed to scan photos uploaded to iCloud Photo Library against a database of known child sexual abuse material (CSAM).

By Apple’s own admission, it screwed up by announcing all of these features at the same time, since many failed to understand the differences between CSAM Detection and Communication Safety in Messages. Amidst the pushback from privacy advocates and consumers alike, Apple announced it would be delaying its plans to give itself time to collect input, rethink things, and make improvements.

While last month’s announcement suggested that the entire set of features was being put on the back burner, code in iOS 15.2 reveals that Apple may be planning to implement the potentially less controversial of the two sooner rather than later. Again, however, this is just foundational code, so we’d be careful about reading too much into it at this point. After all, code for AirTags was found in the first iOS 13 betas two years before they saw the light of day.

Note that there’s also been no evidence found of the much more widely criticized CSAM Detection in the iOS 15.2 code. This suggests that Apple may be taking the wiser approach of rolling out the two features separately, thereby avoiding the confusion from August, when many users conflated the relatively innocuous family scanning of kids’ content in Messages with the more serious law enforcement aspects of the CSAM Detection feature.

Communication Safety was intended solely to help parents know what their children were doing, with absolutely no information leaving the family’s devices, while CSAM Detection was intended to look for known child abuse content within users’ iCloud Photo Libraries. It’s fair to say that Apple is going to have to involve many more stakeholders and do its political homework properly before CSAM Detection makes a return — if it makes a return at all.