‘Visual Look Up’ Finally Goes International in iOS 15.4

Credit: Jesse Hollington


One of the more unusual limitations in last fall’s release of iOS 15 was the new Visual Look Up feature, which turned out to be available only in the United States at launch. Fortunately, it looks like those outside of the U.S. haven’t had to wait too long, as Apple has quietly expanded it to several other countries with this week’s release of iOS 15.4.

Along with Live Text, Visual Look Up was one of the coolest new features to come to the iOS 15 Photos app, employing the machine learning power of Apple’s newest A-series Bionic chips to accurately identify objects in photos such as pets, plants, and landmarks, and let users pull up more detailed information about them.

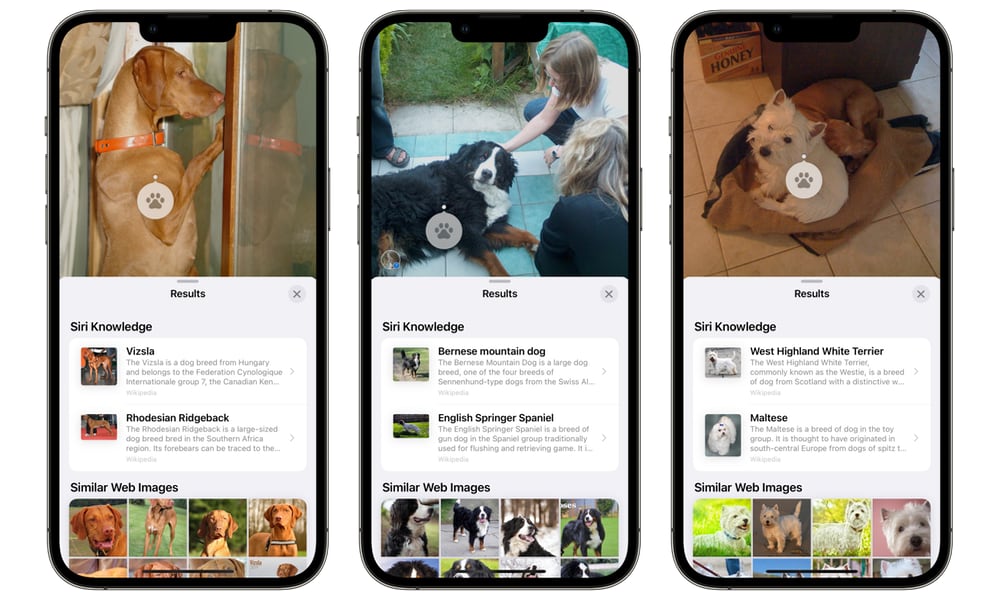

Although the iPhone and iPad have been able to identify these kinds of objects in photos since iOS 10 — and do it all entirely on-device — Visual Look Up takes that to a whole new level. For example, before Visual Look Up came along, your iPhone knew what a picture of a dog looked like, but it couldn’t tell you what kind of breed that dog was.

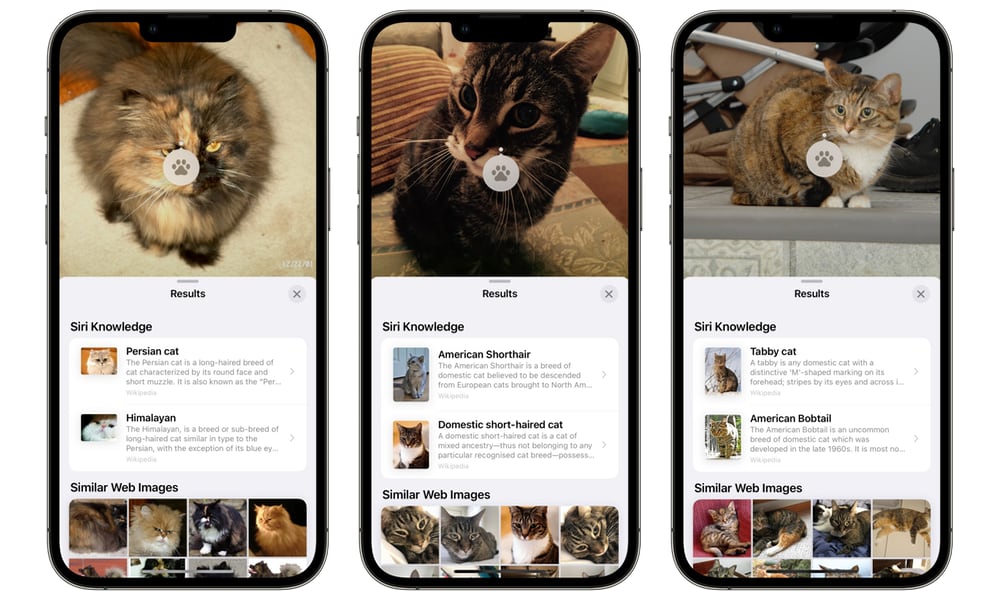

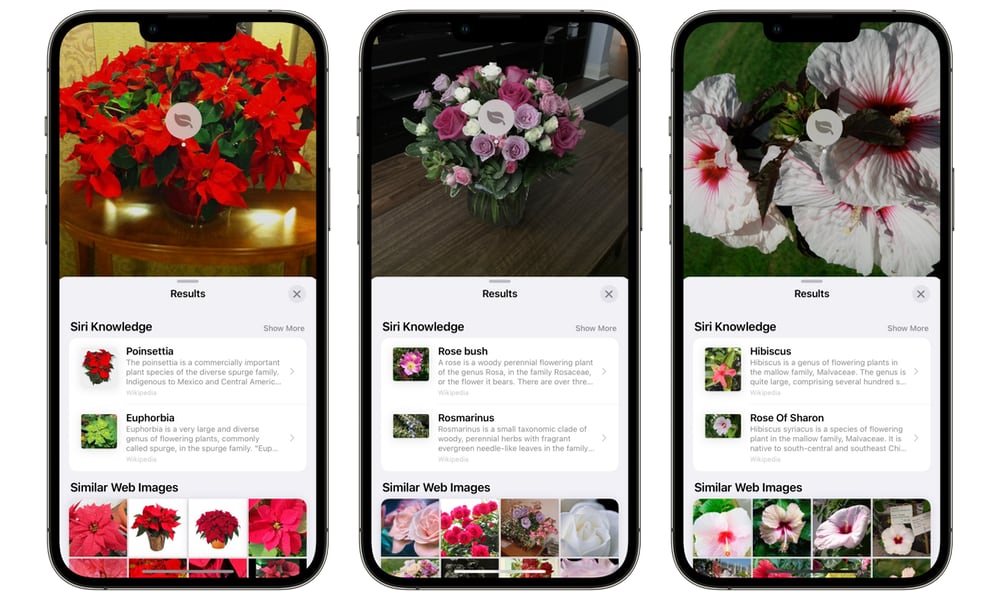

With iOS 15, your iPhone and iPad know the difference between a Bernese mountain dog and a Vizsla, a Persian cat and an American Shorthair, or a poinsettia and a rose bush.

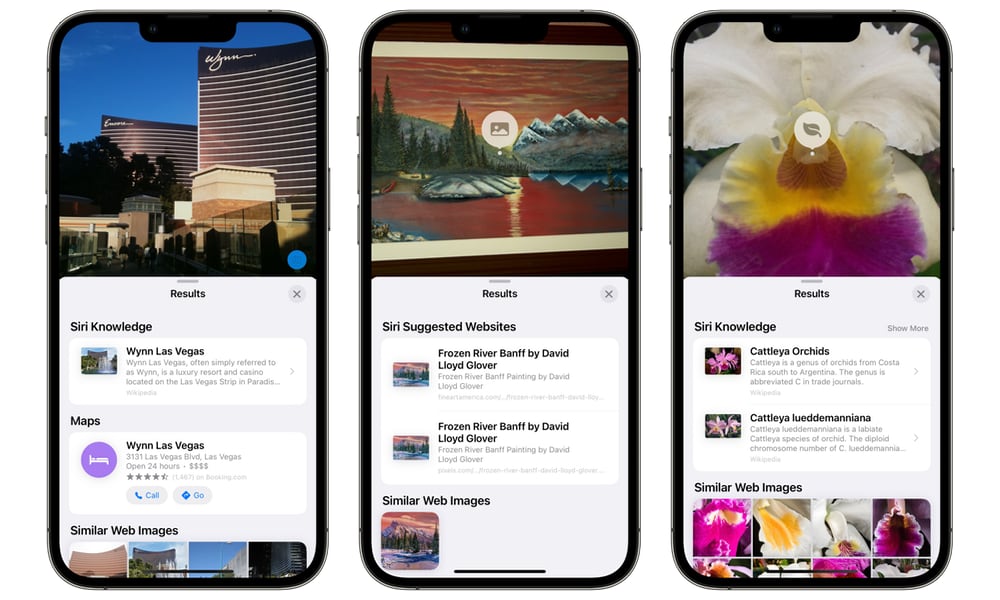

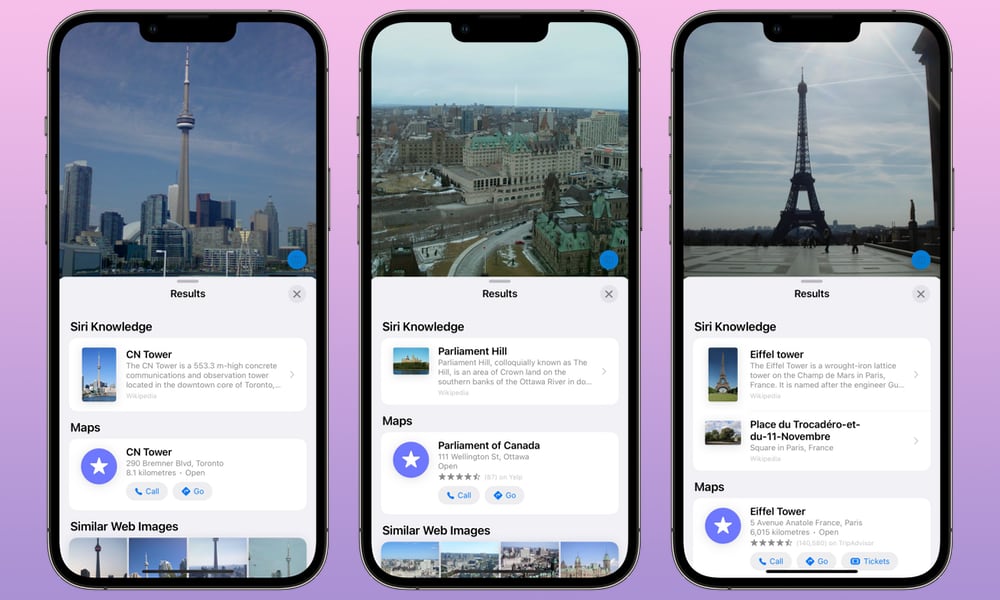

Visual Look Up can also identify many specific landmarks around the world, and it’s not doing this just by looking at where the photo was taken; it’s using machine learning to figure out what many landmarks look like.

While it’s understandable that this may have been initially limited to the English language, we’re guessing the U.S.-only rollout may also have been to give Apple time to expand its database of landmarks into other countries.

According to Apple’s iOS and iPadOS Feature Availability page, which provides a comprehensive list of where each of Apple’s features is available, Visual Look Up has now been expanded to many other English-speaking countries, as well as into French, German, Italian, and Spanish.

Specifically, Visual Look Up is now available in Canada, Australia, and the U.K., as well as Indonesia and Singapore, as long as the iPhone’s language is set to English. It also works in French in France, in German in Germany, in Italian in Italy, and in Spanish in Spain, Mexico, and the United States.
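For readers who think in code, the language-and-region rules above can be sketched as a simple lookup table. This is only an illustration built from the list in this article: the table name `VISUAL_LOOK_UP_REGIONS` and the function `visual_look_up_available` are hypothetical, not anything Apple provides, and the region for French is an assumption.

```python
# Hypothetical lookup table assembled from the countries named in this article.
# It is not an Apple API and may not match Apple's Feature Availability page exactly.
VISUAL_LOOK_UP_REGIONS = {
    "en": {"US", "CA", "AU", "GB", "ID", "SG"},
    "de": {"DE"},
    "it": {"IT"},
    "es": {"ES", "MX", "US"},
    # French is named as a supported language; France as its region is an assumption.
    "fr": {"FR"},
}


def visual_look_up_available(language: str, region: str) -> bool:
    """Return True if the language/region pair appears in the table above."""
    regions = VISUAL_LOOK_UP_REGIONS.get(language.lower(), set())
    return region.upper() in regions


if __name__ == "__main__":
    # English in Canada is listed; German in the United States is not.
    print(visual_look_up_available("en", "CA"))
    print(visual_look_up_available("de", "US"))
```

The point of keying on language first is that the feature depends on both settings together: Spanish works in the United States, for example, while German does not.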

How to Use Visual Look Up

Visual Look Up is one of those features that, for the most part, just works. There’s nothing you need to do to switch it on, although users in countries where it wasn’t previously available likely haven’t experienced it yet.

Once you’ve updated to iOS 15.4 (or iPadOS 15.4), you’ll be able to locate photos containing recognizable objects; pets, plants, and landmarks are the most common. iOS 15 can also recognize artwork, and although it draws on an extensive database, results can be hit-and-miss unless the photo contains a well-known piece.

Also note that, just like Live Text, you’ll need an iPhone or iPad with an A12 Bionic chip or later to use Visual Look Up. The A12 was Apple’s first chip with an 8-core Neural Engine; the little two-core version on the A11 Bionic obviously doesn’t have the chops for this.

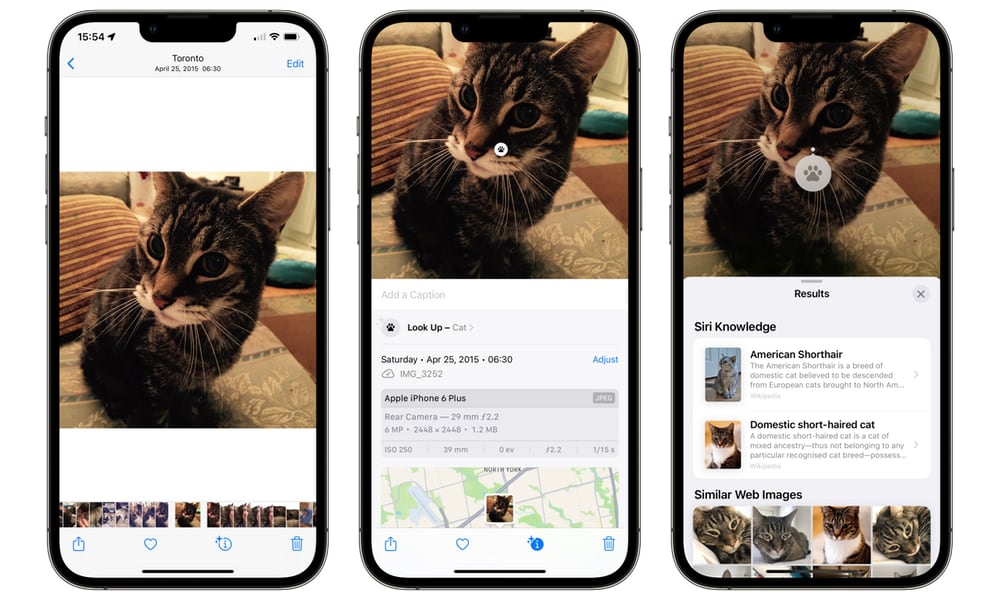

- To check if there’s Visual Look Up information available for a photo, either look for a star in the top-left corner of the “Info” button, or swipe up to bring up the details for that photo.

- An icon will appear over any objects for which you can look up more info, with a glyph denoting the type of object, such as a paw for a pet, or a leaf for a plant or flower. You’ll also see a Look Up option above the normal photo details, noting the type of object detected.

- Tap on either the icon or the “Look Up” line to bring up more details. This will include more specific Siri knowledge showing the breed of cat or dog, the type of plant or flower, or the name of a landmark or work of art. Landmarks will also include an Apple Maps link to their specific location. You may see Siri Suggested Websites and Similar Web Images shown here as well. You can tap on any of these areas to explore further.

Apple seems to have done a good job of refining Visual Look Up since the iOS 15 betas last summer, but that’s generally been in the direction of making it more precise and exclusive, rather than broadening the categories of what’s supported.

For instance, some iOS 15 betas identified a Holland Lop rabbit as a Shih Tzu. Now, with iOS 15.4, the same rabbit photos don’t offer any Visual Look Up information at all. As far as we can tell, Apple’s definition of “pets” is limited to cats and dogs, so those scouting for clues on more exotic pets like birds, bunnies, and reptiles will be disappointed.

Still, it’s a nice example of what machine learning can do, and we have no doubt that Apple will continue to refine Visual Look Up