Intelligent Input

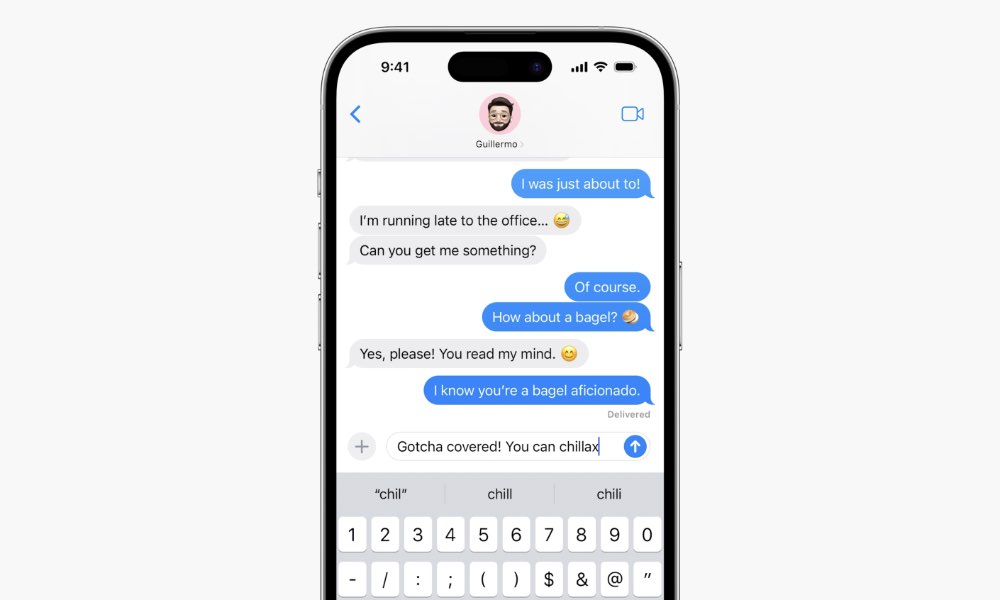

Autocorrect has long been both the best and the worst thing about owning an iPhone. The intelligence Apple has baked into its keyboard is crucial for making a touchscreen keyboard useful rather than frustrating, but as we all know, autocorrect doesn't always get things right.

Fortunately, Apple is working to improve that in iOS 17, tying autocorrect even more closely to the Neural Engine and its on-device machine learning to deliver better word predictions and corrections. Thanks to the power of Apple silicon, the iPhone can run a transformer language model every time you tap a key.

iOS 17 also adds sentence-level autocorrection, so it can go back and fix a word after you've moved on, based on the context of the whole sentence rather than just the individual word. It's also going to be much less reluctant to learn certain words; as Apple SVP Craig Federighi said, "when you just want to type a ducking word, the keyboard will learn that too."

The Neural Engine is also being applied to Dictation, which promises even more accurate speech recognition.