Disturbing Video Technology Makes It Easy to Alter What Someone Says

Credit: YouTube


Scientists have created a software tool that makes creating a deepfake video of someone speaking as simple as typing or editing a few words. That should worry you.

If you aren’t familiar, deepfake is a term for an artificial intelligence-based technology that can make someone in a video appear to say something they never said. As you can imagine, that has some incredibly scary implications.

A team of researchers from Stanford University, Princeton University, the Max Planck Institute for Informatics and Adobe Research has created a tool that illustrates just how easily someone could deceptively edit a video.

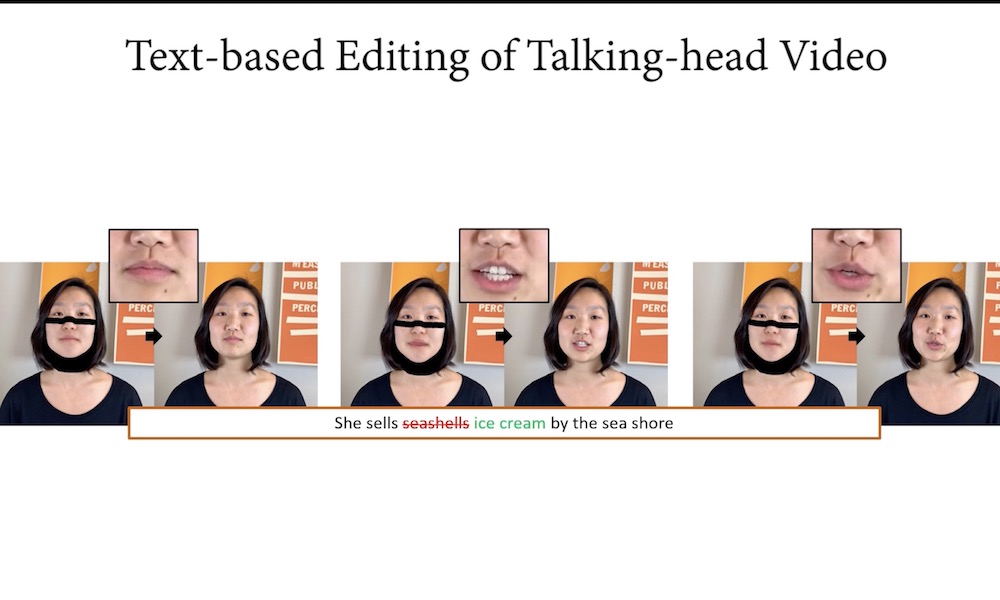

The researchers’ work was detailed in a new white paper titled “Text-based Editing of Talking-head Video.” While the research behind the paper is pretty complex, the proof-of-concept tool that the researchers developed is frighteningly easy to use.

Essentially, all someone has to do is edit the text transcript of a video. By doing so, they can add, delete or completely change the words that a person is speaking. As a result, the “talking head” in the video can be made to say just about anything.

You can see an example of the scientists’ work and the resulting tool in the video below.

Of course, an edited video isn’t automatically concerning unless the viewing audience thinks it is legitimate. To test how convincing the deepfakes were, the researchers also ran tests by showing the videos to a group of 138 volunteers.

Around 60 percent of the participants thought the deepfake videos were real. That doesn’t sound too high, until you realize that only around 80 percent thought the original, unedited clips were legitimate.

Before you get too worried, it’s worth going over some of the tech’s limitations. For now, the tool only works on talking-head-style clips. It also can’t change the tone of voice or mood of the speaker without “uncanny results.” If someone does something as simple as move their hands while speaking, it could also derail the technology.

Also, perhaps thankfully, the tool isn’t currently available to the public. In a blog post, the researchers note that there are some serious ethical considerations around releasing it.

While such technology could have legitimate uses, such as fixing flubbed lines in a movie, it could also have some dangerous consequences since, of course, it won’t just be used for legitimate purposes. Deepfakes and other edited videos are often used in viral smear campaigns against politicians and other public figures.