Apple’s Changing the Game for Those with Limited Hearing, Saving Aural Health for Everyone Else

Credit: Nikias Molina


Apple is working to become a truly inclusive tech company. Whether or not you have a disability, Apple has you covered.

In the past, we saw Voice Control, a feature that lets you control your iPhone using only your voice. We also saw the Noise app, introduced in watchOS 6, which lets you know when the sound level around you could damage your hearing over long exposure.

With the introduction of iOS 14 and watchOS 7, the company continues to find ways to help people with different abilities around the world.

This time, its primary focus is hearing difficulties.

New features, such as Sound Recognition and Sign Language Detection, will be available for everyone this fall on their iPhones and Apple Watches.

That said, you can try these features right now if you've already installed the beta versions of iOS 14 and watchOS 7. Either way, here's every new accessibility feature you can try.

Excessive Volume Warnings

Apple is concerned about the noise we hear every day. That's why the company created the Noise app to protect your hearing from the sounds around you. Now, the company is taking a different approach by protecting you from the noise coming from your headphones.

The hearing features on your iPhone and Apple Watch will now help you keep track of the decibels you're exposed to each week and will turn the volume down if needed.

The World Health Organization, or WHO, says that a person can be exposed to 80 decibels of noise for about 40 hours a week without damaging their hearing. Anything beyond that can harm your hearing in the long run.

To prevent this, your iPhone and Apple Watch will let you know once your headphone listening exceeds this weekly limit. When that happens, you'll get a notification saying that the volume will be turned down.
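To make the "80 decibels for 40 hours a week" figure more concrete, here is a small Python sketch that treats it as a weekly listening budget. It is not how Apple calculates exposure. It assumes the equal-energy rule used in WHO safe-listening guidance, where every 3-decibel increase halves the allowed listening time, and the function names are hypothetical.

```python
from __future__ import annotations

# Reference point from the article: 80 dB for 40 hours per week.
REFERENCE_LEVEL_DB = 80.0
REFERENCE_HOURS = 40.0
# Assumption: equal-energy rule, so every +3 dB halves the allowed time.
EXCHANGE_RATE_DB = 3.0


def allowed_hours(level_db: float) -> float:
    """Hypothetical helper: weekly hours at a constant level that use the full budget."""
    return REFERENCE_HOURS / 2 ** ((level_db - REFERENCE_LEVEL_DB) / EXCHANGE_RATE_DB)


def weekly_dose(sessions: list[tuple[float, float]]) -> float:
    """Hypothetical helper: fraction of the weekly budget used.

    Each session is a (level_db, hours) pair; 1.0 means 100% of the budget.
    """
    return sum(hours / allowed_hours(level) for level, hours in sessions)


if __name__ == "__main__":
    # 20 hours at 80 dB plus 5 hours at 86 dB.
    week = [(80.0, 20.0), (86.0, 5.0)]
    print(f"{weekly_dose(week):.0%} of the weekly budget used")
```

Under these assumptions, 86 dB is 6 dB louder than the reference, which cuts the allowed time to a quarter (10 hours). So 20 hours at 80 dB plus just 5 hours at 86 dB already uses the whole week's budget.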

Not only that, but you can also keep track of your headphone usage and how many decibels you've been exposed to in the Health app. Based on this data, you can also change the maximum volume for your headphones.

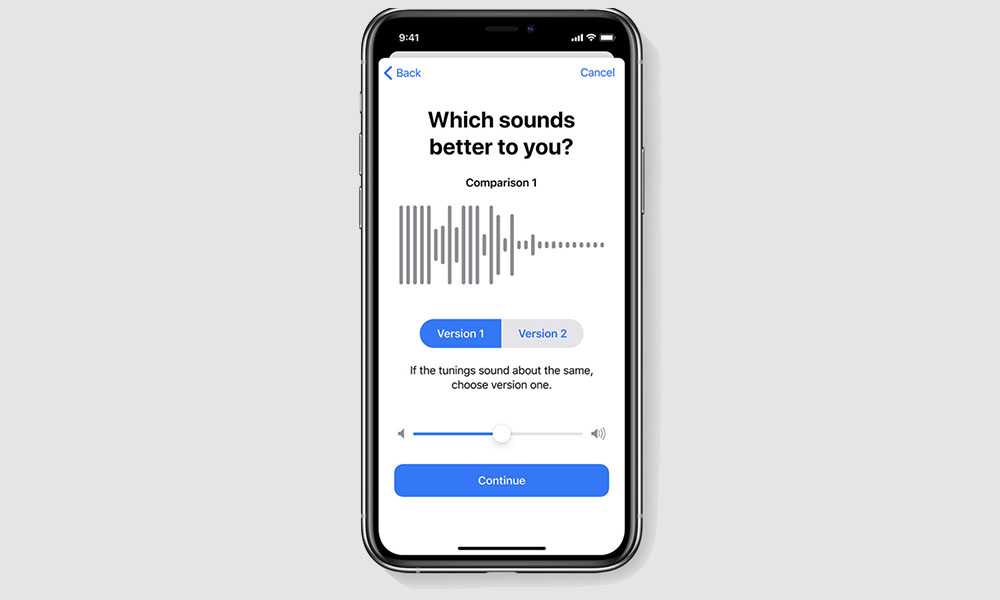

Headphone Accommodation

Another cool new accessibility feature is Headphone Accommodation, which lets you personalize how audio sounds through your headphones.

The goal of Headphone Accommodation is to help you hear subtle and soft sounds better. Whether it's a song or a phone call, you'll be able to adjust the audio to your personal needs.

Keep in mind, though, that this feature is available on headphones that use Apple's H1 chip. This chip can be found in the second-generation AirPods, AirPods Pro, and some Beats headphones, including the Powerbeats Pro.

Sound Recognition

Sound Recognition does pretty much what you would expect. Once it's set up, your iPhone will listen to its surroundings and identify certain sounds.

If your iPhone picks up a sound that it recognizes, like a doorbell or a baby crying, it will notify you on your iPhone and Apple Watch.

This is an amazing feature that should help not only people with hearing difficulties but also people who have their headphones on and are focused on something else.

That said, you shouldn't rely entirely on this feature in emergencies. As Apple itself states, you should not rely on it in situations where you could be harmed. Nevertheless, it's still a cool feature and a welcome addition to the iPhone.

Sound Recognition already knows many sounds, and you can choose which ones you want your iPhone to listen for. If you keep receiving too many notifications, you can also snooze them for up to 2 hours.

And in case you were wondering, Apple says that Sound Recognition works on-device, which is another way of saying that Apple isn't constantly listening to, or storing, the sounds your iPhone hears.

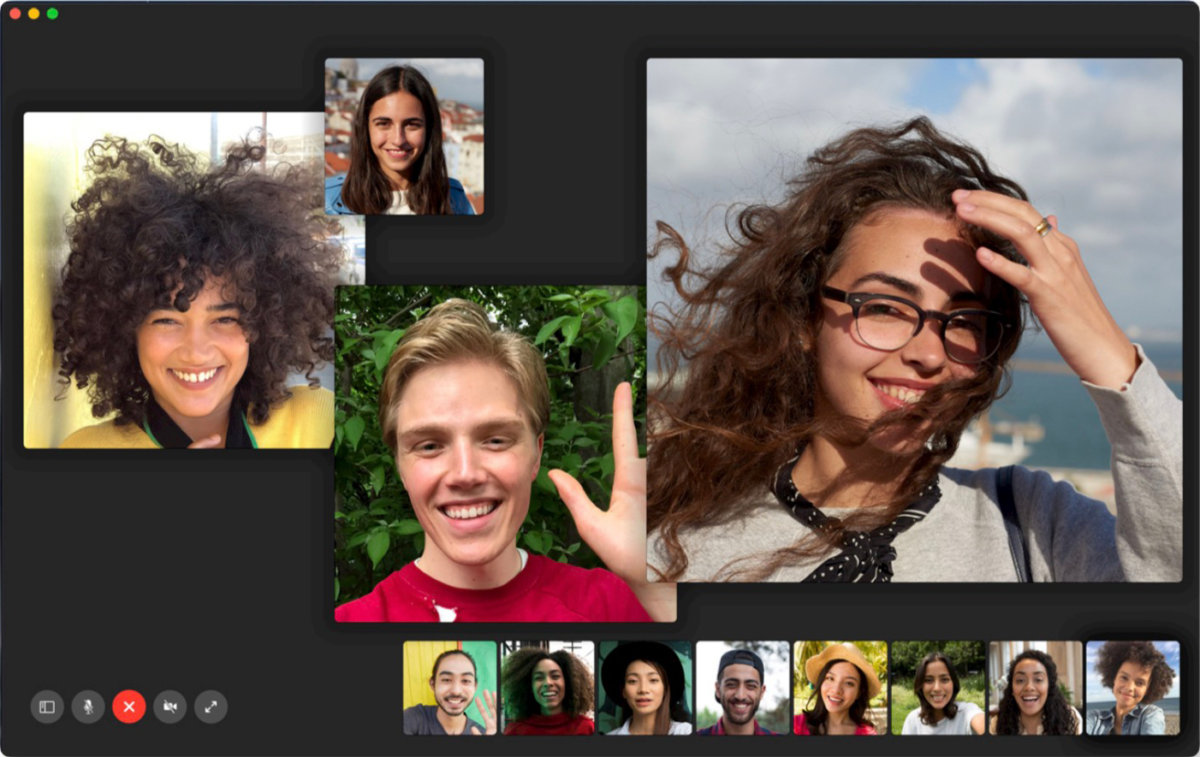

Sign Language Detection on FaceTime

FaceTime helps many people who communicate with sign language talk with friends and family, and even take part in work meetings. They can communicate easily without relying solely on text messages.

However, Group FaceTime calls used to pose a problem. The person talking would get a bigger window so everyone could focus on them. While this is useful, people using sign language couldn't be seen clearly when there were many people on the same call.

Apple thought of that and fixed it in its latest software updates. Now, if you're on a Group FaceTime call, your iPhone will recognize that you're using sign language and will give you priority on the call. This means your window becomes bigger, making it easier for everyone to communicate with you. It's one of the most inclusive features coming to iOS 14, and hopefully other companies will adopt something similar.