Apple’s Visual Intelligence

Before looking for third-party apps to help you recognize something, know that if you have an iPhone 15 Pro or an iPhone 16, you may already have everything you need, thanks to Apple Intelligence.

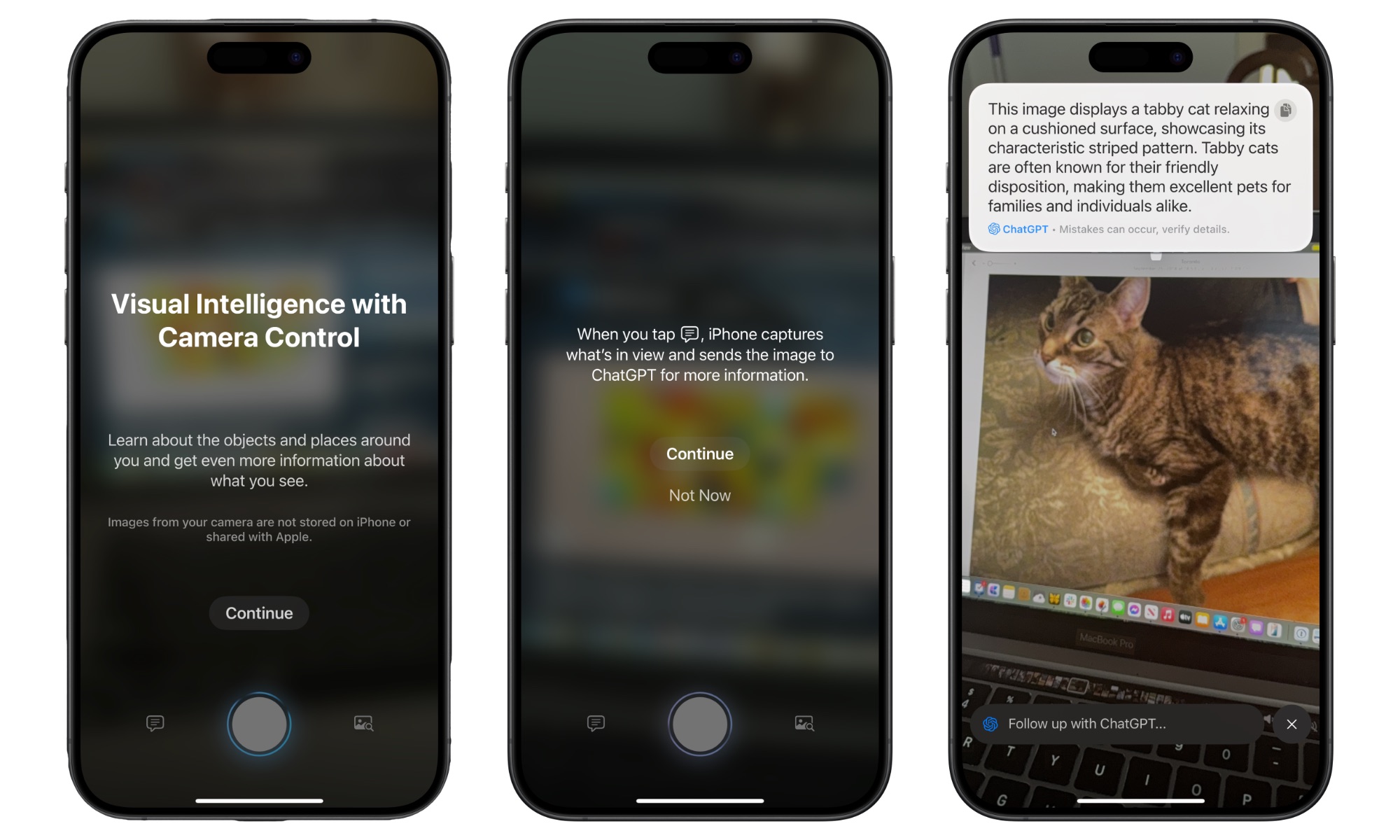

Apple introduced a new feature in iOS 18.2 called visual intelligence. This technology lets you point your iPhone’s camera at something to learn more about it. For instance, you can pull up the menu of a new restaurant you walked by or identify a plant you see in the park.

Visual intelligence uses both Google and ChatGPT to look things up. You can use Google to find a product you want to buy or to check when a store opens and closes. You can also get more personalized answers by asking ChatGPT about what’s in your camera’s view.

When it launched, this feature was limited to the iPhone 16 lineup due to its reliance on the Camera Control that’s unique to those models. However, when Apple released the iPhone 16e in February without a Camera Control, it made visual intelligence accessible from the Action button. That opened the door for its expansion to the iPhone 15 Pro and iPhone 15 Pro Max in iOS 18.4. Visual intelligence can be called up with a long press of the Camera Control on iPhone 16 or iPhone 16 Pro models, or it can be assigned to the Action button or added as a button in the Control Center on any supported iPhone.

So long as you have a compatible iPhone, you can use this technology without downloading a third-party app.