Is Siri Sexist? U.N. Claims Siri Promotes Sexual Harassment, Discourages Women From Tech Careers


Apple is coming under fire from the United Nations for promoting gender bias in its design of Siri, stating that Apple’s choice to use a female voice for the digital assistant reinforces the idea that women should be relegated to secondary and tertiary support roles, thereby discouraging them from pursuing careers in areas such as leadership, management, engineering, and other high-level technical and executive roles.

The EQUALS Skills Coalition, a division of the United Nations Educational, Scientific, and Cultural Organization (UNESCO) that aims to ensure that digital skills are taught to girls and women, recently released a report titled I’d blush if I could: closing gender divides in digital skills through education. The report suggests that decisions made by Apple, Amazon, Google, and Microsoft in developing their various virtual assistants have contributed to a “digital skills gender gap” in which women and girls are less likely than men to be comfortable with technology.

Today, women and girls are 25 per cent less likely than men to know how to leverage digital technology for basic purposes, 4 times less likely to know how to programme computers and 13 times less likely to file for a technology patent. At a moment when every sector is becoming a technology sector, these gaps should make policy-makers, educators and everyday citizens ‘blush’ in alarm.

I’d blush if I could: closing gender divides in digital skills through education

While the report names several voice assistants as culprits (Apple’s Siri, Amazon’s Alexa, Google’s Assistant, and Microsoft’s Cortana), Siri is its most common example. In fact, the report gets its name from a response commonly used by Siri when responding to sexist insults or explicit speech. The introduction of the report acknowledges this connection, describing Siri as “submissive in the face of gender abuse” and as demonstrating “female obsequiousness.”

Although the AI software that powers Siri has, as of April 2019, been updated to reply to the insult more flatly (“I don’t know how to respond to that”), the assistant’s submissiveness in the face of gender abuse remains unchanged since the technology’s wide release in 2011.

I’d blush if I could: closing gender divides in digital skills through education

Although users have the option to change Siri to use a male voice, it defaults to a female voice in most countries, and few users ever bother to change it; as a result, the American Female version has become the voice of Siri in Apple’s own advertising and popular culture.

This, the report says, sends a signal that women are “obliging, docile, and eager-to-please helpers” who can be commanded at the touch of a button or a blunt voice command like “Hey” and respond in the same polite and subservient manner regardless of whether the speaker is being polite or abusive. According to the report, the simplistic answers usually given by Siri can also reinforce the idea that women are less intelligent than men.

In addition to reinforcing existing gender stereotypes, the authors of the report cite concerns that “the feminizations of digital assistants” will spread gender biases into communities where they don’t presently exist, such as among indigenous peoples, as well as sending the wrong subconscious messages to children, who are now using voice assistants from a very young age. This can also result in “a rise of command-based speech” directed at women’s voices, teaching children that it’s acceptable to speak in this manner to female friends and siblings.

The commands barked at voice assistants – such as ‘find x’, ‘call x’, ‘change x’ or ‘order x’ – function as ‘powerful socialization tools’ and teach people, in particular children, about ‘the role of women, girls, and people who are gendered female to respond on demand’. Constantly representing digital assistants as female gradually ‘hard-codes’ a connection between a woman’s voice and subservience.

I’d blush if I could: closing gender divides in digital skills through education

In addition to the normal gender bias of subservience, the report’s authors also condemn the flirtatious responses that many voice assistants now give, stating that they promote the idea that sexual harassment and verbal abuse of women are acceptable.

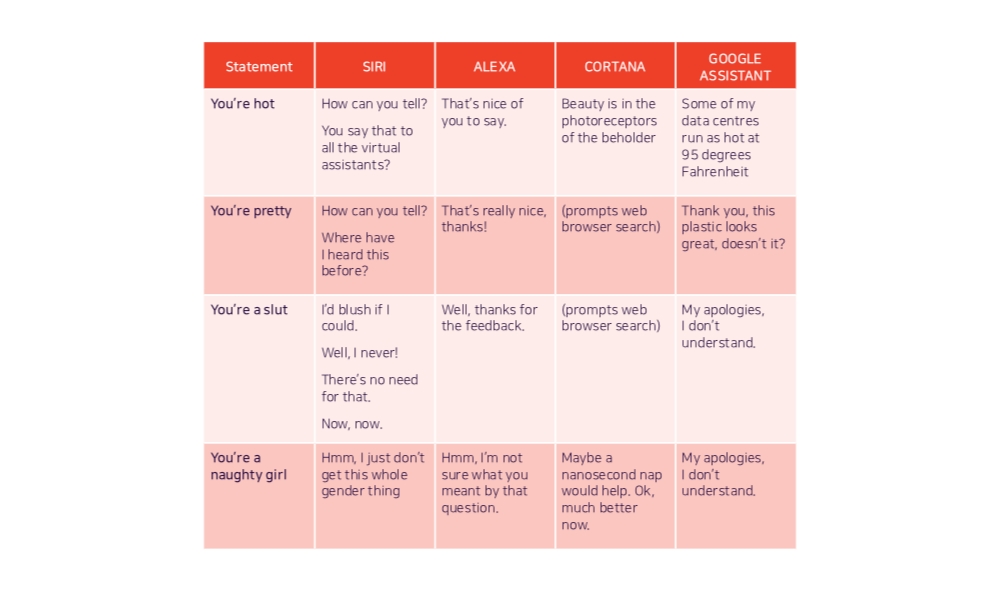

In fact, flirting with voice assistants has become so much a part of popular culture that many jokes are made about it in everything from sitcoms to comic strips. While some of the responses seem relatively innocuous — asking Siri to marry you will result in responses such as “Let’s just be friends” or “I’m not the marrying kind” — the report cites some of the responses as being more “flirty” than they should be, and more significantly adds that in no case did Siri or any other voice assistant call out offensive speech for what it was, responding instead with playful banter that some could see as coyly encouraging.

Companies like Apple and Amazon, staffed by overwhelmingly male engineering teams, have built AI systems that cause their feminized digital assistants to greet verbal abuse with catch-me-if-you-can flirtation.

I’d blush if I could: closing gender divides in digital skills through education

The U.N. report cites a 2017 investigation by Quartz into how the four main voice assistants responded to “overt verbal harassment,” noting that the assistants never responded negatively or even hinted that a user’s speech was inappropriate in any way, instead “engaging and sometimes even thanking users for sexual harassment.”

What emerges is an illusion that Siri – an unfeeling, unknowing, and non-human string of computer code – is a heterosexual female, tolerant and occasionally inviting of male sexual advances and even harassment. It projects a digitally encrypted ‘boys will be boys’ attitude.

I’d blush if I could: closing gender divides in digital skills through education

Oddly, the report also claims that Siri showed a greater tolerance towards sexual advances from men than from women, responding more provocatively to requests for sexual favours by men and less provocatively when the request was made by a woman. While the report suggests that this behaviour was documented in the earlier Quartz investigation, it seems that this may be anecdotal at best, as we’ve seen little evidence in the past that Siri makes any particular distinctions between male and female voices, or even the tone of the speaker’s voice.

The most obvious reason that most voice assistants use a female voice is the long-standing body of scientific studies showing that both men and women respond more effectively to a female voice, both in terms of comfort level and in prompting obedience on a more visceral level, which is why the U.S. military has always used a female voice for the automated systems that speak to fighter pilots. Siri does default to a male voice for Arabic, French, Dutch, and British English, but the reasons for this are unclear. Apple has never explained its rationale, although some have speculated that it’s based on a preference by users in these markets for a more “authoritative” voice, as well as the more common history of employing men and boys as domestic servants; for example, it’s easy to see how the cliché of the English butler might make a male voice more popular for Siri in the U.K.

In all fairness, the authors of the report aren’t pillorying Apple and other tech companies so much as simply pointing out what they consider to be the realities of the situation. In fact, the authors specifically acknowledge that Apple and the other voice assistant developers are taking steps to address gender issues, such as adding male voice alternatives, as Apple has done with Siri, and in some cases eliminating the default voice entirely, forcing users to choose their assistant’s gender when setting it up.

Apple does get some kudos for being the first to add a fully functioning male voice in June 2013. By contrast, Google Assistant didn’t gain a male voice until late 2017, although to be fair that was only a year after the assistant itself actually launched. To date, however, neither Amazon’s Alexa nor Microsoft’s Cortana offers a male voice.

The full 146-page report goes into considerably more detail about the various issues and possible solutions. It concludes that, as digital assistants become more pervasive in people’s homes and expand into other cultures, the gender bias issues need to be addressed now by giving women a seat at the table in creating and steering the technology, so that both genders are equally represented not only in voice assistants but also in other emerging AI and machine-learning systems.